Frontier AI training and inference now operate at unprecedented scale. The new co-packaged optics networking from NVIDIA delivers 3.5x higher power efficiency and 10x greater resiliency for large-scale GPU clusters.

Lambda, the Superintelligence Cloud, today announced it is among the first AI infrastructure providers to integrate NVIDIA’s silicon photonics–based networking.

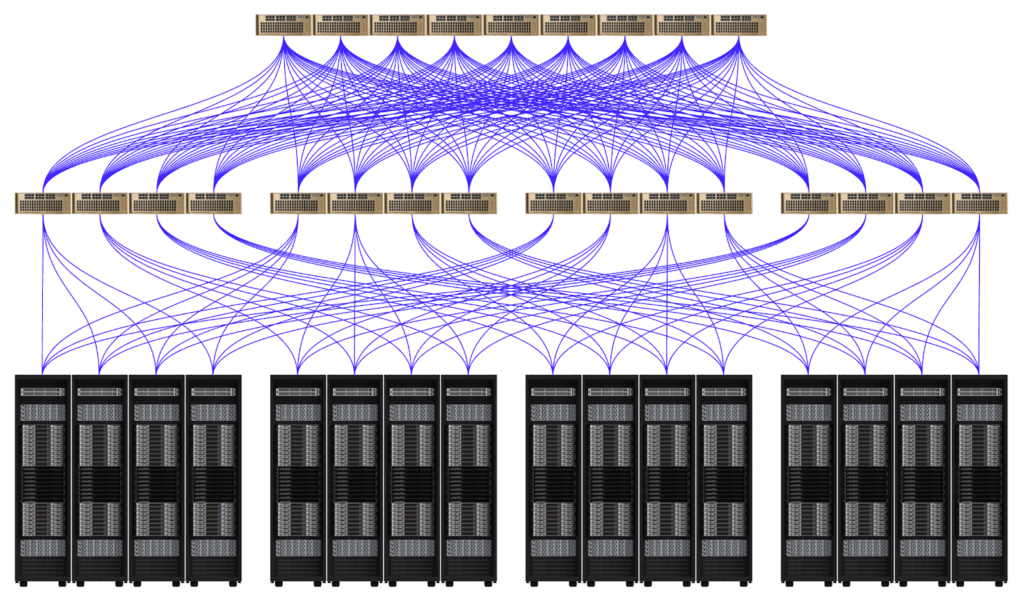

The shift to co-packaged optics (CPO) addresses a critical bottleneck in AI infrastructure. Now that AI models train on hundreds of thousands of GPUs and beyond, the network connecting them has become as important as the GPUs themselves. Traditional networking approaches are not keeping pace with this scale.

Why CPO Matters for AI Compute Networks

NVIDIA Quantum-X Photonics InfiniBand and NVIDIA Spectrum-X Photonics Ethernet switches use co-packaged optics (CPO) with integrated silicon photonics to provide the most advanced networking solution for massive-scale AI infrastructure. CPO addresses the demands and constraints of GPU clusters across multiple vectors:

- Lower power consumption: Integrating the silicon photonic optical engine directly next to the switch ASIC eliminates the need for pluggable transceivers, whose active components require additional power. At launch, NVIDIA cited a 3.5x improvement in power efficiency over traditional pluggable networks.

- Increased reliability and uptime: Discrete optical transceiver modules are among the highest-failure-rate components in a cluster, so having fewer of them means fewer potential points of failure. NVIDIA cites 10x higher resilience and 5x longer uninterrupted AI application runtime compared with traditional pluggable networks.

- Lower-latency communication: Placing optical conversion next to the switch ASIC minimizes electrical trace lengths. This shorter, simpler electrical and electro-optical signal path provides lower latency than traditional pluggable networks.

- Faster deployment at scale: Fewer separate components, simplified optics cabling, and fewer service points mean that large-scale clusters can be deployed, provisioned, and serviced more quickly. NVIDIA cites 1.3x faster time to operation versus traditional pluggable networks.

“NVIDIA Quantum‑X Photonics is the foundation for high-performance, resilient AI networks. It delivers superior power efficiency, improved signal integrity, and enables AI applications to run seamlessly in the world’s largest datacenters,” said Ken Patchett, VP of DC Infrastructure at Lambda. “By integrating optical components directly next to the network switches, we believe our customers can deploy AI infrastructure faster while significantly reducing operational costs – essential as we continue to scale to support frontier AI workloads.”

NVIDIA reports that NVIDIA Photonics delivers 3.5x better power efficiency, 5x longer sustained application runtime, and 10x greater resiliency than traditional pluggable transceivers. Co-packaged optics can provide increased compute per watt and enhanced network reliability, enabling faster model training and inference.

Built for the era of real-time AI, NVIDIA Photonics networking features a simplified design, resulting in fewer components to install and maintain. Lambda continues to provide scalable AI infrastructure, helping enterprises, research labs, and startups build multi-site, large-scale GPU AI factories.

“AI factories are a fundamentally new class of infrastructure – defined by their network architecture and purpose-built to generate intelligence at massive scale,” said Gilad Shainer, senior vice president of networking at NVIDIA. “By integrating silicon photonics directly into switches, NVIDIA Quantum-X silicon photonics networking switches enable the kind of scalable network fabric that makes massive-GPU AI factories possible.”

How Lambda Plans to Leverage It

Lambda is preparing its next-generation GPU clusters to integrate CPO networking using NVIDIA Quantum-X Photonics InfiniBand and Spectrum-X Photonics Ethernet switches. These advances in silicon-photonics switching are critical as we design massive-scale training and inference systems. For Lambda’s NVIDIA GB300 NVL72 and NVIDIA Vera Rubin NVL144 clusters, we are adopting CPO-based networks to deliver higher reliability and performance for customers while simplifying large-scale deployment operations and improving power efficiency.

This builds on Lambda’s long-standing collaboration with NVIDIA. Lambda recently achieved NVIDIA Exemplar Cloud status, validating its ability to deliver consistent performance for large-scale training workloads on NVIDIA Hopper GPUs. Over the past decade, Lambda has earned six NVIDIA awards, affirming its position as a trusted collaborator in NVIDIA’s ecosystem.

About Lambda

Lambda, The Superintelligence Cloud, is a leader in AI cloud infrastructure serving tens of thousands of customers.

Founded in 2012 by machine learning engineers who published at NeurIPS and ICCV, Lambda builds supercomputers for AI training and inference.

Our customers range from AI researchers to enterprises and hyperscalers.

Lambda’s mission is to make compute as ubiquitous as electricity and give everyone the power of superintelligence. One person, one GPU.

Contacts

Lambda Media Contact

pr@lambdal.com