Light-speed computing for the AI revolution

Just as fiber optics revolutionized global communications by replacing electrical signals with light, photonics is poised to transform AI infrastructure by fundamentally reimagining how we process and move data.

The Energy Crisis in AI

Today’s AI systems consume staggering amounts of energy. By published estimates, training a single large language model can require hundreds to thousands of megawatt-hours of electricity, while inference at scale demands massive data centers that draw as much power as small cities. As models and their usage keep growing, this energy consumption threatens to become unsustainable.

The bottleneck isn’t just in computation; it’s in the physics of moving electrical signals through metal wires and silicon. Every bit sent over a wire charges and discharges that wire’s capacitance and dissipates energy as heat, and the cost grows with distance. At data-center scale, those per-bit costs and every nanosecond of added latency compound into massive inefficiencies.

Enter Photonic Computing

Photonic computing replaces electrons with photons, the particles of light. In a vacuum, light travels at the ultimate speed limit of the universe; in an on-chip waveguide it moves somewhat slower, but it still carries signals over long distances with far less loss than copper. Unlike electrons, photons barely interact with one another, so many wavelengths can share a single waveguide with little crosstalk. That enables a degree of parallelism that is very hard to match with traditional electronics.

Key Advantages of Photonic AI

- Ultra-low latency: Optical signals avoid the resistive-capacitive delay of long electrical wires, cutting the time spent moving data between components

- Massive bandwidth: Wavelength division multiplexing enables parallel data streams

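- Energy efficiency: Light travels through a waveguide with very little resistive heating, so most of the power goes to the lasers, modulators, and detectors at the endpoints rather than to the link itself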

- Scalability: Capacity grows roughly linearly as wavelengths and links are added, avoiding the wiring complexity that balloons as electronic systems scale
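
To make the wavelength-multiplexing point concrete, here is a minimal sketch of how aggregate throughput scales when several wavelengths share one waveguide. The channel count and per-channel rate are illustrative assumptions, not figures from any specific product, and the function name is hypothetical.

```python
def wdm_aggregate_gbps(channels: int, gbps_per_channel: float) -> float:
    """Aggregate throughput of one waveguide carrying `channels` wavelengths.

    Idealized model: every wavelength carries an independent data stream
    at the same rate, with no crosstalk or coding overhead.
    """
    if channels < 1:
        raise ValueError("need at least one wavelength channel")
    if gbps_per_channel <= 0:
        raise ValueError("per-channel rate must be positive")
    return channels * gbps_per_channel


# Hypothetical numbers, chosen only for illustration.
single = wdm_aggregate_gbps(channels=1, gbps_per_channel=50.0)
multi = wdm_aggregate_gbps(channels=16, gbps_per_channel=50.0)

print(f"1 wavelength:   {single:.0f} Gb/s")
print(f"16 wavelengths: {multi:.0f} Gb/s over the same waveguide")
```

In this idealized model the physical link does not change; only the number of colors of light it carries does. Real systems lose some of that gain to channel spacing, crosstalk, and encoding overhead.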

The Fiber Optics Parallel

The transformation mirrors the fiber optics revolution of the 1980s and 1990s. Before fiber optics, long-distance communication relied on electrical signals that degraded over distance and required frequent amplification. The switch to light-based transmission enabled the global internet, high-definition video streaming, and near-instantaneous worldwide communication.

Similarly, photonic computing promises to break through the current limitations of electronic processing. Where traditional processors run into physical limits of heat dissipation and signal interference, photonic processors operate in a different domain, one where those limits are far less restrictive.

Real-World Applications

Photonic AI infrastructure would enable breakthrough applications across industries:

- Real-time language translation with no perceptible latency

- Autonomous vehicles with near-instantaneous decision-making capabilities

- Climate modeling with unprecedented resolution and speed

- Medical diagnostics processing complex imaging in real-time

- Financial modeling with microsecond decision capabilities

LightMatter: Pioneering the Transition

LightMatter is leading the photonic AI revolution with a pragmatic, phased approach that bridges today’s silicon infrastructure with tomorrow’s all-optical systems. Rather than attempting to replace entire computing architectures overnight, they’re strategically transforming AI infrastructure piece by piece, starting where it matters most.

Phase 1: Light-Enabled Silicon

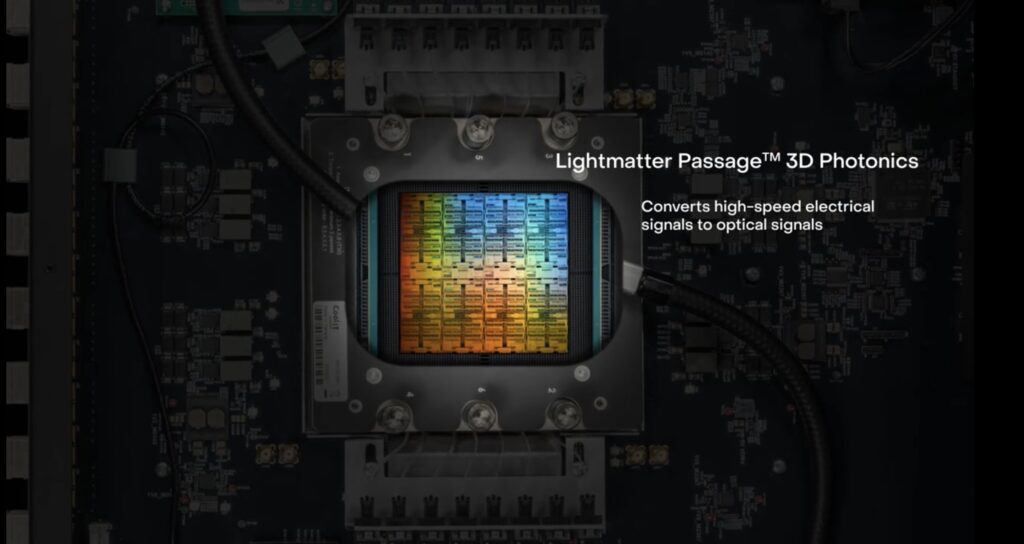

LightMatter’s breakthrough began with the interconnect, a critical bottleneck in modern AI systems. Their Passage™ interconnect replaces electrical wires between chips with light-based connections, removing much of the bandwidth limitation and energy waste of traditional copper interconnects.

This approach is genius in its simplicity: existing silicon chips continue to handle computation, but data moves between them as light, with minimal energy loss. GPU clusters that once consumed massive amounts of power just moving data between processors can now operate with dramatically improved efficiency and throughput.

LightMatter’s Interconnect Advantages

- 25x bandwidth increase over electrical interconnects

- 90% reduction in interconnect power consumption

- Drop-in compatibility with existing silicon processors

- Scalable architecture supporting thousands of connected devices
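
As a rough sanity check on what the first two figures would mean together, the sketch below converts power and bandwidth into energy per bit. The electrical baseline (10 W at 1,000 Gb/s) is a made-up placeholder, and applying both claimed improvements to the same link at the same time is an assumption; the source does not say the two numbers were measured together.

```python
def energy_per_bit_pj(power_watts: float, bandwidth_gbps: float) -> float:
    """Energy per transferred bit, in picojoules.

    1 W / (1 Gb/s) = 1 nJ/bit, so multiply by 1000 to get pJ/bit.
    """
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return power_watts / bandwidth_gbps * 1000.0


# Hypothetical electrical baseline, for illustration only.
BASELINE_POWER_W = 10.0
BASELINE_BANDWIDTH_GBPS = 1000.0

# Figures quoted in the list above.
BANDWIDTH_GAIN = 25.0    # "25x bandwidth increase"
POWER_REDUCTION = 0.90   # "90% reduction in interconnect power"

electrical = energy_per_bit_pj(BASELINE_POWER_W, BASELINE_BANDWIDTH_GBPS)
optical = energy_per_bit_pj(
    BASELINE_POWER_W * (1.0 - POWER_REDUCTION),
    BASELINE_BANDWIDTH_GBPS * BANDWIDTH_GAIN,
)
improvement = electrical / optical

print(f"electrical: {electrical:.2f} pJ/bit")
print(f"optical:    {optical:.2f} pJ/bit")
print(f"energy per bit improves by about {improvement:.0f}x")
```

Under those assumptions the baseline cancels out: energy per bit falls by 25 / (1 - 0.90) = 250x, whatever starting power and bandwidth are plugged in.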

Phase 2: Full Photonic Infrastructure

LightMatter’s long-term vision extends beyond interconnects to complete photonic computing systems. Their roadmap includes photonic neural network accelerators that perform matrix operations—the fundamental building blocks of AI—entirely in the optical domain. This represents the ultimate evolution: computation itself happening at light speed.

These full photonic systems promise to deliver the theoretical maximums of optical computing: processing speeds limited only by the speed of light, energy consumption approaching the fundamental physical limits, and massive parallel processing capabilities that dwarf today’s electronic systems.
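
For a sense of what a matrix operation "in the optical domain" can look like, the sketch below models one building block that is common in the photonics literature: an idealized, lossless Mach-Zehnder interferometer (two 50:50 beam splitters with a tunable phase shifter between them). It acts as a programmable 2x2 matrix on two optical inputs. This is a textbook model, not a description of LightMatter's hardware, and all function names here are hypothetical.

```python
import cmath
import math


def mzi_transfer(theta: float) -> list[list[complex]]:
    """2x2 transfer matrix of an ideal Mach-Zehnder interferometer.

    Built as splitter * phase(theta on the top arm) * splitter, where the
    50:50 splitter is (1/sqrt(2)) * [[1, 1j], [1j, 1]].
    """
    e = cmath.exp(1j * theta)
    return [
        [(e - 1) / 2, 1j * (e + 1) / 2],
        [1j * (e + 1) / 2, (1 - e) / 2],
    ]


def apply_matrix(matrix: list[list[complex]], fields: list[complex]) -> list[complex]:
    """Matrix-vector product: the output optical field at each port."""
    return [sum(m * f for m, f in zip(row, fields)) for row in matrix]


def powers(fields: list[complex]) -> list[float]:
    """Optical power at each port is the squared magnitude of the field."""
    return [abs(f) ** 2 for f in fields]


# Send all light into the top input and sweep the phase setting.
# The split follows sin^2(theta/2) at the top port, cos^2(theta/2) at the bottom.
for theta in (0.0, math.pi / 2, math.pi):
    top, bottom = powers(apply_matrix(mzi_transfer(theta), [1.0, 0.0]))
    print(f"theta={theta:.2f}  top={top:.3f}  bottom={bottom:.3f}  total={top + bottom:.3f}")
```

The multiplication happens as the light passes through the device; changing the phase setting reprograms the matrix. Larger matrices are typically composed from meshes of such 2x2 blocks, and a practical system also has to account for loss, noise, and conversion between the optical and electronic domains, none of which this sketch models.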

The Path Forward

LightMatter’s phased approach demonstrates the practical path to photonic AI dominance. By first solving the interconnect bottleneck with immediately deployable technology, they’re building the foundation for more revolutionary advances while delivering real value today.

The transition won’t happen overnight, but the physics is compelling. As AI models continue to grow in complexity and energy demands become unsustainable, photonic infrastructure offers a path to far more capable and efficient AI systems.

The Light-Speed Future

Just as fiber optics made the modern internet possible, photonic computing will unlock the next generation of AI—systems that think at the speed of light while consuming a fraction of today’s energy.

The question isn’t whether photonics will transform AI infrastructure—it’s how quickly we can make the transition. In the race for artificial general intelligence, light itself may be our greatest ally.