Via Satellite names Lynk and Omnispace merger among the 10 Hottest Companies in Satellite for 2026

Via Satellite’s annual 10 Hottest Companies list rounds up 10 “must watch” companies in the satellite industry, spanning constellations, manufacturing, launch, and more. Via Satellite editors chose the companies on this list based on their expected activity for the year and a mix of market share, transformational technology, ground-breaking deals, and overall industry excitement.

We're thrilled to see Trajectory portfolio company Lynk Global recognized for what the combined company would bring together in terms of "valuable assets and experience in the direct-to-device market." Together, Lynk and Omnispace are creating what will be the world's most comprehensive global multi-orbit direct-to-device (D2D) infrastructure and solution, bridging the gaps for mobile network operators globally.

The pending Lynk and Omnispace merger brings together valuable assets and experience in the direct-to-device market that could see the two companies be much stronger together than they were separately. Both were early leaders in reimagining satellite connectivity’s role in the telco ecosystem. Lynk was the first to demonstrate a text message sent from a satellite in Low-Earth Orbit (LEO) to a mobile phone on Earth in 2020. Similarly, Omnispace CEO Ram Viswanathan set out an early vision of satellite being a larger part of 5G networks in a hybrid future. Despite their early successes, neither company so far has been able to move into wide, operational deployment.

The merger announced in November will bring together Omnispace’s access to S-band spectrum with Lynk’s experience building and operating satellites and its active partnerships with mobile network operators (MNOs) around the world. Omnispace cites its market access footprint as reaching 1 billion people across the Americas, Europe, Africa and Asia, while Lynk has relationships with more than 50 MNOs. SES is also coming onboard as a strategic investor, and the combined company will benefit from SES’s relationships with telecom and government customers. And the company has a wider vision than just consumer phones: this type of connectivity could be particularly interesting for commercial and industrial vehicles, and government and utility sectors worldwide. Joining together could be the spark that leads Lynk and Omnispace to greater success in the direct-to-device (D2D) market.

Securitize and Uniswap Labs collaborate to unlock liquidity options for BlackRock’s BUIDL

New integration pairs the efficiencies of Uniswap’s technology with the familiarity of traditional markets and enables near-instant liquidity between BUIDL and USDC for investors

Uniswap Labs, the leader in decentralized finance, and Securitize, the leader in tokenizing real-world assets (RWAs), today announced a strategic integration to make BlackRock USD Institutional Digital Liquidity Fund (BUIDL) shares available to trade via UniswapX technology. This integration will enable onchain trading of BUIDL, both unlocking new liquidity options for BUIDL holders, and marking a significant step in bridging the gap between traditional finance and DeFi.

“Our mission at Labs is simple: make exchanging value cheaper, faster and more accessible,” said Hayden Adams, Uniswap Labs Founder and CEO. “Enabling BUIDL on UniswapX with BlackRock and Securitize supercharges our mission by creating efficient markets, better liquidity, and faster settlement. I’m excited to see what we build together.”

Securitize Markets will facilitate trading for any BUIDL investor who elects to participate through UniswapX’s RFQ framework. The automated system enables participants to identify the most competitive quote from an ecosystem of whitelisted market participants known as subscribers (including Flowdesk, Tokka Labs, and Wintermute), and settles the trade atomically onchain through immutable smart contracts. All investors utilizing the capability are pre-qualified and whitelisted through Securitize.
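The request-for-quote flow described above (collect quotes, keep only whitelisted subscribers, take the most competitive one, then settle both legs of the swap together or not at all) can be illustrated with a minimal Python sketch. This is not UniswapX or Securitize code: the `Quote` type, the `best_quote` and `settle_atomically` helpers, the subscriber names, and the prices are all hypothetical, and atomic onchain settlement is only imitated here by applying both balance changes to a copy and discarding the copy if either leg would fail. The sketch also assumes the investor is selling BUIDL for USDC, so "most competitive" means the highest USDC price.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Quote:
    subscriber: str          # hypothetical market-maker identifier
    usdc_per_buidl: Decimal  # price offered to an investor selling BUIDL


def best_quote(quotes, whitelist):
    """Return the highest-priced quote from a whitelisted subscriber, or None."""
    eligible = [q for q in quotes if q.subscriber in whitelist]
    return max(eligible, key=lambda q: q.usdc_per_buidl, default=None)


def settle_atomically(balances, seller, quote, buidl_amount):
    """Apply both legs of the swap, or neither.

    Works on a copy of the balances; if any balance would go negative,
    the original (unchanged) balances are returned instead.
    """
    usdc_amount = buidl_amount * quote.usdc_per_buidl
    updated = {name: dict(holdings) for name, holdings in balances.items()}
    updated[seller]["BUIDL"] -= buidl_amount
    updated[seller]["USDC"] += usdc_amount
    updated[quote.subscriber]["BUIDL"] += buidl_amount
    updated[quote.subscriber]["USDC"] -= usdc_amount
    if any(v < 0 for holdings in updated.values() for v in holdings.values()):
        return balances  # reject: no partial settlement
    return updated


# Illustrative data only; names and prices are made up.
quotes = [
    Quote("maker_a", Decimal("0.9995")),
    Quote("maker_b", Decimal("0.9998")),
    Quote("not_whitelisted", Decimal("1.0100")),
]
whitelist = {"maker_a", "maker_b"}
chosen = best_quote(quotes, whitelist)
```

Filtering by whitelist before comparing prices mirrors the release's point that only pre-qualified participants can take part: a better price from a non-whitelisted party is never considered.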

"This is the unlock we've been working toward: bringing the trust and regulatory standards of traditional finance to the speed and openness for which DeFi is known," said Carlos Domingo, CEO of Securitize. "For the first time, institutions and whitelisted investors can access technology from a leader in the decentralized finance space to trade tokenized real-world assets like BUIDL with self-custody."

With this integration of UniswapX and Securitize, investors now have the option to access available quotes across the market to swap BUIDL bilaterally with available whitelisted subscribers 24/7, 365 days a year.

“This collaboration with Uniswap Labs alongside Securitize is a notable step in the convergence of tokenized assets with decentralized finance. The integration of BUIDL into UniswapX marks a major leap forward in the interoperability of tokenized USD yield funds with stablecoins,” said Robert Mitchnick, Global Head of Digital Assets at BlackRock.

BlackRock has also made a strategic investment within the Uniswap ecosystem.

Disclosure

BUIDL holders are solely responsible for making their own decision to trade on UniswapX and do so at their own risk. BUIDL holders should review the BUIDL Offering Memorandum disclosure regarding UniswapX prior to making a decision to transact in BUIDL shares through UniswapX via Securitize. Uniswap Labs and Securitize are third party service providers in relation to BUIDL. BlackRock is not providing investment advice nor recommending to holders of BUIDL that they should use UniswapX, and BlackRock makes no assurances about the results that will be obtained thereby, the availability or performance of UniswapX, or liquidity and pricing thereon. BlackRock, in accordance with its own objectives, has made an investment within the Uniswap ecosystem. Any existing investment by BlackRock may be discontinued at any time, in accordance with BlackRock’s own objectives, and in connection with which BlackRock and its affiliates undertake no duty to provide notice of any kind to any person. BlackRock and its affiliates do not recommend, endorse, promote, provide advice or solicit investment as to, or make any representations of any kind whatsoever regarding the Uniswap ecosystem or protocol, Uniswap Labs, Uniswap Foundation, Uniswap DUNI, and/or UNI tokens to (or from) any person and have no responsibility or duty, and disclaim any and all liability, to any person in connection therewith.

About Uniswap Labs:

Uniswap Labs is a core contributor to the Uniswap Protocol – the world’s largest DEX, which has processed over $4 trillion in volume. Uniswap Labs also builds products that help users access the protocol, including the Uniswap Web App, Wallet, and Trading API. In addition, Uniswap Labs is the primary technical provider for Unichain, a DeFi-native Ethereum Layer 2 designed to be the home for liquidity across chains.

About Securitize:

Securitize, the world’s leader in tokenizing real-world assets with $4B+ AUM (as of November 2025), is bringing the world onchain through tokenized funds in partnership with top-tier asset managers, such as Apollo, BlackRock, BNY, Hamilton Lane, KKR, VanEck and others. In the U.S., Securitize operates as a SEC-registered broker dealer, SEC-registered transfer agent, fund administrator, and operator of a SEC-regulated Alternative Trading System (ATS). In Europe, Securitize is fully authorized as an Investment Firm and a Trading & Settlement System (TSS) under the EU DLT Pilot Regime, making it the only company licensed to operate regulated digital-securities infrastructure across both the U.S. and EU. Securitize has also been recognized as a 2025 Forbes Top 50 Fintech company.

About BlackRock:

BlackRock’s purpose is to help more and more people experience financial well-being. As a fiduciary to our clients and a leading financial technology provider, we help millions of people build savings that serve them throughout their lives by making investing easier and more affordable. For additional information on BlackRock, please visit www.blackrock.com/corporate

Contacts

Bridgett Frey

bridgett-frey@uniswap.org

Tom Murphy

tom.murphy@securitize.io

Grace Emery

Grace.Emery@blackrock.com

Humanoid robotics innovator Apptronik closes $935 Million Series A at $5 Billion valuation

Apptronik is a premier robotics company dedicated to building the next generation of general-purpose humanoid robots designed to work alongside humans. Its flagship humanoid, Apollo, represents a breakthrough in versatile robotics, featuring a human-centric design and an adaptable software stack that allows it to perform diverse functions—from logistics and manufacturing to retail—with the precision and safety required for industrial environments.

Repeat investors including B Capital, Google, Mercedes-Benz and PEAK6, alongside new investors including AT&T Ventures, John Deere and QIA, back Apptronik to scale production and deployment of Apollo™ humanoid robots

AI-powered robotics company Apptronik today announced a $520 million Series A-X funding round, with participation from existing investors including B Capital, Google, Mercedes-Benz and PEAK6, and new investors including AT&T Ventures, John Deere and Qatar Investment Authority (QIA). The Series A-X extension round follows a $415 million oversubscribed initial Series A raise in 2025, bringing Apptronik’s total Series A to more than $935 million and total capital raised to nearly $1 billion. After the initial Series A announcement, Apptronik continued to receive substantial inbound investor interest, leading the company to open the new extension of its Series A at a 3x multiple of the Series A valuation, underscoring strong investor confidence in Apptronik’s vision for AI-powered robots that support people in every facet of life.

With this fresh capital, Apptronik will ramp up production of its award-winning humanoid robot, Apollo™, and expand its global network of commercial and pilot deployments. The investment will accelerate time to market and enable Apptronik to invest in projects that are needed to solve the use cases of its large number of retail, manufacturing, and logistics customers, including building state-of-the-art facilities for robot training and data collection. The funding will also fuel continued innovation in the company’s pioneering human-centered robot design, paving the way for its highly anticipated new robot set to debut in 2026.

Apptronik has inked partnerships with some of the largest brands in the world, including Mercedes-Benz, GXO Logistics, and Jabil. Apptronik also has an industry-leading strategic partnership with Google DeepMind to build the next generation of humanoid robots, powered by Gemini Robotics.

“Today’s investment is a strong vote of confidence in our mission to deliver humanoid robots that are designed to work alongside humans, not just as tools but as trusted collaborators,” said Jeff Cardenas, co-founder and CEO of Apptronik. “With the backing of our longstanding investors and strategic partners, we’re poised to unveil the newest version of Apollo and maximize the impact of embodied AI across industries. Together, we’re transforming work flows, reimagining factory floors, and writing a new chapter for next-generation humanoid robots that are designed and built to drive meaningful societal progress.”

“Apptronik is setting the standard in embodied AI at scale,” said Howard Morgan, Chair and General Partner of B Capital. “At this pivotal moment, we’re proud to deepen our support as the team accelerates production and real-world deployment of AI-powered collaborators designed to work alongside people and reshape manufacturing, logistics and other mission-critical industries.”

Apollo is designed to revolutionize human-robot interaction, initially in critical industries like logistics and manufacturing, with future planned expansion into retail, healthcare, and eventually, the home. Apollo takes on physically demanding work and labor-intensive operational processes in manufacturing and logistics – working alongside human counterparts to transport components, sort, and kit, among other tasks.

About Apptronik

Apptronik is a human-centered robotics company developing AI-powered robots to support humanity in every facet of life. Our humanoid robot, Apollo, is designed to collaborate thoughtfully with humans—initially in critical industries such as manufacturing and logistics, with future applications in healthcare, the home, and beyond. Apollo is the culmination of nearly a decade of development, drawing on Apptronik’s extensive work on 15 previous robots, including NASA’s Valkyrie robot. Apptronik started out of the Human Centered Robotics Lab at the University of Texas at Austin and has nearly 300 employees. Learn more at apptronik.com.

https://youtube.com/shorts/dE0hSJ-E5ec?si=LgNub_gANPEGZQsr

Media Contact

Liz Clinkenbeard

Aerospace innovator Natilus raises $28 Million Series A to commercialize hyper efficient blended wing aircraft

Aerospace is about to change forever with Natilus's disruptive innovation and the launch of its blended-wing passenger and cargo aircraft. We are thrilled to be along for this remarkable journey, which signals a new dawn for the way we travel and lessens our impact on the planet and climate. The team's determination and tenacity in getting Natilus to this inflection point have been remarkable.

- Former Boeing Military Aircraft and Phantom Works exec joins Board of Directors to advance rapid prototyping and commercialization

- New capital will support the first full-scale flight of KONA, a next-generation regional freighter for commercial and defense logistics

- Its passenger aircraft, HORIZON EVO, will enter commercial service in the early 2030s

Natilus, a U.S.-based aerospace manufacturer of blended-wing aircraft, today announced it has secured $28 million in Series A financing. The financing was led by Draper Associates and includes strategic investors with focuses on aerospace, defense, and global freight logistics including: Type One Ventures, The Veterans Fund, and Flexport. Also participating are new investors New Vista Capital, Soma Capital, Liquid 2 VC, VU Venture Partners, and Wave FX.

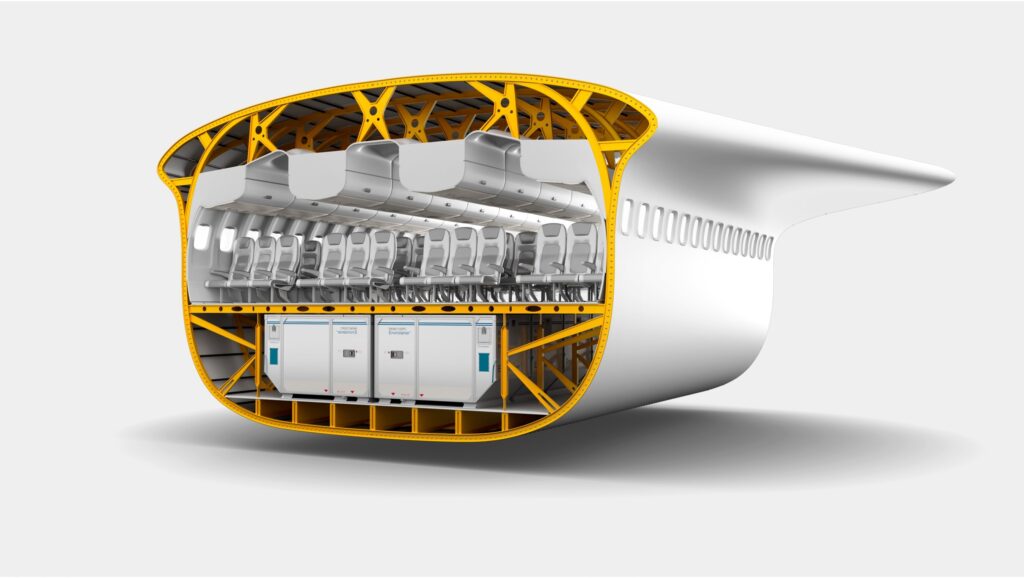

Natilus has attracted broad buy-in across defense, air freight and commercial aviation markets for the game-changing economics that its blended-wing-body platform enables. Leveraging improved aerodynamics, capacity, and efficiency, its family of blended-wing aircraft cuts fuel usage by 30% and carbon emissions and operational costs by 50%. This latest funding will allow Natilus to complete manufacturing of its first full-scale prototype of regional cargo plane KONA, which is expected to fly in the next 24 months. Natilus will also further invest in the development of its second aircraft, HORIZON EVO, a 200+-passenger aircraft intended to compete with the Boeing 737 MAX and Airbus A321neo. Today, Natilus also debuted HORIZON EVO's transition from a single-deck to a dual-deck design, implementing modifications to the profile and interior that substantially enhance passenger experience and safety.

Global aircraft demand has outpaced the combined production capabilities of Boeing and Airbus – leaving a shortfall of 15,000 planes that must be met over the next 20 years to satisfy global need. As a result, the market is hungry for a new manufacturing entrant that can navigate supply chain constraints and deliver a superior aircraft.

In the last 12 months, Natilus has made significant progress on its IP family and national manufacturing efforts. In July, it was awarded a patent for KONA's diamond-shaped cargo bay and in March, it initiated the launch of its first domestic manufacturing site search to produce KONA. Currently, Natilus's commercial product order book stands at 570+ aircraft, with reservations from major players like SpiceJet, Nolinor Aviation, Flexport, and Ameriflight – and is valued at $24 billion.

In addition to strong demand from domestic and global carriers, Natilus's optionally piloted KONA is gaining interest for its potential defense applications. With its 3.8-ton payload capacity and ability to land on shorter, gravel runways, KONA can provide intra-theater lift and transport cargo to remote locations more efficiently than ever before. The cargo freighter can support Agile Combat Employment (ACE) and logistics resupply in highly contested and austere regions such as the Indo-Pacific. Natilus has engaged in conversations with the U.S. Army, U.S. Air Force, and the Department of Defense, which see value in KONA.

"The aviation market is ripe for a new aircraft manufacturing entrant," said Tim Draper, Founding Partner of Draper Associates. "Natilus's innovative and technology-driven approach to developing blended wing aircraft has opened the doors for air freight and passenger airlines alike to embrace these new planes."

Natilus has derisked the technology and expedited widespread commercial adoption by designing its planes to use existing engine technology and include vertical tails for control and stabilization. Natilus has designed its family of aircraft to be compatible with existing gate operations and airport infrastructure to maintain interoperability.

Meanwhile, Natilus is actively pursuing FAA Part 23, Amendment 64 certification for KONA and is determining a location for its 250,000-square-foot manufacturing site, which will build 60 KONA aircraft per year. The company is on track to deliver the first KONA later this decade and the first HORIZON EVO in the early 2030s.

Natilus welcomes world-class aviation veteran and former Boeing executive, Kory Mathews, to the Natilus Board of Directors. During his tenure at Boeing, where he held positions as the VP of Phantom Works and VP and Chief Engineer of Boeing Military Aircraft, Kory led advanced aircraft design and rapid prototyping. He will leverage his experience there and now, as a Senior Partner at New Vista Capital, to provide valuable OEM and defense perspectives to the company.

"We're not just building aircraft. We're reshaping the future of aviation beyond the limitations of the tube-and-wing airframe to fundamentally transform how we transport goods and people," said Aleksey Matyushev, Co-Founder and CEO of Natilus. "With this latest funding and newest personnel additions, we are strongly positioned to bring our family of blended-wing aircraft to market, disrupting the Boeing-Airbus duopoly and bringing much-needed innovation to the aviation industry."

About Natilus

Natilus is a U.S.-based company developing a family of hyper-efficient blended-wing-body (BWB) aircraft designed to transport people and cargo more sustainably and efficiently than ever before. With 570+ aircraft pre-orders valued at $24 billion, Natilus is commercializing its BWB aircraft that unlock improved aviation economics – reducing fuel consumption by 30% and operational costs by 50% while increasing payload capacity by 40%. Founded in 2016, the Natilus team is composed of innovators from Boeing, General Atomics, Northrop Grumman, Skunkworks, SpaceX, and Piper Aircraft. Learn more at natilus.co.

Natilus aerospace unveils new hyper efficient HORIZON EVO blended wing aircraft as it readies for FAA Certification and commercial fleet integration

- HORIZON EVO fits seamlessly into existing airport infrastructure and operations

- Design includes new dual-deck cabin, improved egress, and faster turnaround times

- Critical feedback from FAA and carrier customers addresses certification requirements

Natilus, a U.S. aerospace manufacturer of blended-wing-body aircraft (BWB), today reached a critical milestone as it readies its passenger plane, HORIZON EVO, for commercial certification and fleet integration. Based on key feedback from the Federal Aviation Administration (FAA) and its base of global carrier customers, the HORIZON EVO design has evolved from a single-deck to a dual-deck aircraft. These enhancements offer more practicality in design, build, and operations – while improving overall passenger experience and safety. HORIZON EVO will enter commercial service in the early 2030s.

"In our ongoing conversations with the FAA and customers, there's real excitement around what our new airframe brings, not only in terms of fuel economics, but in addressing some of the recent and real pain points happening in aviation today around safety, passenger experience, and plane shortages," said Aleksey Matyushev, Co-Founder and CEO of Natilus. "These airline-validated insights really drove the design enhancements around dual-deck practicality, egress certifiability, and turnaround times and put us on a clear path to commercial certification."

Natilus's vision for the commercial-ready HORIZON EVO is centered on three key design pillars which maintain the same interoperability with existing airport ground infrastructure, while implementing modifications to the profile and interior that substantially enhance passenger experience and safety.

Key Design Pillars:

- Dual-Deck with a Focus on Safety: Mirroring the dual-deck layout of existing narrowbodies, HORIZON EVO offers a spacious upper deck cabin for passengers as well as a lower deck for standard cargo containers, pressurization advancements for comfort, and improved access to emergency exit paths.

- More Overhead Storage Space and Windows Throughout the Cabin: Addressing a rising pain point for carriers, HORIZON EVO's dual-deck design offers more overhead storage space for passengers. The spacious upper deck also reflects customer-driven desire for the coveted window seat with an increase in windows, novel for the BWB.

- Improved Turnaround Times & Seamless Infrastructure Interoperability: The HORIZON EVO design maintains its purpose-built fit with existing passenger and cargo ground infrastructure and now includes the ability to carry standard air-freight containers in its lower deck. To ease the burden of loading, there will be multiple aisles in the premium and economy cabins.

Key Specifications:

- Cruise Mach: 0.78+

- Cruise Altitude: 35,000 ft.

- Fuel Type: Jet A or SAF

- Engine Type: PW 1500F Geared Turbofans or CFM LEAP

- Flight Deck: All Glass Fly-by-Wire

- Aircraft Span: 118 ft.

- Aircraft Length: 110 ft.

- Gate Class: C4

- PAX Count: 150 Three Class, 200 Two Class, 250 Single Class

- Economy Layout: 4 x 3 seats

- Cabin: Height: 7 ft., Width: 26 ft.

- Lower Deck Height: 4 ft., Lower Deck Width: 18 ft.

- Exits: 8

- Upper Deck Cargo Volume: 8,500 cubic ft.

- Lower Deck Cargo Volume: 2,600 cubic ft.

- Upper Deck Cargo Containers: 16 AAA (88x125 base)

- Lower Deck: 12 LD3-45 containers

"The commercial aviation industry is facing a fast-approaching reckoning in which demand for new airplanes far exceeds current production capacity, with global fleets forecasted to double over the next 20 years, driving the need for more than 40,000 airplane deliveries. It's a crucial moment for innovation that solves the economics for carriers, the experience for passengers, and the environmental impact for aviation," said Dennis Muilenburg, CEO of New Vista Capital and former Chairman & CEO of The Boeing Company. "We believe HORIZON EVO presents a highly-attractive transformative design at the leading-edge of that solution."

Ecommerce infrastructure leader Syncware acquired MAPP Trap to expand wholesale automation capabilities

We're excited to share that Syncware is merging with MAPP Trap as we work toward creating the most comprehensive platform for wholesale commerce automation. This alliance brings together our leading wholesale automation platform with MAPP Trap's proven MAP compliance and brand protection capabilities, giving consumer product brands the tools they need to thrive in today's complex retail landscape.

With e-commerce projected to hit $6.8 trillion in 2025 and products appearing across millions of storefronts, brands need more than visibility. They need real enforcement. That's exactly what this combination delivers.

Welcome to the Syncware family, Ron Solomon and the entire MAPP Trap team!

Syncware, the leading automation platform for wholesale order operations, today announced a merger with MAPP Trap, an enterprise SaaS solution that monitors and enforces Minimum Advertised Price (MAP) compliance and Unauthorized Reseller removals across e-commerce channels.

The alliance strengthens Syncware's mission to be the operating system of wholesale commerce by expanding its product suite to include critical brand protection and pricing enforcement capabilities. This combination enables Syncware to offer brands a more comprehensive platform for managing the complexities of B2B commerce.

"The explosion of online retailers has created unprecedented challenges for brands trying to protect their margins and maintain brand integrity," said Gregg Greenberg, CEO of Syncware. "MAPP Trap's proven enforcement and unauthorized reseller removal capabilities, combined with Syncware’s automation platform, will provide brands with more tools for managing their wholesale operations."

Addressing a Critical Market Need

With e-commerce sales projected to reach $6.8 trillion globally in 2025 and online retail representing over 16% of total U.S. retail sales, brands face an increasingly fragmented retail ecosystem. Products now appear across millions of e-commerce storefronts spanning marketplaces like Amazon, Walmart, eBay, Shopify, and independent direct-to-consumer sites, often on hundreds or thousands of unauthorized channels.

This proliferation has made automated MAP monitoring and enforcement essential for brands seeking to protect margins, maintain brand equity, and preserve critical retailer relationships.

Proven Platform with Enterprise Customers

Founded in 2014 by Ron Solomon, MAPP Trap serves more than 150 brands spanning multiple industries, with particular strengths in pet care, health and nutrition, outdoor, children’s products, and industrial products. The company's customer roster includes household names such as Atomic, Hanes, Land O'Lakes, Spin Master, Ingersoll Rand, and Wahl Clipper.

The platform monitors millions of sellers and SKUs daily, providing real-time price monitoring, seller identification, and automated enforcement workflows that go beyond simple alerts to deliver actual compliance outcomes.

"When I founded MAPP Trap in 2014, it was born out of a personal need I experienced firsthand with my toy company, Swingset Press: the frustration of watching brand equity erode due to unauthorized sellers and MAP violations," said Ron Solomon, Founder of MAPP Trap. "Over the past decade, we've built a platform and a team that brands trust as business-critical. Joining forces with Syncware allows us to deliver even greater value to the brands we serve while expanding to new verticals and audiences."

Key Platform Capabilities

MAPP Trap's technology delivers:

- Comprehensive Monitoring: Product and online seller scanning across marketplaces and domains

- Seller Intelligence: Advanced algorithms and investigative techniques to identify and "unmask" sellers behind obscure storefronts

- True Enforcement: Automated communication workflows, multi-step escalation, and "white glove" enforcement services that drive real compliance outcomes

Leadership and Customer Continuity

MAPP Trap will continue to operate as an independent business unit ensuring seamless continuity of service for all customers. As such, MAPP Trap will retain its leadership and dedicated staff, with Ron Solomon continuing to lead the business and reporting to Gregg Greenberg, CEO of the combined companies. The MAPP Trap platform will maintain its current capabilities and customer experience, with no planned changes to the technology or service delivery that customers rely on today.

About MAPP Trap

MAPP Trap is a SaaS platform focused on online brand protection, MAP enforcement, and unauthorized seller monitoring across e-commerce channels. Founded in 2014, the company helps brands protect their margins, maintain brand integrity, and preserve retailer relationships through automated monitoring and enforcement.

For more information, visit www.mapptrap.com

Media Contact:

Bill Robb

Head of Marketing and Ecosystem

Syncware

Machina Labs closed $124 Million Series C to scale robotic manufacturing infrastructure for advanced mobility and defense

We couldn't be more excited for Trajectory portfolio company Machina Labs, which has announced a huge $124 million Series C to fuel its incredible trajectory.

Machina Labs CEO Edward Mehr: "Machina is no longer just building robotic machines. Machina is now the factory that uses our robotic machines. Not just a platform. A full-stack, end-to-end factory for advanced metal structures serving primes and OEMs across defense, aerospace, and automotive. We started with sheet metal forming. Now we are machining, welding, and assembling. From raw metal to finished structure.

"Fundraising is a milestone, but it is really a reflection of the team. I am deeply proud of what they have built and even more excited about what comes next. The next few years for us are about being a big part of reindustrialization in the US and among our friends. Not the nostalgic version where we rebuild yesterday’s factories and hope it works this time. We are building something fundamentally new. Factories that are distributed, flexible, and programmable. Factories that can switch products as fast as software changes. Factories that scale by replication, not by mega projects that take years to stand up.

"Our third facility, and our first high-volume one, will deploy 50 RoboCraftsman cells to prove you do not have to choose between flexibility and scale. You can have both. And we are not stopping there. Right after, we will launch our fourth facility internationally. Not in one or two years. In months. To prove this new paradigm is not only agile in manufacturing, but agile in deployment. This is what the future factory looks like."

Funding accelerates deployment of Machina Labs’ first large-scale, U.S.-based Intelligent Factory for advanced metal structures production, the company’s third facility overall, as it scales into industrial manufacturing infrastructure

Machina Labs, the leader in advanced AI-powered robotic manufacturing, has closed a Series C financing totaling $124 million to accelerate the development of its first large-scale Intelligent Factory. Woven Capital (Toyota’s growth-stage venture arm), Lockheed Martin Ventures, Balerion Space Ventures, and Strategic Development Fund (SDF) invested in the round.

The funding marks a critical inflection point for Machina Labs as the company scales from breakthrough manufacturing technology to deploying software-defined production infrastructure capable of supporting mission-critical metal structures across defense, aerospace, and advanced mobility.

“The world’s most advanced designs are being held back by 20th-century factories,” said Edward Mehr, CEO and co-founder of Machina Labs. “This round allows us to scale manufacturing infrastructure that moves at the speed of software. We’re not just making parts, we’re reprogramming the factory itself to serve defense, aerospace, and automotive customers who can’t afford to wait.”

From Breakthrough Technology to Manufacturing Infrastructure

A significant portion of the capital will be used to launch Machina Labs’ first large-scale Intelligent Factory in the U.S., a 200,000-square-foot, production-ready facility that will house up to 50 RoboCraftsman™ cells and produce thousands of complex structural assemblies annually for defense and aerospace customers.

From missile structures to airframes, Machina Labs’ Intelligent Factory is designed to manufacture a wide range of complex metal structures, without significant retooling or reconfiguration. Powered by Machina Labs’ RoboCraftsman platform, the Intelligent Factory will enable customers to move from digital design to production inside the same facility, compressing timelines from months to days.

“We believe Machina Labs’ AI-driven manufacturing approach will play a key role in shaping the future of aerospace production,” said Chris Moran, Vice President and General Manager at Lockheed Martin Ventures. “The launch of their new factory marks a major step forward, demonstrating how intelligent, robotic production can bring greater speed, precision, and scalability to the industry.”

Manufacturing Speed as a Strategic Imperative

Machina Labs is actively supporting U.S. government and commercial programs where speed of production has become a strategic constraint. The company has secured contract awards from the U.S. Air Force Research Laboratory and the U.S. Air Force Rapid Sustainment Office, and is working with a leading defense prime on metal structures production for missiles and hypersonics.

By integrating forming, machining, welding, and assembly into a single intelligent factory, Machina Labs is laying the groundwork for a future in which manufacturing capacity can be deployed, scaled, and adapted as dynamically as software.

“Modern defense systems are often limited not by design, but by how fast they can be manufactured,” said Phil Scully, General Partner and Co-Founder at Balerion Space Ventures. “Machina Labs is building the manufacturing backbone required to close that gap and operate as a true Tier 1 partner.”

Manufacturing Innovation in Mobility

While defense remains a core focus, Machina Labs’ platform is inherently dual-use, supporting commercial innovation alongside national security needs. The company continues to work closely with Toyota to develop production-quality automotive panels that unlock design freedom and enable rapid customization at scale.

"The automotive industry has long been the proving ground for manufacturing innovation," said Ro Gupta, Managing Director at Woven Capital. "Machina Labs is pioneering intelligent forming technology that brings craft-level precision to industrial scale, enabling the flexible, responsive production that next-generation mobility demands. This is exactly the kind of innovation that will shape advanced manufacturing's future, and we're proud to support their journey."

About Machina Labs

Machina Labs is reinventing metal manufacturing with AI and robotics. The company builds and operates intelligent factories capable of rapidly forming and assembling aerospace- and defense-grade metal structures directly from digital design. Its flagship platform, RoboCraftsman™, combines advanced robotics, machine learning, and proprietary RoboForming™ technology to deliver software-defined manufacturing at production scale. Founded in 2019 and based in Los Angeles, Machina Labs serves customers across the U.S. Department of War, defense primes, and mobility leaders. The company is scaling its platform to become a Tier 1 manufacturing partner for the next generation of mission-critical systems. For more information, visit machinalabs.ai.

Mesh closes $75M Series C at $1B valuation to accelerate universal Crypto Payments Network adoption

Unicorn status validates momentum as the only unified global payments network for today's borderless, tokenized economy

Mesh announced the successful close of its $75M Series C. The round brings the company's total funding to more than $200M and values the company at $1B.

As crypto moves from experimentation to real-world adoption, scalable infrastructure has become mission-critical. Mesh was built to address that challenge, and its work centers on two core pillars:

- Solving fragmentation: Mesh created a single “network of networks” uniting wallets, exchanges, and blockchains so payments and conversions can work across platforms by default.

- Unlocking “any-to-any payments”: Mesh launched SmartFunding orchestration, allowing consumers to pay with the assets they already hold while merchants settle instantly in their preferred currency.

Mesh, a leading crypto payments network, announced that it has closed a $75 million Series C funding round, bringing its total amount raised to over $200 million and valuing the company at $1B. Dragonfly Capital led the round, with participation from Paradigm, Moderne Ventures, Coinbase Ventures, SBI Investment, and Liberty City Ventures.

Crypto is making the payment rails built for an analog world obsolete, and Mesh is leading this movement by connecting a fragmented global crypto market and bypassing the slow settlements and excessive fees long established by traditional finance. As the industry moves from experimentation to real-world adoption, capital is increasingly flowing toward infrastructure rather than speculation, with Mesh standing out as the only unified payment network for a borderless, tokenized economy.

The round also accelerates Mesh's expansion into regions like Latin America, Asia and Europe, fueling product development and strengthening a global network that already reaches more than 900 million users worldwide. Previously, the company announced its expansion into India, citing the country's young, tech-savvy population and $125B+ in annual remittances as reasons for the move. Mesh also previously announced support for Ripple USD and forged new partnerships with Paxos and Rain.

"Crypto is crowded by design, with new tokens and new protocols emerging every day," said Bam Azizi, Co-founder and CEO of Mesh. "That fragmentation creates real friction in the customer payment experience. We are focused on building the necessary infrastructure now to connect wallets, chains, and assets, allowing them to function as a unified network. This funding validates that the winners of the next decade won't be those who issue the most tokens, but those who build the network of networks that makes traditional card rails obsolete."

"Payments are entering a new era where value moves as software. Mesh is building the interoperability layer that makes crypto practical at scale: consumers can spend any asset, merchants can settle instantly in the stablecoin or fiat they want, and the complexity stays under the hood. That 'any-to-any' experience is exactly what mainstream adoption demands, and we're excited to lead this round as Mesh becomes the universal network for global, compliant crypto payments," said Rob Hadick, General Partner at Dragonfly.

Solving the "Stablecoin Paradox" Through Universal Interoperability

While the rapid growth of new stablecoins and blockchains signals a healthy industry, it's also reintroducing fragmentation that crypto was designed to solve. In 2025, stablecoins reached a historic $300B market cap and processed over $27T in annual transaction volume.

This rapid growth has created isolated pockets of liquidity, however, forcing users to navigate a maze of disparate platforms and complex network choices. Mesh serves as the neutral layer that unifies this fragmented landscape, ensuring the future of payments is built on infrastructure that makes all assets universally spendable.

As the only network that works with all crypto, Mesh remains asset-agnostic, providing the infrastructure that allows the entire industry to function as a single system. Through its proprietary SmartFunding technology, Mesh enables a true "any-to-any" advantage: consumers pay with any asset they hold – from Bitcoin to Solana – while merchants receive instant settlement in their preferred stablecoin (e.g., USDC or PYUSD) or local currency (e.g., dollars or euros).
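The "any-to-any" idea can be illustrated with a small quote calculation. This is only a sketch of the arithmetic, not Mesh's SmartFunding implementation: the `quote` function, the `USD_PRICES` table, and every price in it are hypothetical placeholders, and fees, slippage, and network costs are ignored.

```python
from decimal import Decimal

# Hypothetical reference prices in USD, for illustration only (not market data).
USD_PRICES = {
    "BTC": Decimal("60000"),
    "SOL": Decimal("150"),
    "USDC": Decimal("1"),
    "PYUSD": Decimal("1"),
}


def quote(pay_asset: str, settle_asset: str, settle_amount: Decimal,
          prices: dict = USD_PRICES) -> Decimal:
    """Return how much of pay_asset the consumer must spend so that the
    merchant receives settle_amount of settle_asset.

    Both legs are priced through a common USD reference, which is an
    assumption of this sketch.
    """
    usd_value = settle_amount * prices[settle_asset]
    return usd_value / prices[pay_asset]


# A consumer holding SOL pays a merchant who settles 300 USDC.
sol_needed = quote("SOL", "USDC", Decimal("300"))
print(f"Pay {sol_needed} SOL to settle 300 USDC")
```

The point of the sketch is that the payer's asset and the merchant's settlement currency are independent inputs; a production system would also have to source live prices and handle execution across venues.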

A portion of Mesh's $75M Series C round was settled using stablecoins to demonstrate that this infrastructure is ready for high-stakes, real-world use. This milestone serves as definitive proof that global institutions are now comfortably relying on blockchain-native settlement when enterprise-grade execution, auditability, and controls are firmly in place.

For more information about Mesh, visit https://meshpay.com/.

About Mesh

Founded in 2020, Mesh is building the first global crypto payments network, connecting hundreds of exchanges, wallets, and financial services platforms to enable seamless digital asset payments and conversions. By unifying these platforms into a single network, Mesh is pioneering an open, connected, and secure ecosystem for digital finance. For more information, visit https://www.meshpay.com/.

Contact: mesh@missionnorth.com

About Dragonfly Capital

Dragonfly is a $4B crypto-focused global investment firm. Since 2017, Dragonfly has been at the forefront of blockchain and crypto innovation with a long-term oriented, technical, and research-driven approach, having been early backers of some of the most influential protocols and companies in the industry.

Contact: annica@dragonfly.xyz

TrueFoundry introduces TrueFailover, a mission-critical platform that keeps business-critical AI workflows uninterrupted

ALSO READ

TrueFoundry launches TrueFailover to automatically reroute enterprise AI traffic during model outages - VentureBeat

AI outages are happening more frequently, and they hit production systems hard. TrueFailover is a new mission-critical resilience layer for AI applications that automatically routes around model outages, regional failures, and API degradation so AI systems stay online.

- Solution serves as a resilience engine for mission-critical workloads

- Helps enterprises architect for continuity, not just capability, ensuring business operations stay live even during major provider disruptions

TrueFoundry, an enterprise AI infrastructure platform, today announced TrueFailover, a new solution designed to keep AI-powered applications online even when major providers experience outages and degradation.

The announcement comes as more and more enterprises suffer major outages, leaving thousands of users unable to perform mission-critical tasks and scrambling for alternatives. These downtime instances often directly affect the business and its customers through lost revenue opportunities, stalled meetings, missed service-level agreements, and tickets piling up. This creates a ripple effect that can quickly have global implications.

“Most people experience these outages as an inconvenience, like not being able to scroll through their favorite social media app,” said Nikunj Bajaj, Co-Founder and CEO of TrueFoundry. “But for teams building AI systems, it’s a stark reminder that even the biggest, most reliable platforms fail, and that failure can have real business consequences if there is no backup plan. Resilience is not optional anymore — it’s architecture.”

AI now sits squarely in business-critical workflows:

- Pharmacies use GenAI to refill prescriptions to avoid delaying drug delivery.

- Sales teams rely on AI to generate proposals and outreach.

- Developers rely on AI coding assistants to ship faster.

- Customer support teams deploying new agents risk reputational damage if agents do not work the first time.

The catch: most AI applications rely on external models and APIs (LLMs, embedding services, vector databases, and voice and vision APIs) that can fail, rate-limit, or degrade in quality without warning. Recent incidents have shown partial LLM outages, embedding APIs slowing to a crawl, and latency spikes in voice-generation services.

“Too many teams have architected for capability, not continuity,” Bajaj added. “They picked the ‘best’ model, but never asked what happens when it’s unavailable at 3 p.m. on a Tuesday.”

Introducing TrueFailover: outage resilience for AI, by design

TrueFailover packages TrueFoundry’s multi-model and multi-region capabilities into a focused outage-resilience solution that sits on top of the company’s AI Gateway and globally distributed deployment layer.

When a primary model, region, or provider fails, TrueFailover ensures that AI workloads transition seamlessly to healthy alternatives — without requiring application teams to rewrite code or manually reroute traffic.

Key capabilities include:

- Multi-model failover

Define primary and fallback models across multiple providers (e.g., OpenAI, Anthropic, Gemini, Groq, Mistral, or self-hosted) so that if one model is unavailable, rate-limited, or degraded, traffic transparently shifts to another. As a result, customer-facing and internal AI apps keep responding even when a primary model breaks.

- Multi-region and multi-cloud resilience

Run AI endpoints across regions and clouds, with health-based routing that automatically diverts traffic away from unhealthy zones while maintaining low latency for global users. Regional outages become invisible to users, instead of global incidents.

- Degradation-aware routing

Continuously monitor latency, error rates, and quality signals so that routing decisions respond not only to hard outages, but also to slowdowns and partial failures. Avoid “slow but technically up” failures that quietly destroy user experience and SLAs.

- Health checks, monitoring, and tracing

Built-in health probes, observability, and request tracing provide a clear incident timeline: where failures originated, how traffic was rerouted, and which models carried the load. Now, Site Reliability Engineering and platform teams can diagnose issues in minutes, not hours, and prove how TrueFailover mitigated the impact.

- Caching and rate protection

Strategic caching shields providers from sudden traffic spikes and protects customers from rate-limit cascades during high-traffic events or upstream instability. This allows systems to ride out demand spikes and provider limits without sudden brownouts or throttling surprises.

With TrueFailover, end-users and internal teams don’t see the outage — they see a system that continues to respond. The incident becomes a routing decision, not a business crisis.
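As a rough illustration of the multi-model failover pattern described above, the sketch below tries a prioritized chain of targets and moves to the next one when a call fails. It is not TrueFoundry's API or implementation: `Target`, `route`, and `AllTargetsFailed` are hypothetical names, and degradation-aware routing, health probes, and caching are deliberately left out.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Target:
    """One model endpoint in a fallback chain (hypothetical structure)."""
    name: str
    call: Callable[[str], str]  # sends a prompt, returns text, raises on failure
    healthy: bool = True        # set to False once a call to this target fails


class AllTargetsFailed(RuntimeError):
    """Raised when no target in the chain could serve the request."""


def route(prompt: str, targets: Sequence[Target]) -> Tuple[str, str]:
    """Try healthy targets in priority order; return (target name, response)."""
    errors: List[str] = []
    for target in targets:
        if not target.healthy:
            continue  # skip targets already known to be failing
        try:
            return target.name, target.call(prompt)
        except Exception as exc:  # any provider error triggers failover
            target.healthy = False
            errors.append(f"{target.name}: {exc}")
    raise AllTargetsFailed("; ".join(errors) or "no healthy targets")


# Stand-in providers for the example: the primary is down, the fallback works.
def primary_model(prompt: str) -> str:
    raise TimeoutError("provider unavailable")


def fallback_model(prompt: str) -> str:
    return f"answer to: {prompt}"


chain = [Target("primary", primary_model), Target("fallback", fallback_model)]
served_by, reply = route("summarize this ticket", chain)
print(served_by, "->", reply)
```

The application calls `route` the same way whether or not the primary is up, which is the property the text describes: the outage shows up as a routing decision rather than as an error in application code. A real gateway would also need a way to restore a target to the chain once it recovers.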

From “Which model is best?” to “How do we ensure AI doesn’t break?”

Traditional AI conversations often focus on benchmark scores and model leaderboards. Forward-looking enterprises are starting with a different question: “How do we ensure AI doesn’t break?”

“TrueFoundry empowers us to deliver and scale AI capabilities seamlessly,” said Raghu Sethuraman, Vice President of Engineering at Automation Anywhere. “AI is now a fundamental requirement, and the control, availability, and resilience TrueFoundry provides enable us to confidently accelerate AI adoption and deployment across our organization.”

TrueFoundry brings hardened stability to the evolving AI stack by embedding TrueFailover at the AI Gateway Layer. This enables organizations to leverage health-based routing and graceful failover, ensuring AI applications remain as resilient as the world’s most robust distributed systems.

TrueFailover will be offered as an add-on resilience module on top of the TrueFoundry AI Gateway and platform. An early access program for design partners will open in the coming weeks, with broader availability to follow.

Enterprises interested in participating in the TrueFailover early access program can contact TrueFoundry via the company’s website.

About TrueFoundry

TrueFoundry is an Enterprise Platform as a Service that enables companies to build, observe, and govern Agentic AI applications securely, scalably, and reliably through its AI Gateway and Agentic Deployment platform. Leading Fortune 1000 companies trust TrueFoundry to accelerate innovation and deliver AI at scale, with over 10 billion requests per month processed via the TrueFoundry AI Gateway and more than 1,000 clusters managed by its Agentic Deployment platform. TrueFoundry’s vision is to become the central control plane for running Agentic AI at scale within enterprises, serving as the command center for enterprise AI. Headquartered in San Francisco, TrueFoundry operates across North America, Europe, and Asia-Pacific, supporting enterprise AI deployments for some of the world’s most innovative organizations. To learn more about TrueFoundry, visit truefoundry.com.

Contacts

Media Contact

VSC for TrueFoundry

truefoundry@vsc.co

Lightmatter introduces groundbreaking Guide light engine with integrated lasers to scale CPO for AI infrastructure

ALSO READ

Building out the Photonic Stack - Lightmatter

Lightmatter and GUC Partner to Produce Co-Packaged Optics (CPO) Solutions for AI Hyperscalers - Lightmatter

Lightmatter Collaborates with Synopsys to Integrate Advanced Interface IP with Its Passage Co-Packaged Optics Platform - Lightmatter

Lightmatter and Cadence Collaborate to Accelerate Optical Interconnect for AI Infrastructure - Lightmatter

A new class of integrated lasers to scale CPO for AI infrastructure

Lightmatter, the leader in photonic (super)computing, announced a foundational advancement in laser architecture: Very Large Scale Photonics (VLSP). Embodied in the Guide™ light engine, this breakthrough creates the industry’s most integrated laser platform supporting unprecedented bandwidths—moving laser manufacturing from manual assembly lines toward foundry production. VLSP technology leverages large-scale photonic integration to overcome power-scaling limitations, enabling the photonic interconnect roadmap for AI with an initial 8X increase in optical bandwidth density, unprecedented deployment scalability, and wavelength stability.

Just as Lightmatter’s Passage™ photonic interconnects have shattered shoreline bandwidth limitations with their unique 3D architecture, the company’s new Guide™ light engine represents a giant leap forward in laser technology. The largest AI clusters in hyperscale data centers now depend on connectivity that is fundamentally constrained by bandwidth density—limited not only by I/O at the chip edges but also by the fact that even the most advanced photonic interconnects are only as capable as the laser technology that powers them.

Overcoming The Power Wall

Current co-packaged optics (CPO) and near-package optics (NPO) solutions rely on discrete indium phosphide (InP) laser diodes integrated in External Laser Small Form Factor Pluggable (ELSFP) modules. These architectures face a power wall: connector end faces and epoxy-bonded assemblies are vulnerable to thermal damage, where contamination absorbs light and can cause fiber damage at power levels as low as hundreds of milliwatts. This limits the usefulness of scaling optical power in InP lasers—the traditional roadmap for laser technology. Doubling the bandwidth today requires doubling the number of ELSFPs, leading to corresponding increases in cost, power consumption, and front-panel space, ultimately decreasing system-level reliability. Further, discrete lasers struggle to achieve tight wavelength spacing and control in upcoming dense wavelength division multiplexing, where laser arrays must maintain precise wavelengths with minimal drift.

A New Scaling Paradigm

The Guide VLSP light engine establishes a new bandwidth-scaling paradigm by reducing the high component counts associated with discrete laser modules while delivering inherently superior yields and field reliability. By moving to an integrated architecture, Lightmatter has defined a laser roadmap that efficiently scales from 1 to 64 wavelengths and beyond while reducing assembly complexity. The result is a massive density improvement: the first-generation Guide validation platform enables 100 Tbps of switch bandwidth in a compact 1RU chassis, a feat that would otherwise require about 18 conventional ELSFP modules occupying 4RU of rack space.
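The density comparison can be checked with simple arithmetic. The sketch below uses only the figures quoted in the paragraph (100 Tbps, 1RU, about 18 ELSFP modules, 4RU); the variable and function names are illustrative, and the per-module figure is an average implied by those numbers rather than a published specification.

```python
# Figures quoted in the text above.
SWITCH_BANDWIDTH_TBPS = 100.0
GUIDE_RACK_UNITS = 1
ELSFP_MODULE_COUNT = 18   # "about 18" per the text
ELSFP_RACK_UNITS = 4


def tbps_per_rack_unit(total_tbps: float, rack_units: int) -> float:
    """Switch bandwidth supported per rack unit of laser hardware."""
    return total_tbps / rack_units


guide_density = tbps_per_rack_unit(SWITCH_BANDWIDTH_TBPS, GUIDE_RACK_UNITS)
elsfp_density = tbps_per_rack_unit(SWITCH_BANDWIDTH_TBPS, ELSFP_RACK_UNITS)

# How much less rack space Guide needs for the same switch bandwidth.
rack_space_ratio = guide_density / elsfp_density

# Average switch bandwidth carried per conventional ELSFP module.
tbps_per_elsfp = SWITCH_BANDWIDTH_TBPS / ELSFP_MODULE_COUNT

print(f"Guide: {guide_density:.0f} Tbps/RU, ELSFP: {elsfp_density:.0f} Tbps/RU")
print(f"Rack-space ratio: {rack_space_ratio:.0f}x")
print(f"Per ELSFP module: {tbps_per_elsfp:.2f} Tbps")
```

On these numbers the rack-space advantage works out to 4x, with each conventional module carrying roughly 5.6 Tbps of the 100 Tbps total.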

“Our customers are building infrastructure for MoE and world models at scales that demand semiconductor-grade integration everywhere—including the light source,” said Nick Harris, co-founder and CEO of Lightmatter. “Scalable lasers unlock scalable CPO. Guide delivers massive bandwidth density through integration.”

“The transition toward co-packaged optics (CPO) is becoming a key enabler for the next generation of AI-scale networks, driven by the need for higher bandwidth density and lower power per bit as electrical I/O limits tighten at very high data center rates,” said Jean-Christophe Eloy, Founder and President of Yole Group. “Lightmatter’s VLSP innovation represents a fundamental shift in how we power optical interconnects. Its level of photonic integration provides a scalable light source that can enable hyperscale CPO deployments over the next decade, addressing a laser market opportunity that alone rivals the scale of the optical engine segment.”1

Lightmatter VLSP Technology Validation

The Guide light engine, powering Passage M-Series and L-Series (“Bobcat”) rack-scale validation platforms, showcases unprecedented laser performance:

- High Bandwidth Density: Up to 51.2 Tbps per laser module for NPO and CPO applications

- High Optical Output Power: Minimum of 100 mW per fiber

- Wavelengths: Generates 16 wavelengths with multiplexing

- Closed-Loop Control: Enables bidirectional photonic links, in which two 400 GHz-spaced wavelength grids interleave at a precise 200 GHz offset with ±20 GHz accuracy, while delivering optical power uniformity of up to 0.1 dB across the channels in fiber
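The interleaving in the closed-loop control item can be made concrete with a short calculation. The sketch below is a reading of the stated numbers only (400 GHz spacing, 200 GHz offset, ±20 GHz accuracy): frequencies are expressed relative to the first channel rather than as absolute optical frequencies, the split of eight channels per direction is an assumption, and the function names are hypothetical.

```python
from typing import List, Tuple

GRID_SPACING_GHZ = 400.0   # spacing within each grid, from the text
GRID_OFFSET_GHZ = 200.0    # offset between the two grids, from the text
OFFSET_TOLERANCE_GHZ = 20.0  # stated accuracy of the offset


def interleaved_grids(channels_per_grid: int) -> Tuple[List[float], List[float]]:
    """Return two channel grids as frequency offsets (GHz) from channel 0.

    Grid A carries one link direction and grid B the other; B sits halfway
    between A's channels.
    """
    grid_a = [i * GRID_SPACING_GHZ for i in range(channels_per_grid)]
    grid_b = [f + GRID_OFFSET_GHZ for f in grid_a]
    return grid_a, grid_b


def offset_in_spec(measured_offset_ghz: float) -> bool:
    """Check a measured grid offset against the 200 GHz +/- 20 GHz window."""
    return abs(measured_offset_ghz - GRID_OFFSET_GHZ) <= OFFSET_TOLERANCE_GHZ


# Assumption for illustration: 8 channels per direction.
grid_a, grid_b = interleaved_grids(8)
combined = sorted(grid_a + grid_b)
gaps = [hi - lo for lo, hi in zip(combined, combined[1:])]

print("Grid A:", grid_a[:3], "...")
print("Grid B:", grid_b[:3], "...")
print("Adjacent-channel gaps:", set(gaps))
```

With the two grids interleaved, every channel's nearest neighbor belongs to the opposite direction and sits 200 GHz away, which is why the offset has to be held within a tight tolerance.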

Learn more: Lightmatter SC25 live demonstrations

Availability

The VLSP-based Guide validation platform is sampling now, powering the latest rack-scale Passage L-Series and M-Series test and validation platforms. The Guide laser module is designed to be interoperable with third-party NPO and CPO solutions. Evaluation Kits (EVKs) are available on a priority basis to select strategic partners.

About Lightmatter

Lightmatter is leading the revolution in AI data center infrastructure, enabling the next giant leaps in human progress. The company’s groundbreaking Passage™ platform—the world’s first 3D-stacked silicon photonics engine—and Guide®—the industry's first VLSP light engine—connect thousands to millions of XPUs. Designed to eliminate critical data bottlenecks, Lightmatter’s technology delivers unprecedented bandwidth density and energy efficiency for the most advanced AI and high-performance computing workloads, fundamentally redefining the architecture of next-generation AI infrastructure. Visit www.lightmatter.co to learn more.

Lightmatter, Passage, and Guide are trademarks of Lightmatter, Inc.

Any other trademarks or registered trademarks mentioned in this release are the property of their respective owners.

1 Source: Co-Packaged Optics for Data Centers 2025 report, Yole Group

Contacts

Media Contacts:

Lightmatter

John O’Brien

press@lightmatter.co