Paladin Cyber Security and Insurance Platform Unveils Rebranding to Upfort in Light of Rapid Growth and Market Demand

The transition from Paladin Cyber to Upfort reflects the breadth of the expanded platform as the company gains critical mass as the leader in its category. Former Farmers Insurance and Swiss Re executives also join Upfort’s leadership team.

Paladin Cyber, a leading full-stack cyber security and insurance platform, today announced that it has changed its corporate name to Upfort, reflecting its expanded capabilities amid unprecedented company growth and market demand. Upfort also announced two senior hires, Jeremy Wieber and Matthew Leanza, to advance its strategic initiatives and spearhead the company’s partnership-centric approach toward helping companies develop cyber resilience.

Upfort will continue to offer the same services as Paladin Cyber and also broaden its suite of offerings, moving from solely focusing on cyber attack prevention to taking a more encompassing approach to building cyber resilience. When the company originally launched in 2017, it prioritized the development and delivery of a SaaS cybersecurity solution to small-to-medium enterprises. Since then, it has also created a turnkey platform to run cyber insurance programs powered by automated underwriting. The company will combine these efforts under the Upfort platform.

Upfort will continue to offer the same services as Paladin Cyber and also broaden its suite of offerings, moving from solely focusing on cyber attack prevention to taking a more encompassing approach to building cyber resilience. When the company originally launched in 2017, it prioritized the development and delivery of a SaaS cybersecurity solution to small-to-medium enterprises. Since then, it has also created a turnkey platform to run cyber insurance programs powered by automated underwriting. The company will combine these efforts under the Upfort platform.

“We are thrilled to introduce Upfort, building upon our successes and offerings to bring AI-powered solutions to insurers and brokers that can transform the way cyber insurance is underwritten and sold,” said Xing Xin, CEO and Co-Founder. “Upfort, formerly known as Paladin Cyber, represents our commitment to accelerating the world’s journey to cyber resilience, and we are excited to welcome two industry veterans to drive our partnership-led approach.”

Now, Upfort is working alongside insurers and brokers to streamline the cyber insurance underwriting process. In addition to conducting automated risk assessments, Upfort provides active guidance and security solutions to ensure applicants obtain comprehensive cyber insurance policies without hassle.

Already, Upfort has delivered meaningful results for businesses seeking cyber risk protection. In a recent 18-month study of real claims, Upfort found that organizations that fully implemented Upfort Shield, its cybersecurity solution, filed claims at one-fifth the rate of those that did not. Those organizations also did not file a single ransomware or wire transfer fraud claim, two of the leading claim types.

Upfort’s expanded capabilities empower all stakeholders across the cyber risk ecosystem, including brokers, insurers, MSPs, and other risk managers, to protect businesses against cyber threats and develop cyber resilience. By bridging the gap between insurance underwriting and cybersecurity, Upfort enables brokers to ensure clients meet underwriting standards through automation, address risk mitigation with turnkey security, and increase renewal retention with broader services. Upfort’s partners can leverage its proprietary underwriting technology to efficiently deploy risk management solutions with embedded insurance to large populations of clients, vendors, franchisees, and more.

The new executives – Jeremy Wieber and Matthew Leanza – bring decades of expertise to the growing Upfort team. Wieber joins Upfort as Head of Strategic Partnerships, leveraging more than 13 years of experience within the insurance industry, including most recently with Farmers Insurance, where he was the Executive Director of Strategic Distribution and Partnerships.

Leanza joins Upfort as Head of Business Development, drawing on significant expertise in insurance, business development, building new teams, and providing stellar experiences for partners and customers. Most recently, Leanza was the Vice President of Distribution at Swiss Re Corporate Solutions.

“We are proud to welcome Jeremy and Matthew to the Upfort team as we step into a future of continued growth and Upfort helps many more companies realize cyber resilience,” said Josh Riley, Managing Director and Co-Founder.

To learn more about Upfort, visit upfort.com.

About Upfort

Upfort is a leading platform for cyber security and insurance that provides holistic protection from evolving cyber threats. Founded in 2017 to expand global access to cyber resilience, Upfort makes cyber risk easy to manage and simple to insure. Upfort delivers turnkey security proven to proactively mitigate risk and comprehensive cyber insurance from leading insurers. With proprietary data and intelligent automation, Upfort’s AI anticipates risk and streamlines mitigation for hassle-free underwriting. Insurers, brokers, and risk advisors partner with Upfort to offer clients resilience and peace of mind against cyber threats. To learn more about Upfort, visit upfort.com.

Contacts

Media:

Christina Levin

Caliber Corporate Advisers

upfort@calibercorporate.com

Machina Labs closes $32 Million Series B to revolutionize AI-driven robotics manufacturing

Its cutting-edge, disruptive innovation advances AI and robotics integration in the production of advanced metal products

Machina Labs combines AI and robotics to rapidly manufacture advanced composite and metal products. Today, the company announced it has secured $32 Million in Series B investment to revolutionize AI-driven manufacturing.

The round was co-led by new investor NVentures, NVIDIA’s venture capital arm, and existing investor Innovation Endeavors, with contributions from existing and other new investors. This latest funding brings the total raised by Machina Labs to $45 million.

The investment will be used to meet accelerating customer demand, to further intensify research initiatives, and to continue delivering innovative solutions that exceed customer expectations.

“With their deep heritage in artificial intelligence and high-performance computing, we are looking forward to NVIDIA’s support as we further develop our AI and simulation capabilities,” said Edward Mehr, CEO and co-founder of Machina Labs. “We are also thrilled to see our current partner Innovation Endeavors continue to support our vision. This Series B funding underscores the transformative potential of merging robotics and artificial intelligence. With this support, we are poised to develop the next generation of manufacturing floors: ones that can easily remake production with no hardware or tooling changes, but only requiring software modification.”

With Machina Labs, factories no longer need to be tied to specific products; they can instead be configured via software to power on-demand manufacturing and unlock innovation. Machina Labs combines the latest advances in AI and robotics to deliver finished metal products in days, not months or years, giving customers unprecedented speed to market and a competitive advantage.

“Over the past two years, Machina Labs has demonstrated the impact and scalability of combining robotics and AI in manufacturing,” said Innovation Endeavors Partner, Sam Smith-Eppsteiner. “We are thrilled to double down on our investment in Machina on the back of demonstrated execution, technology gains, and commercial traction. We expect Machina to play an important role in a number of key industrial trends of the next decade: domestic re-industrialization, defense innovation, electrification, and commercial space.”

“AI is rapidly accelerating industries across the global economy, including manufacturing,” said Mohamed “Sid” Siddeek, Corporate Vice President and Head of NVentures. “Machina Labs’s work to apply advanced computing and robotics to sheet metal formation enables companies to operate manufacturing facilities with substantially improved efficiency and broadened capabilities.”

Machina Labs uses robots the way a blacksmith uses a hammer to creatively manufacture different designs and materials, introducing unprecedented flexibility and agility to the manufacturing industry. With Machina, great ideas can quickly and affordably become reality, and businesses can benefit from rapid iteration to bring more innovative products to market faster.

Robotic sheet forming is the first process enabled by Machina’s patented manufacturing platform. Using material- and geometry-agnostic technology, the platform outperforms traditional sheet-forming methods that rely on custom molds or dies.

About Machina Labs

Founded in 2019 by aerospace and automotive industry veterans, Machina Labs is an advanced manufacturing company based in Los Angeles, California. Enabled by advancements in artificial intelligence and robotics, Machina Labs is developing Software-Defined Factories of the Future. The mission of the company is to develop modular manufacturing solutions that can be reconfigured to manufacture new products simply by changing the software. For more information, please visit https://www.machinalabs.ai/

Contacts

Tim Smith

Element Public Relations

415-350-3019

tsmith@elementpr.com

Stoke Space raises $100M Series B to send the world’s first 100% reusable rocket into orbit by 2025

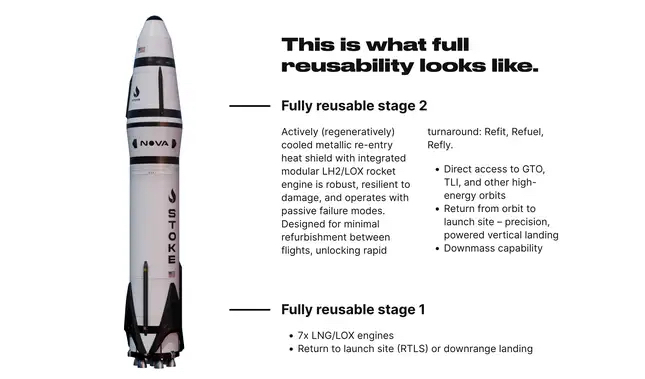

Washington-based rocket startup Stoke Space raised $100 million in new funds, the company announced Thursday, as it aims to develop a fully reusable rocket called “Nova.”

Stoke’s latest investment round was led by Industrious Ventures, with the firm’s Steve Angel, chairman of chemicals giant Linde, joining the Stoke board of directors. The space company’s fundraise was also joined by investors including the University of Michigan, Sparta Group, Long Journey, Bill Gates’ Breakthrough Energy, Y Combinator, Point72 Ventures, NFX, MaC Ventures, Toyota Ventures and In-Q-Tel.

Founded in 2019, Stoke had previously raised $75 million. A company spokesperson declined to comment on Stoke’s post-money valuation.

“The priority is to be able to keep the pedal to the metal and continue to develop in order to get to market as soon as possible and really fortify what is still a very fragile commercial space economy,” Stoke co-founder and CEO Andy Lapsa told CNBC.

Stoke said it would use the new capital to fund the development of its first rocket, which the company has finally christened, giving it the name “Nova.” That includes the development of the first-stage engine and structure, an orbital version of the second stage and building out launch infrastructure at Cape Canaveral in Florida.

The company recently completed a critical “hop” test of its reusable upper stage, successfully flying the development vehicle to an altitude of around 30 feet and vertically landing it about 15 feet away. While those numbers might seem unimpressive on the face of it, the hop proved out the stage’s novel oxygen-hydrogen rocket engine design. Unlike other nozzled rocket engines, the one on Stoke’s second stage is a distributed system, with thrusters that ring the circumference of the second stage.

The flight essentially concluded the development cycle of the second stage, meaning that the architecture is now complete and the company can move on to the rest of the vehicle’s structure. As Stoke co-founder and CEO Andy Lapsa told TechCrunch last month, the company had to finalize the architecture of the second stage before building out the rest of the vehicle.

“The first step on our journey was figuring out what a fully reusable upper stage and space vehicle looks like,” he said. “We really believe that it’s hard to build the rest of the vehicle until you know an answer. So that’s why a lot of our focus so far has been on the reusable second stage.”

The company also announced that multinational chemical company Linde plc’s chairman, Steve Angel, will join Stoke’s board of directors. Angel is the former CEO of Linde and also sits on the board of GE.

AlphaSense, the leading AI market intelligence platform, hits $2.5 billion valuation with $150 million Series E led by BOND

BOND leads AlphaSense's latest fundraise, cementing AlphaSense's leadership in AI-driven market intelligence and fueling its investment in GenAI capabilities for enterprise customers

AlphaSense, the leading market intelligence and search platform, today announced its $150 million Series E financing round, led by BOND, and joined by existing investors including Alphabet's CapitalG, Viking Global Investors, and Goldman Sachs. The financing brings AlphaSense's valuation to $2.5 billion.

"At BOND, we look for iconic technology companies that are shaping the future," said Jay Simons, General Partner at BOND. "With the ability to deliver the right insights and data to help businesses confidently make the everyday, strategic decisions that ultimately define their future, AlphaSense immediately struck us as a category creator emerging into one of those iconic companies that significantly advances how the business world works."

The $150 million raise comes just months after a $100 million Series D investment led by Alphabet's CapitalG. The new capital will be used to expand AlphaSense's enterprise solution, deploying its market-leading AI search capabilities, purpose-built market intelligence and financial LLMs, and GenAI capabilities—all within customers' private clouds. This expanded product offering empowers professionals to monitor and extract critical insights from internal and external content through secure, accurate, and best-in-class AI-powered search, summarization, and chat capabilities.

BAM Elevate, the dedicated private investment team at Balyasny Asset Management, L.P. (BAM), has been a client of AlphaSense since 2015, and is a new investor in this round. Norman Chen, Partner at BAM Elevate, commented, "Today, we have over 150 investment professionals leveraging the AlphaSense platform to get insights and data points more rapidly and reliably. From our perspective, AlphaSense's AI and search technology has been incredibly powerful at surfacing the right information to help enhance our investment process at BAM - which is also why we invested in this latest round. We wholeheartedly believe in AlphaSense's growth and distinctive position in the industry and want to be an even greater part of its journey."

BOND's investment reflects AlphaSense's strong revenue growth and the rapid adoption of its innovative AI and search capabilities by more than 4,000 enterprise customers, including the majority of the S&P 500, the world's largest banks, investment firms, and consultancies, and leading companies spanning every sector of the economy. For over a decade, AlphaSense has been transforming the research process for business and financial professionals with the latest innovations in AI and NLP technology, amassing data to continuously train its language models, while also building its vast collection of top-tier, trustworthy business content.

The fundraise will also be used to grow its extensive collection of searchable business content and make strategic acquisitions that expand its platform capabilities and increase the value it delivers to customers. Since its April 2023 announcement of a $100M Series D addition led by CapitalG, AlphaSense has won a number of industry awards, including being named a Market and Competitive Intelligence leader by Forrester, a top 50 AI company by Forbes, and a Best Workplace in 2023 by Inc.

"I couldn't be more excited about BOND's investment in AlphaSense," said Jack Kokko, CEO and Founder of AlphaSense. "The additional capital allows us to invest strategically, so we can continue to lead the generative AI revolution in our market, and deliver on our mission of helping businesses find the right data and insights to support more confident and agile decision-making. We are building the future of market intelligence, and we are proud to continue revolutionizing search for enterprise customers."

About AlphaSense

AlphaSense is a market intelligence and search platform used by the world's leading companies and financial institutions. Since 2011, our AI-based technology has helped professionals make smarter business decisions by delivering insights from an extensive universe of public and private content—including equity research, company filings, event transcripts, expert calls, news, trade journals, and clients' own research content. Headquartered in New York City, AlphaSense employs over 1,000 people across offices in the U.S., U.K., Finland, Germany, India, and Singapore. For more information, please visit www.alpha-sense.com.

About BOND

BOND is a global technology investment firm that supports visionary founders throughout their entire life cycle of innovation and growth. For more information, please visit www.bondcap.com.

Media Contact:

Remi Duhé

Email: media@alpha-sense.com

Stoke Space edges closer to building the world's first fully reusable rocket

A small hop by a prototype upper stage was a big step in Stoke Space’s efforts to develop a fully reusable launch vehicle.

Four-year-old Seattle-area startup Stoke Space executed a successful up-and-down test of its “Hopper” developmental rocket vehicle today, marking a major milestone in its quest to create a fully reusable launch system.

Stoke Space said it flew its Hopper2 vehicle at a test site at Moses Lake, Washington, Sept. 17. The vehicle, using an engine powered by liquid hydrogen and liquid oxygen, rose to an altitude of about nine meters before landing safely to conclude the 15-second flight.

The flight concluded the Hopper effort to develop technologies for a future reusable upper stage. “We successfully completed all of the planned objectives,” the company said in a statement after the test. “We’ve also proven that our novel approach to robust and rapidly reusable space vehicles is technically sound, and we’ve obtained an incredible amount of data that will enable us to confidently evolve the vehicle design from a technology demonstrator to a reliable reusable space vehicle.”

Eventually, Stoke plans to offer a fully reusable launch system, including a second stage that can be brought back to Earth without having to rely on exotic shielding.

The concept behind Stoke Space’s launch system has been compared to the much larger two-stage Starship system that’s being developed by SpaceX for trips beyond Earth orbit. You can extend that comparison to characterize today’s Hopper flight as a parallel to SpaceX’s Grasshopper test flights in 2012 and 2013, or the Starhopper tests in 2019.

Stoke Space co-founder and CEO Andy Lapsa said he was “incredibly proud” of his team.

“The team is unbelievable, and you know, we’ve developed everything. Two and a half years ago, this spot in Moses Lake was a blank desert. Today we’ve launched a brand-new hydrogen-oxygen engine — and it’s a very unique engine — on a vehicle that took off and landed vertically,” he said. “I think everybody’s on cloud nine.”

AnyTech365, a worldwide leader in AI-powered IT security, to go public through merger with Zalatoris Acquisition Corp. (NYSE: TCOA)

AnyTech365, a leader in AI-powered IT security, and Zalatoris Acquisition Corp. (NYSE: TCOA.U, TCOA, TCOA.WS), a special purpose acquisition company (SPAC) incorporated in Delaware for the purpose of combining with one or more businesses or entities, announced today that they have entered into a business combination agreement, which is expected to be completed in the first quarter of 2024, subject to regulatory approvals and other customary closing conditions.

AnyTech365: An Industry Leader in Efficient, Customer-First, AI-Powered IT Security

Founded in 2014, AnyTech365 is a leading provider of IT security software products and related services. At the core of its extensive portfolio stands the revolutionary AnyTech365 IntelliGuard, comprehensive AI-powered threat prevention and performance optimization software. AnyTech365's subscription-based solutions, delivered as Software as a Service ("SaaS"), extend their protective reach to all internet-connected devices, including PCs, laptops, tablets, smartphones, smart TVs, and a myriad of other Internet of Things ("IoT") devices.

Founded in 2014, AnyTech365 is a leading provider of IT security software products and related services. At the core of its extensive portfolio stands the revolutionary AnyTech365 IntelliGuard, an AI-powered comprehensive threat prevention and performance enhancement optimization software. AnyTech365's subscription-based solutions, delivered as Software as a Service ("SaaS"), extend their protective reach to all internet-connected devices, including PCs, laptops, tablets, smartphones, smart TVs, and a myriad of other Internet of Things ("IoT") devices.

In an era where cybersecurity takes center stage and the intricacies of IT devices and software pose growing challenges for consumers and small businesses, AnyTech365 is committed to safeguarding its customers' digital world and streamlining the user experience. AnyTech365 provides dependable and secure solutions and services while ensuring round-the-clock, 365-day access to certified experts proficient in Android, iOS, and Windows, and fluent in over twenty (20) languages.

AnyTech365 has received widespread acclaim, earning multiple accolades on local, national, and international stages. In 2019, AnyTech365 was ranked as the 27th fastest-growing company in Europe across all sectors, according to the Financial Times FT1000.

AnyTech365 intends to use the proceeds from the Transaction to accelerate its growth by expanding its strategic partnership with MediaMarkt, one of Europe's largest electronics retailers, expanding its direct and online marketing activities, further implementing AI, advancing its software development, and pursuing strategic acquisitions.

Embracing AI to Drive Growth: AnyTech365 tackles security, performance, and optimization challenges with a blend of its pioneering AI-driven IT security and monitoring software. One of the key benefits of AnyTech365’s AI-driven approach is its ability to automate various processes, which leads to quicker response times and improved customer satisfaction. Through AI algorithms and machine learning technology, it can analyze vast amounts of data in real time, enabling proactive detection and resolution of potential IT security issues.

Embracing AI to Drive Growth: AnyTech365 tackles security, performance, and optimization challenges with a blend of its pioneering AI-driven IT security and monitoring software. One of the key benefits of AnyTech365’s AI-driven approach is its ability to automate various processes, which leads to quicker response times and improved customer satisfaction. Through AI algorithms and machine learning technology, it can analyze vast amounts of data in real-time, enabling proactive detection and resolution of any potential IT security issues.

Additionally, AnyTech365’s AI capabilities facilitate intelligent problem-solving and decision-making. The algorithms continuously learn and adapt based on user behavior and patterns, allowing for customized solutions tailored to the specific needs of each customer. This level of personalization enhances the overall user experience and ensures that clients receive the most effective and efficient support.

Capitalizing on Favorable Market Tailwinds: The growing complexity of IoT technologies, alongside increased cybersecurity risk, has made it an opportune time for AnyTech365 to expand its legacy SaaS business. With the devices with which we interact every day becoming increasingly complex and connected and digital operations migrating to the cloud, small businesses and consumers are increasingly looking for high-quality, smart, simple and proactive IT security and support solutions. Furthermore, by utilizing its rapidly evolving AI technology, AnyTech365 is uniquely positioned to capitalize on the rising demand stemming from these trends. With a strong existing business-to-consumer presence and market resonance, AnyTech365 also has a tremendous opportunity to extend business-to-business applications serving small and medium enterprises across expanded end markets and geographies.

Expanding Strategic Partnerships: AnyTech365 is poised to scale its reach throughout Europe by expanding its strategic partnerships, such as with MediaMarkt, the leading Europe-based consumer electronics retailer with over one thousand (1,000) stores and an interactive ecommerce platform. Under its agreement with MediaMarkt, AnyTech365 products and services will be offered as a purchase option in the MediaMarkt online checkout following the ecommerce sale of an IoT product or device, and will be featured in hardware insurance products such as Extended Warranty and IT Helpdesk, sold in MediaMarkt’s physical stores and on its ecommerce platform. AnyTech365 is also pre-installing AnyTech365 IntelliGuard software on MediaMarkt laptops prior to sale. The agreement further provides for AnyTech365 to station technical personnel at each of MediaMarkt’s more than one hundred (100) stores in Spain, creating a “Shop in Shop” on-site technical expert experience. This unique agreement gives AnyTech365 the opportunity to offer its SaaS IT security services directly to millions of MediaMarkt customers. Beyond Spain, the partnership is expected to roll out across additional EU territories where MediaMarkt operates.

Pursuing Complementary Acquisitions: Strong demand for IT security SaaS and a fragmented market present a considerable opportunity for AnyTech365 to rapidly expand its service offerings and capabilities across end markets and geographies. With an industry-leading compliance platform and highly scalable AI-powered systems, AnyTech365 is positioned to achieve greater reach through consolidation in this nascent and fast-growing environment. With a strong focus on implementing AI in all aspects of its business, AnyTech365 also expects to increase revenues while optimizing costs and improving efficiency and service levels.

Management Comments

Janus R Nielsen, Founder of AnyTech365.

“Given the bright outlook for our company and our current place in the cybersecurity industry, we are extremely thrilled to be merging with Zalatoris. AnyTech365’s unique position in the fast-growing IT sector is giving us many opportunities for growth and expansion. We already have several exciting partnerships to launch in the near future and believe we can successfully expand our software development as well as our direct online sales and marketing activities. Moreover, we have identified a range of potential acquisition targets worth pursuing.

We have entered a new era, the era of Artificial Intelligence, and our industry is at the forefront of AI adoption. Over the last eighteen (18) months we have been successfully implementing various AI tools and technologies. With the recent launch of AnyTech365 IntelliGuard, our proprietary platform for all AnyTech365’s security software products, services and plans, we are indeed excited for what the future holds.

With the forthcoming IPO and the injection of substantial growth capital that will supercharge our company over the next three to five years, we are confident we will reach our strategic goals. With the belief and vision both the Zalatoris and J. Streicher teams have shown us, we are convinced this merger will unlock our full potential. Zalatoris is indeed a perfect fit for us,” Janus R Nielsen concludes.

Paul Davis, CEO of Zalatoris.

“We are very excited about our announcement and the partnership with AnyTech365, a genuine leader in IT Security and support. We have been working at length and in-depth with the AnyTech365 executive team to understand the business, explore new opportunities and help build a plan for growth.

AnyTech365 is at the forefront of utilizing artificial intelligence to enhance its IT security and support services. By incorporating AI into its operations, AnyTech365 is able to provide its customers with advanced solutions and a higher level of efficiency. By harnessing the potential of AI, AnyTech365 remains ahead of the curve in the IT security and support industry, providing customers with cutting-edge solutions and an exceptional level of service, proven by its ratings and reviews on Google and TrustPilot.

AnyTech365’s collaboration with MediaMarkt also offers us the unique opportunity to expand into a huge new market potentially harnessing millions of customers in Spain and across Europe. AnyTech365’s early adoption of AI-driven services allows for an unprecedented amount of growth and the ability to scale without the need for a large increase in costs or operations.

AnyTech365 has genuinely strong foundations and offers a great service in a growing sector. We believe that the opportunity to expand and scale this business with strategic investment will see this company reach the huge potential it has.”

Transaction Overview

The Transaction values AnyTech365 at a $220 million enterprise value. The Transaction, which has been unanimously approved by the Boards of Directors of AnyTech365 and the Company, is subject to approval by the Company’s stockholders and other customary closing conditions, including the receipt of certain regulatory approvals.

Additional information about the Transaction, including a copy of the Business Combination Agreement, is available in the Company’s Current Report on Form 8-K filed with the U.S. Securities and Exchange Commission (the “SEC”) on September 8, 2023 and at www.sec.gov.

Legal Advisors

Cuatrecasas Goncalves Pereira, S.L.P. is serving as legal counsel to AnyTech365 in the Transaction. Nelson Mullins Riley & Scarborough LLP is serving as legal counsel to the Company in the Transaction.

About AnyTech365

Founded in 2014 and headquartered in Marbella, Spain, Anteco Systems, S.L. (“AnyTech365”) is a leading European AI-powered IT security company helping end users and small businesses have a worry-free experience with all things tech. With approximately 280 employees and offices in Marbella and Torremolinos (Spain), Casablanca (Morocco), and San Francisco (California, US), AnyTech365 offers an array of security, performance, threat prevention and optimization software and hardware.

Its flagship product is the unique and groundbreaking AI-powered AnyTech365 IntelliGuard, which is the cornerstone of all its products, services, and plans. The company offers qualified technicians who are available 24/7, 365 days a year, providing fast technical support for practically any security, performance, or optimization issues that users may experience with their PC, laptop, smartphone, wearable technology, smart home devices or any internet-connected device. For more information, visit www.anytech365.com.

About Zalatoris Acquisition Corp.

Zalatoris Acquisition Corp. (the “Company”) is a blank check company, which was formed to acquire one or more businesses and assets, via a merger, capital stock exchange, asset acquisition, stock purchase, or reorganization. The Company was formed to effect a business combination with middle market “enabling technology” businesses or assets with a focus on eCommerce, FinTech, Big Data & Analytics and Robotic Process Automation.

About J. Streicher (Sponsor)

J. Streicher Holdings, LLC, through its subsidiaries (“J. Streicher”), is a private and diverse US financial organization founded on tradition, personal relationships, innovation, and steadfast principles. J. Streicher & Co. LLC, its broker-dealer, holds the distinction of being one of the oldest firms on the New York Stock Exchange (“NYSE”), with roots dating back to 1910. Throughout J. Streicher’s history, it has consistently provided exceptional service to its family of listed companies, even in challenging market conditions.

While J. Streicher’s broker-dealer primarily focuses on NYSE activities, its international investment team specializes in identifying, investing in, and nurturing potential target companies, guiding them through the complex process of becoming publicly traded. The ultimate goal is to position these companies for a successful listing. J. Streicher’s core strength lies in its ability to recognize strategic private target companies and help them list on prestigious exchanges such as the NYSE or NASDAQ.

Contacts

Investor Relations & Media Contacts:

Email: pr@zalatorisac.com

Number: +1 (917) 675-3106

AnyTech365:

Monarch Tractor named one of Forbes' Next Billion-Dollar startups

Congratulations to the incredible team at Monarch Tractor for making the Forbes Next Billion-Dollar Startups list. We are thrilled and honored to be part of the journey of innovating in farming and agtech with their AI-powered autonomous EV tractors. Empowering farmers and saving our planet: an incredible mission.

ALSO READ:

Monarch Tractor Identified as Ag Unicorn by Forbes - Monarch Tractor blog

Monarch co-founder and CEO Praveen Penmetsa said it best: “We need to change so that farmers don’t feel like there’s no future in farming.”

Each year Forbes looks at the field of the most competitive startups and selects 25 that it believes are the next big thing. With a 60% success rate, it has an impressive track record of identifying high achievers. Recognizing Monarch Tractor’s efforts to help solve some of agriculture’s most difficult problems, Forbes named Monarch to its 2023 Forbes’ Next Billion-Dollar Startups list.

“Diesel tractors are a major source of pollution in agriculture, and farmers have long struggled to hire enough workers. Monarch’s machines promise to solve both problems.” — Amy Feldman, Forbes’ Next Billion Dollar Startups List Editor

Don’t miss this short documentary on the heart and soul of Monarch Tractor, covering everything from equity funding, current valuations, and revenue projections to what Monarch is accomplishing for farmers and the environment with innovations that are helping the world’s farmers toward a happier, healthier, cleaner, safer, and more profitable lifestyle.

Monarch CEO Praveen Penmetsa expects sales to triple or more this year as it gets its autonomous electric tractors in the hands of farmers, earning the Livermore, California-based company a coveted spot on our annual list of the Next Billion-Dollar Startups. But launching an agricultural equipment company is tough. It is capital-intensive, and cash-strapped farmers tend to be a conservative lot resistant to change. But Livermore-based Monarch, which has raised $116 million in equity from investors and reached a valuation of $271 million at its most recent equity funding in November 2021, seems to have hit a tipping point. Last year, it booked $22 million in revenue, up from $5 million in 2021.

This year Penmetsa expects revenue to increase three- to fivefold. That would bring it above $66 million, and possibly over $100 million, as the number of its tractors in the field goes from more than 100 to 1,000. As it expands, Penmetsa expects that more of its revenue will come from software subscriptions (up to $8,376 per tractor per year) that give farmers real-time alerts about sick plants and safety risks, plus gathering and crunching a ton of data to improve crop yields.

Autonomy acquires EV Mobility and enters the car-sharing economy

Autonomy™, the nation’s largest electric vehicle subscription company, and EV Mobility, LLC, the leading all-electric vehicle car-sharing platform, announce that they have entered into an agreement under which, once certain conditions are met, Autonomy will acquire the technology, assets, and customer accounts of EV Mobility. The acquisition will expand flexible (hourly, daily, weekly, monthly, and yearly) EV access to a broader market by making an electric vehicle available to anyone with a valid driver's license, credit card, and smartphone.

Autonomy has been providing a growing fleet of vehicles to EV Mobility over the last 12 months. “We like and understand the business, and this acquisition will allow us to scale quickly while increasing margins - it’s really that simple,” said Scott Painter, Founder and CEO of Autonomy.

EV Mobility runs a profitable business-to-business (B2B) car-sharing operation that partners with high-end property owners and luxury hotels to offer flexible (hourly/daily) EV access as an amenity for their customers. The hotel or property owner covers the monthly cost of the vehicle, insurance, and charging infrastructure. Customers pay hourly usage fees directly in the EV Mobility app, and the revenue is split between the property owner and EV Mobility. The result is a potential 2-5x revenue increase per vehicle with little to no downside risk.

EV Mobility's core technological innovations, including keyless remote activation of accounts and hourly billing, align closely with Autonomy's product roadmap. “EV Mobility represents an immediate benefit to Autonomy in reducing the time, cost, and risk of entering the car-share space,” said Martin Prescher, CTO of Autonomy.

EV Mobility is currently under contract with some of the leading hotel operators and high-end property owners in the US, such as Marriott International, Evolution Hospitality, Kor Group, Westgate, Brookfield, Olympus Property, Align Residential, Presidio, Zlife, AMC, Evans Hotels and The Proper Hotels. These partnerships represent thousands of physical locations that can manage multiple (2-5) vehicles each. This pipeline represents a strong “order book” that provides predictable demand for thousands of cars over the coming quarters. “The potential of de-risking our growth by having an installed base of stable property owners as partners holds the promise that we can properly allocate resources to meet the existing demand and scale efficiently,” said Redic Thomas, CFO of Autonomy.

“We are excited to join forces with Scott, Georg, and the experienced team at Autonomy. We believe that they have unlocked flexible, simple, and affordable access to an EV with a powerful brand and extraordinary technology,” said Ramy El-Batrawi, Founder of EV Mobility.

The financial details of the cash-and-stock agreement were not disclosed. The transaction is expected to close in Q4 2023 and is subject to all regulatory and compliance conditions being met.

About Autonomy

Autonomy is a mission-driven company that uses technology to accelerate the adoption of electric vehicles by making them more accessible and affordable. The company was founded by Scott Painter and Georg Bauer, disruptors in the auto retail, finance, and insurance industries who pioneered the Car-as-a-Service (CaaS) category with the first-ever used-vehicle subscription offering, Fair. Building upon that experience, Autonomy is up-leveling its commitment to carbon neutrality and financial inclusion. Its customers have driven over 11 million miles, preventing more than 9.7 million pounds of CO2 from being emitted into the earth’s atmosphere. Easier to qualify for than a lease, its low-commitment, 100% digital solution allows people to pay monthly on their credit card and aims to get more people driving EVs who otherwise might not be eligible for or interested in traditional lease or loan products. And unlike leases or loans, everyone who qualifies is charged the same rate regardless of FICO score. Autonomy believes that the future of mobility is electric. It exists to enable that transition more rapidly through innovations in technology, finance, and insurance. Autonomy relies on partnerships with AutoNation and Tesla to bring easier and more affordable ways for people to access electric vehicles. Autonomy is based in Santa Monica, California.

Follow Autonomy on LinkedIn, Twitter, Instagram, Facebook, YouTube, and TikTok.

About EV Mobility

EV Mobility is the leading all-electric vehicle car-sharing platform, providing electric vehicles on demand through an easy-to-use mobile app. EV Mobility offers EVs as an amenity for luxury hotels, multi-family apartments, and commercial buildings. Through the app, residents or guests access electric vehicles located in their building or hotel, while properties benefit from being able to offer residents or guests a low-cost, zero-emission electric vehicle on demand. The all-electric car-sharing service began in Los Angeles in 2021 and is presently expanding to other cities.

Contacts

Autonomy PR Contacts:

Asher Gold

asher@gold-pr.com

Kai Rodriguez

kai@gold-pr.com

EV Mobility PR Contact:

Laurie DiGiovanni

laurie@evmobility.com

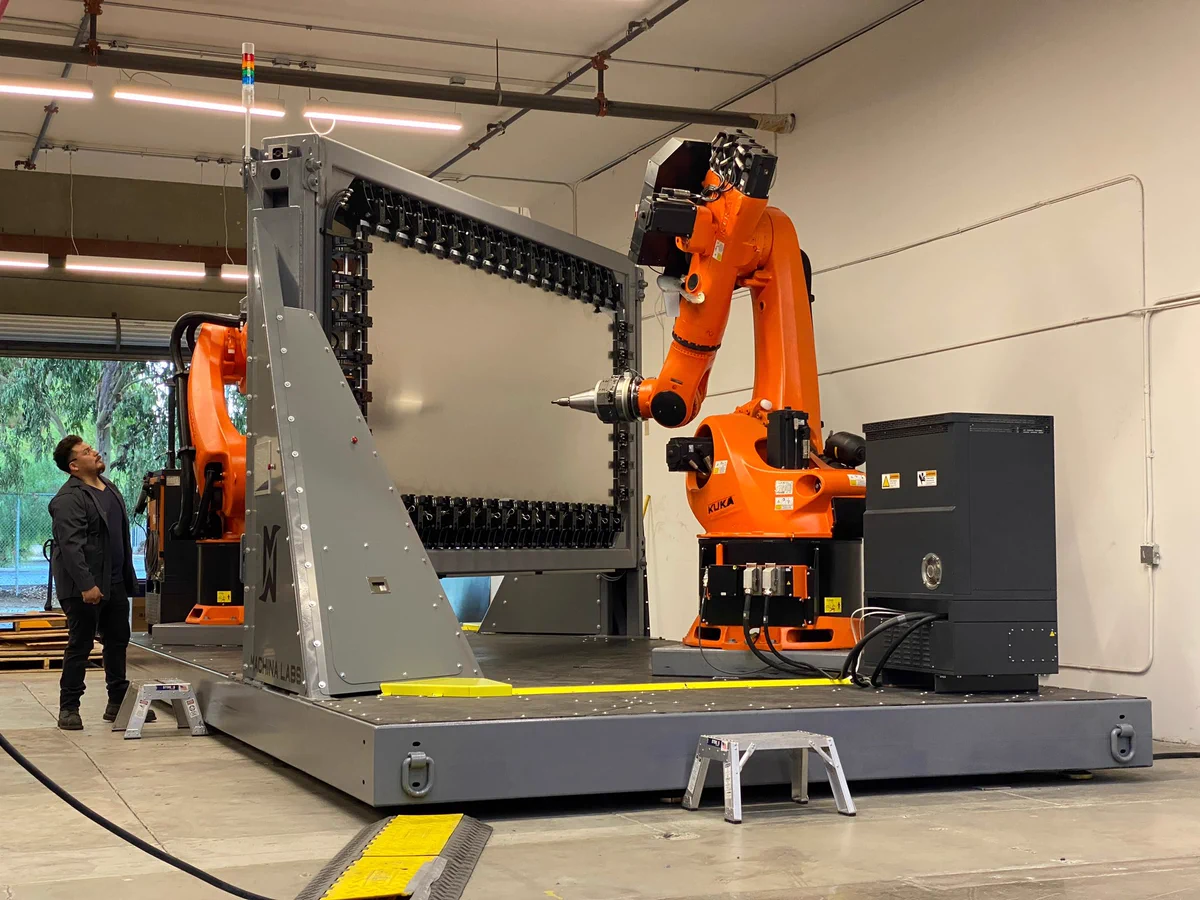

Machina Labs unveils portable AI robotics system for metal forming for agile manufacturing at FABTECH

Machina Labs to Unveil the Machina Deployable System at FABTECH

Portable System for Metal Forming Combines AI and Robotics for Agile Manufacturing

Machina Labs announced general availability of the Machina Deployable System, a commercially available portable robotic system that digitally forms and cuts custom composite and metal parts. The Machina Deployable System will make its public debut at FABTECH from September 11-14 in Chicago. Combining the power of artificial intelligence (AI) and robotics with unparalleled portability, the company’s Deployable System empowers manufacturers in a wide variety of industries to elevate production capabilities with minimum disruption to facilities.

Machina Labs is now making its RoboForming™ technology available as an on-premises, stand-alone option for virtually any manufacturing site or other production facility, including remote locations such as an aircraft carrier, where it can be installed into an existing environment without significant changes to the facility's foundations and can work with any industrial robot. Machina customers are already using the company’s offering for tooling, sustainment, research and development, rapid prototyping, and production, rapidly producing large, complex sheet metal parts at the point of use. The Machina Deployable System can form virtually any metal (aluminum, steel, titanium, Inconel, and more) up to ¼ inch thick and into parts as large as 12 x 5 x 4 feet. If it bends, the Machina Deployable System can form it.

“Machina Labs has a mission to develop manufacturing solutions that give businesses the ability to make changes with ease, and iterate and produce rapidly,” said Edward Mehr, CEO and Co-Founder of Machina Labs. “Our portable Deployable System is a game-changer in the manufacturing world. By providing manufacturers with a portable solution that combines flexibility, precision, and speed, we are essentially putting a twenty-first century blacksmith shop in the backyard of any business that wants one.”

From small, custom workshops to large-scale manufacturing facilities, the Deployable System redefines the possibilities of on-demand manufacturing, rapid prototyping, and agile hardware development and production. The United States Air Force, for example, is using the Machina Deployable System for the maintenance and repair (sustainment) of older aircraft for which suppliers no longer exist and parts are no longer fabricated.

The Machina Deployable System consists of a portable platform, two 7-axis robotic arms, a tool-changing corral, and a configurable frame, along with AI-driven process controls. The system can be transported on the back of a truck, can be up and running on-premises within hours, and works with any industrial robot. Questions about price, availability, and training can be directed to https://machinalabs.ai/contact-us.

“Machina Labs’ advanced manufacturing platform utilizes our advanced robotic arms in new and innovative ways. Their intelligent process controls and proprietary end-effectors are unlocking cutting-edge manufacturing capabilities never thought possible that we are delighted to support,” according to Casey DiBattista, Chief Regional Officer – North America for KUKA Robotics. “We pride ourselves on partnering with forward-thinking companies like Machina Labs that pioneer new ways for automation to impact industry. We are excited to host the debut of the Machina Deployable System at the KUKA booth B27051 during this year’s FABTECH.”

The Machina Deployable System will be on display for the first time at FABTECH from September 11-14, 2023, in KUKA’s booth (B27051). Delivery of the new portable system will start in the fall of 2023. For more information or to request a demo, please visit https://machinalabs.ai/contact-us.

Machina Labs CEO Edward Mehr is speaking at FABTECH on September 11 and 13. On September 11, he will discuss “Robotic Sheet Shaping: A Thesis on the Future of Manufacturing” at 2:00 pm CT in Room S501BC. On September 13, Mehr is participating in a panel entitled “Advancing Robotics in the Fabrication Metal Industry” at 12:30 pm CT in the FABTECH Theater.

About KUKA

KUKA is a global automation corporation with sales of around 4 billion euros and around 15,000 employees. The company is headquartered in Augsburg, Germany. As one of the world’s leading suppliers of intelligent automation solutions, KUKA offers customers everything they need from a single source: from robots and cells to fully automated systems and their connectivity, in markets such as automotive (with a focus on e-mobility and batteries), electronics, metal and plastics, consumer goods, e-commerce, retail, and healthcare.

About Machina Labs

Founded in 2019 by aerospace and automotive industry veterans, Machina Labs is an advanced manufacturing company based in Los Angeles, California. Enabled by advancements in artificial intelligence and robotics, Machina Labs is developing Software-Defined Factories of the Future. The mission of the company is to develop modular manufacturing solutions that can be reconfigured to manufacture new products simply by changing the software. For more information, please visit https://www.machinalabs.ai/.

Contacts

Tim Smith

Element Public Relations, for Machina Labs

415-350-3019

tsmith@elementpr.com

Chuck Bates

dgs Marketing Engineers, for KUKA

440-725-1269

bates@dgsmarketing.com

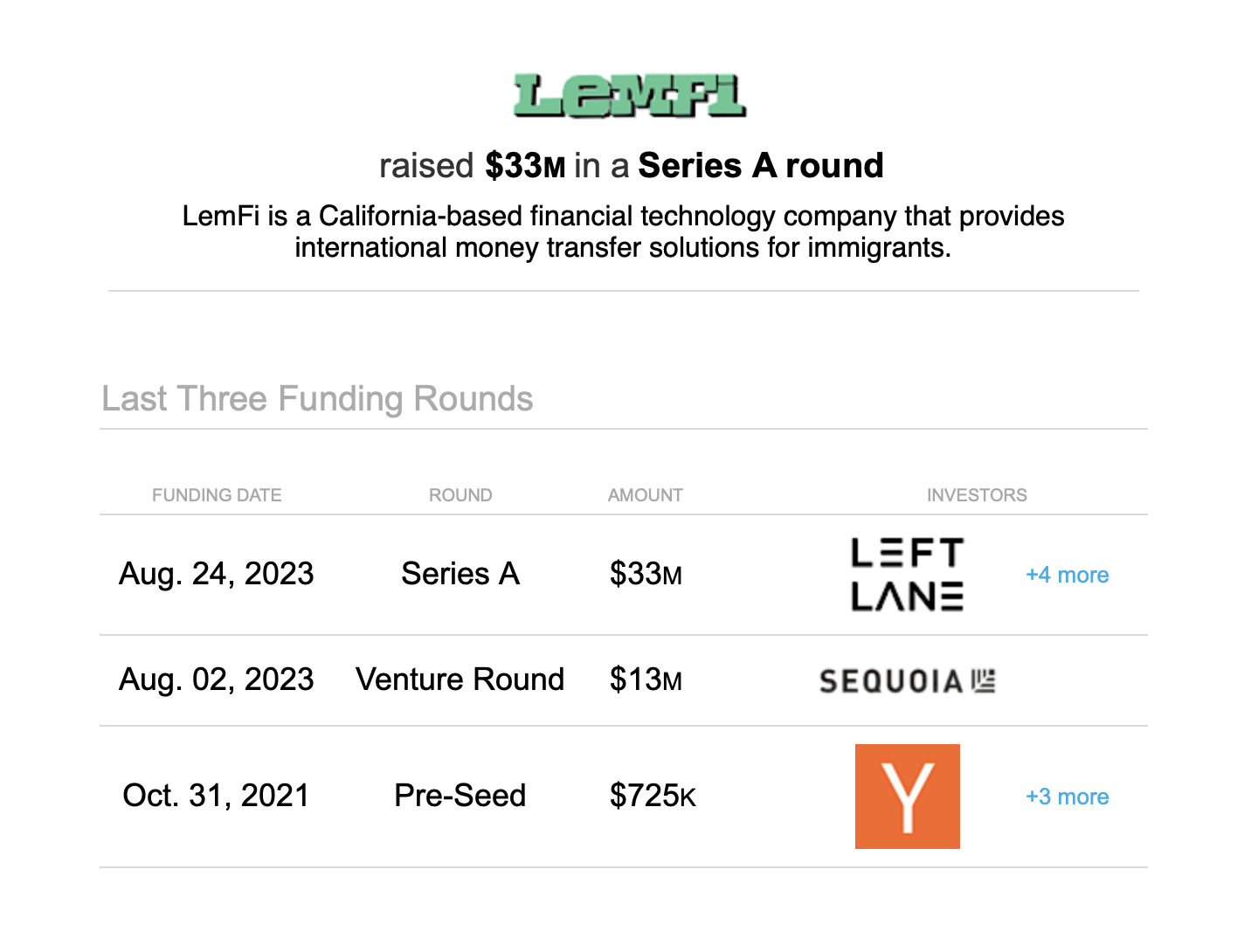

LemFi raises $33 million Series A to accelerate the transformation of financial services for immigrants globally

We are excited to see LemFi close its Series A to accelerate its remarkable post-Y Combinator trajectory and its global momentum in transforming digital banking and financial services. The team is doing a phenomenal job executing on its growth as it expands its product offering and global customer base.

"We're pleased to announce the successful conclusion of our Series A round. Over 500,000 people believe in us and in our mission to enable International Payments for Everyone. Three years of rewarding work done, many more exciting ones to come."

ALSO READ:

Exclusive: African immigrant-focused fintech LemFi raises $33 million - Axios

LemFi bags $33m in Series A funding round to fuel global expansion - Fintech Futures

LemFi, a leading fintech platform transforming financial services for immigrants, has raised a $33 million Series A round led by Left Lane Capital. Other investors included Y Combinator, Zrosk, Global Founders Capital, and Olive Tree.

Each year, millions of immigrants move abroad to start new lives, and the biggest hurdle they face is access to financial services. In 2020, founders Ridwan Olalere and Rian Cochran joined forces to solve these challenges, with a vision to build a platform that empowers the next generation of immigrants.

Olalere and Cochran first met while building OPay - one of Africa's most successful fintech platforms. After combined decades of navigating the underlying complexities and regulatory nuances of cross-border payments in emerging markets, the two were uniquely positioned to leverage their experience as a launching pad for LemFi.

Olalere, LemFi's CEO, drew from his own experience as an immigrant and shared, "Having lived on three continents and leading a multicultural team, our mission is deeply personal: creating a world where financial services are universally accessible. We've already made life easier for over half a million people, but we're only just getting started."

Today, within minutes of residency, a user can onboard with LemFi and use its multi-currency offering to send, receive, hold, convert and save in the currencies of both their country of origin and country of residence. In addition, LemFi also offers instant international transfers at the best exchange rates with zero fees on transfers or account maintenance.

"Our product is a game changer for users since traditional banks and other leading neo-banks have always steered clear of less common or more volatile currencies," Ridwan Olalere explained. "This has driven immigrants to often use unsafe, informal channels or to stitch together several other services to solve some of their basic financial needs. Until now."

In 2020, LemFi launched in Canada to enable easy, low-cost remittance payments to Nigeria, Ghana, and Kenya. In 2021, the company expanded to the UK and, in parallel, broadened its reach by enabling 10 new African remittance corridors.

In a strategic move, LemFi acquired UK-based Rightcard Payment Services in late 2021. Through the acquisition, LemFi obtained an Electronic Money Institution (EMI) license from the UK's Financial Conduct Authority, allowing it to provide customers with more services, such as higher transaction limits and e-money accounts.

"LemFi has been very deliberate and strategic in acquiring licenses and building a robust network of financial institution partners to facilitate cross-border payments for immigrants," said Matthew Miller, Principal at Left Lane Capital, who joined LemFi's Board of Directors as part of the transaction. "We're excited to support LemFi as it expands its product offering to serve more immigrant communities globally."

2023 has similarly been a pivotal year for LemFi, with its new subsidiary Rightcard Payment Services securing an International Money Transfer Operator (IMTO) license from the Central Bank of Nigeria. The IMTO license will enable LemFi to offer its services in partnership with Nigerian banks, empowering users by eliminating the need for intermediaries. The Acting Governor of the Central Bank, Folashodun Shonubi, recognizes the alignment of LemFi with the Central Bank's goals of improving the availability of foreign exchange in Nigeria and ensuring exchange rate stability.

LemFi remains committed to offering accessible and transparent financial services to migrant communities across the globe. With the conclusion of this latest investment round, the Company will seek to expand its product offering to the United States, Europe, the Middle East, and Asia, as well as innovate on new product offerings according to the needs of its users.

About Left Lane Capital

Founded in 2019, Left Lane Capital is a New York and London based global venture capital and growth equity firm investing in internet and technology companies with a consumer orientation. Left Lane's mission is to partner with extraordinary entrepreneurs who create category-defining companies across growth sectors of the economy, including software, healthcare, e-commerce, consumer, fintech, edtech, and other industries. Select investments include GoStudent, M1 Finance, Wayflyer, Yokoy, Masterworks, Blank Street, Talkiatry, WeTravel, and more. For more information, please visit www.leftlane.com.