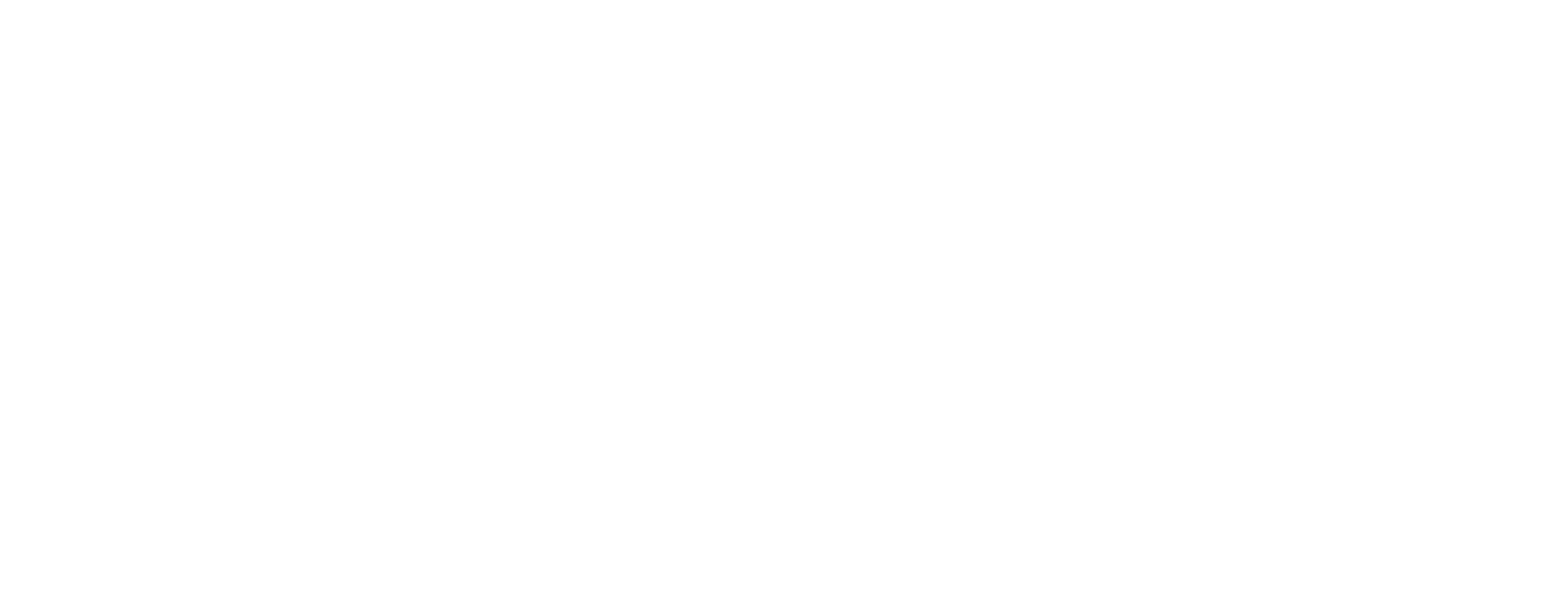

Enterprise AI leader TrueFoundry launches AI Gateway, a unified control plane for agentic AI

TrueFoundry Enables Enterprises to Manage, Observe, and Govern Models, Model Context Protocol (MCP) Servers, and Agents, Keeping Their Stack Cost-optimal and Future-ready

Govern, Deploy, Scale & Trace Agentic AI in One Unified Platform

TrueFoundry, an enterprise AI infrastructure platform, launched its AI Gateway on Product Hunt. The AI Gateway landed a spot in the top three and garnered more than 135 comments from the Product Hunt community, making it one of the most hunted products of the week. Already used in production for scaling thousands of agents across multiple Fortune 1000 companies, TrueFoundry’s AI Gateway provides a central hub where enterprises can manage, observe and govern agents.

Enterprises today are encountering significant roadblocks with AI evolving faster than their internal infrastructure can support. As workflows continue to shift toward agentic automation, there’s a real need for a centralized platform to manage models, prompts, guardrails, MCP servers and other agents. TrueFoundry is addressing this by providing a single control plane that connects various systems, keeping them organized, secure, and ready for the future.

TrueFoundry’s AI Gateway addresses the most complex enterprise challenges and serves as a centralized traffic controller for all agentic apps and corresponding components. It is specifically engineered to:

- Eliminate the "security blind spots" that arise when developers scatter API keys and credentials across disparate agent codebases.

- Provide a unified gateway architecture, governed registry of tools (via MCP), granular access controls, and production-ready observability.

- Empower teams to efficiently scale from prototype agents to enterprise automation.

“The response to our launch on Product Hunt proves there is clear demand for stronger agent-to-agent AI management tools, which we believe will become one of the most critical layers in the next generation of AI Production infrastructure,” said Anuraag Gutgutia, co-founder of TrueFoundry. “Our aim is to help teams transition from testing ideas to implementing them in real-life situations smoothly and efficiently, without wasting resources.”

To learn more about TrueFoundry, visit truefoundry.com.

About TrueFoundry

TrueFoundry is an Enterprise Platform as a Service that enables companies to build, observe, and govern Agentic AI applications securely, scalably, and reliably through its AI Gateway and Agentic Deployment platform. Leading Fortune 1000 companies trust TrueFoundry to accelerate innovation and deliver AI at scale, with over 10 billion requests per month processed via the TrueFoundry AI Gateway and more than 1,000 clusters managed by its Agentic Deployment platform. TrueFoundry’s vision is to become the central control plane for running Agentic AI at scale within enterprises, serving as the command center for enterprise AI. Headquartered in San Francisco, TrueFoundry operates across North America, Europe, and Asia-Pacific, supporting enterprise AI deployments for some of the world’s most innovative organizations. To learn more about TrueFoundry, visit truefoundry.com.

Contacts

Media Contact

Michelle Mead

VSC for TrueFoundry

truefoundry@vsc.co

Octane expands into the automotive market with Captive-as-a-Service for dealership groups

Octane announced a major expansion into the automotive industry with its turnkey Captive-as-a-Service solution, which helps dealerships maximize their return on each sale and deliver a superior customer experience.

A smart move by the Octane® team, stepping into auto financing with a wholesale approach to dealer captives. When the capital stack, underwriting and servicing engines are built with compliance and performance in mind from day one, lenders and partners see stronger recoveries and fewer surprises down the road. If anyone in the ecosystem is thinking about how to operationalize that balance between asset performance and regulatory discipline.

- Octane’s Captive-as-a-Service is a turnkey solution that offers automotive dealer groups the benefits of owning their own captive without the time and expense of building one from scratch

- Brings together technology, underwriting, loan processing and servicing, compliance, and capital markets execution in a single platform under the dealer’s chosen brand

- Enables dealer groups to participate in loan originations facilitated through their captive brand, diversifying their revenue streams and building long-term enterprise value

- Strengthens customer retention by delivering a fully-branded end-to-end experience and creating new opportunities to engage with customers

- Leverages Octane’s successful track record: more than $7 billion in originations, more than $4.7 billion of asset-backed securities (ABS) issued, and AAA-ratings*

Octane® (Octane Lending, Inc.), the fintech company unlocking the power of financial products for retailers and consumers, announced today that it has entered the auto market to help dealerships maximize their return on each car sale while delivering a superior customer experience.

Octane’s Captive-as-a-Service offering enables auto dealerships to enjoy the benefits of owning a captive finance company without the significant up-front costs, time, effort, and investment required to establish such a business. Octane’s turnkey solution brings together core captive business elements, including credit underwriting, loan processing, loan servicing, funding and capital markets execution, under a brand name of the partner’s choice. Dealer partners and their customers benefit from a seamless technology-forward experience.

Additionally, tailored programs can include access to digital soft-pull tools, Octane Prequal, and Prequal Flex®, to drive qualified leads; a customized lending platform to support dealership-level promotions; and monthly customer touchpoints enabling dealer marketing for the duration of a consumer’s loan. As a result, Octane’s Captive-as-a-Service solution allows dealers to earn more money on each sale, grow and diversify their earning streams, strengthen customer loyalty, and build long-term enterprise value.

“At Octane, we are constantly looking for ways to help our partners grow their businesses. With Captive-as-a-Service, we give dealer groups the flexibility to create a program to meet their specific needs, like supplementing their existing lender mix, connecting their in-store and digital strategy, or turning their loan portfolio into a profit center,” said Jason Guss, Co-Founder and Chief Executive Officer of Octane. “After seeing success with captive and private label partnerships in the powersports and RV markets, we are excited to bring our Captive-as-a-Service solution to the auto market.”

“We’re extremely impressed with Octane’s efficient and seamless process, providing an integrated financing experience that directly benefits our customers,” said Roger W. Holler III, President & CEO at Holler-Classic Family of Dealerships. “Our buyers across the credit spectrum can move confidently through the loan process, while giving us more control as we continue to scale our business. Partnering with Octane’s team has been fantastic; a smart business move for the Holler-Classic Family of Dealerships.”

Through its in-house lender, Roadrunner Financial®, Inc., Octane has surpassed $7 billion in aggregate originations since its founding in 2014. The company has considerable underwriting expertise and consistent credit performance, as evidenced by its AAA ratings*. Dealers can benefit from Octane’s in-house loan processing team, which offers support seven days a week, and its award-winning loan servicing team, which handles in-house payments, collections, and recovery. Dealers can also leverage Octane’s significant capital markets experience; the company has issued more than $4.7 billion of asset-backed securities (ABS) since establishing its ABS program in December 2019, has cumulatively sold or secured commitments to sell $3.3 billion in loans through whole loan sale and forward flow transactions, and maintains strong relationships with institutional investors, banks, rating agencies, and warehouse capital providers. Octane’s leadership team, which includes Steven Fernald, President and CFO, Mark Molnar, Chief Risk Officer, and David Bertoncini, SVP of Credit Strategy, Auto, has more than 50 years of combined experience in capital markets, financial services, compliance, and credit risk, and over 25 years of combined experience in auto lending.

“The Octane Captive program makes an incredibly powerful tool — captive financing — approachable and deployable for us as a dealer group,” said Matt Greenblatt, Owner/Dealer Principal at Matt Blatt Dealerships. “We are able to serve our customers better, see a fantastic return on our portfolio, and leverage the Octane team to implement solutions that save us money and bring in new customers. This is a new area of growth and profit for us and we are excited to be leading the industry and embracing it.”

“Our Captive-as-a-Service solution also unlocks countless marketing opportunities to drive value across the dealership, like coupons for servicing and maintenance or remarketing messages to drive the next sale,” said Mark Davidson, Co-Founder and Chief Growth Officer at Octane. “At the same time, we help dealers deliver a fast, seamless financing experience to their customers through our digital tools and instant credit decisioning, and responsibly extend credit to a wide range of customers through our proprietary underwriting model.”

Learn more at octane.co/o/captive-lending.

*On the senior class of notes of the company’s asset-backed securitizations. The full analysis for S&P’s ratings, including any updates, which you should review and understand, is available on spglobal.com and can be accessed here. KBRA’s ratings are subject to all of the terms and conditions set forth in the related report and KBRA’s website, which you should review and understand, and can be accessed here.

Media Relations: Shannon O'Hara

Senior Vice President, Communications and People at Octane

Press@octane.co

Investor Relations:

IR@octane.co

AI infrastructure innovator Neurophos closes $110 million Series A to launch exaflop-scale photonic AI chips

The era of the "Optical Processing Unit" (OPU) powering the next generation of AI infrastructure has arrived.

ALSO READ

From invisibility cloaks to AI chips: Neurophos raises $110M to build tiny optical processors for inferencing - TechCrunch

For years, we’ve known that AI is evolving faster than the hardware that supports it. Traditional silicon GPUs are hitting a wall, consuming massive amounts of energy and generating heat that data centers can no longer manage.

2026 marks the demarcation line where AI infrastructure shifts to photonics. That shift is imperative to unlock the true value and global scale of artificial intelligence, and it will be powered by light.

We're thrilled to share that Neurophos has closed its Series A funding round, in which we made a follow-on investment after participating in the seed round. The Series A, led by Gates Frontier with participation from Microsoft’s M12, will scale what might be the most significant "physics-level" breakthrough in a decade: the OPU photonic AI chip.

Here is why the industry is buzzing about Neurophos:

- 100x More Efficient: By using light (photons) instead of electricity to perform calculations, these chips deliver up to 100x the performance and energy efficiency of traditional silicon.

- The 10,000x Shrink: Their team has achieved the "impossible" by shrinking optical components by 10,000x to pack over 1 million processing elements on a single chip.

- Sustainable Scaling: This isn't just about speed; it's about the planet. This technology slashes the carbon footprint of AI, allowing us to scale intelligence without crashing the power grid.

- "Drop-in" Ready: No need to rebuild the rack. OPUs are designed to seamlessly replace existing GPUs.

With a powerhouse team featuring veterans from NVIDIA, Apple, Meta, and Intel, Neurophos isn't just predicting the future of compute—they’re building it.

Neurophos, a leader in photonic AI chip technology, has raised $110 million in an oversubscribed Series A round, bringing total funding to $118 million. The round was led by Gates Frontier, with participation from M12 (Microsoft's Venture Fund), Carbon Direct Capital, Aramco Ventures, Bosch Ventures, Tectonic Ventures, Space Capital, and others.

"Modern AI inference demands monumental amounts of power and compute," said Dr. Marc Tremblay, Corporate Vice President and Technical Fellow of Core AI Infrastructure at Microsoft. "We need a breakthrough in compute on par with the leaps we've seen in AI models themselves, which is what Neurophos' technology and high-talent density team is developing."

As AI adoption accelerates, data centers face critical limitations in power and scalability. Traditional silicon-based GPUs cannot meet growing computational demands, resulting in increased costs and energy consumption. Neurophos addresses these challenges with a proprietary optical processing unit (OPU) that integrates over one million micron-scale optical processing elements on a single chip. This innovation delivers up to 100x the performance and energy efficiency of current leading chips, offering a practical, drop-in replacement for GPUs in data centers.

"Moore's Law is slowing, but AI can't afford to wait. Our breakthrough in photonics unlocks an entirely new dimension of scaling, by packing massive optical parallelism on a single chip," says Dr. Patrick Bowen, CEO and Co-Founder of Neurophos. "This physics-level shift means both efficiency and raw speed improve as we scale up, breaking free from the power walls that constrain traditional GPUs."

Neurophos' breakthrough lies in the development of micron-scale metamaterial optical modulators—a 10,000x miniaturization over previous photonic elements—making large-scale, manufacturable photonic computing possible for the first time. The result is a new class of AI accelerator: ultra-fast, energy-efficient, and adaptable to future AI workloads.

"As the AI industry grapples with a surge in demand that tests our ability to satisfy with compute and power, disruptive approaches to compute may open routes to sustained or accelerated systems scaling that will be needed before the end of the decade. With their approach to hyper-efficient optical computation, the Neurophos team have advanced swiftly from a working proof of concept towards a realistic plan to deliver products on a timeline we can underwrite and believe in," said Michael Stewart, Managing Partner at M12, Microsoft's Venture Fund.

"From the start, we backed Neurophos because we believed the future of AI was bound by physics, not by algorithms," said Chris Alliegro, Managing Partner at MetaVC Partners. "Neurophos is addressing the only problem that really matters for the future of AI: the limits imposed by silicon. Their optical architecture provides the foundation for the next generation of machine intelligence."

Neurophos' technology enables significant reductions in power consumption, supporting the next generation of AI infrastructure without the need for exponential increases in energy or physical resources. The company's advancements promise to make AI more accessible and cost-effective across industries.

"Reducing chip-related emissions is now as essential as delivering compute," said Jonathan Goldberg, CEO of Carbon Direct Capital. "Neurophos offers step-function gains in both. This is the kind of next-generation AI infrastructure companies urgently need as their compute demands skyrocket."

The new funding will accelerate delivery of Neurophos' first integrated photonic compute system, including datacenter-ready OPU modules, a full software stack, and early-access developer hardware. The company is expanding its Austin headquarters and opening a San Francisco engineering site to meet early customer demand.

Additional investors include DNX Ventures, Geometry, Alumni Ventures, Wonderstone Ventures, MetaVC Partners, Morgan Creek Capital, Silicon Catalyst Ventures, Mana Ventures, Gaingels, and others. Cooley LLP serves as legal counsel.

About Neurophos

Neurophos Inc. is an Austin-based semiconductor company developing high-performance, energy-efficient photonic AI inference chips. Founded by Dr. Patrick Bowen and Dr. Andrew Traverso, the team includes industry veterans from NVIDIA, Apple, Samsung, Intel, AMD, Meta, ARM, Micron, Mellanox, Lightmatter, and more. For details, visit www.neurophos.com.

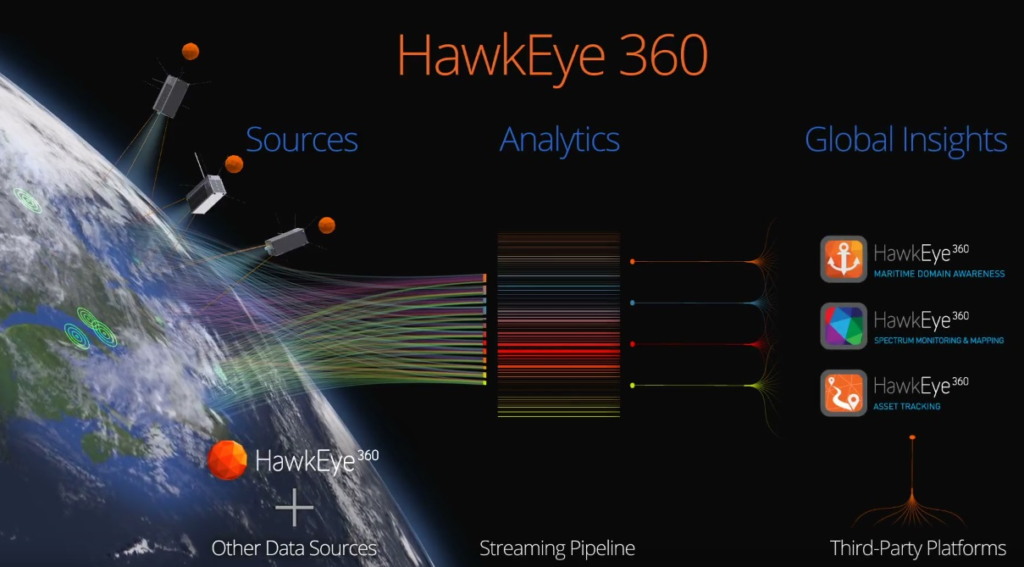

Signals intelligence data and analytics leader HawkEye 360 launches satellite Cluster 13

HawkEye 360 successfully launched Cluster 13 on Sunday morning and has confirmed initial communications with the satellites.

This deployment advances their ability to support U.S. Government and international partners with consistent, high-quality RF data across multi-domain mission environments. Alongside recent strategic investments, Cluster 13 reflects a continued focus on strengthening the technology and capabilities their customers rely on.

The global leader in signals intelligence data and analytics, HawkEye 360, successfully launched its latest satellite trio, Cluster 13, and confirmed initial communications with the satellites. Cluster 13, integrated via Exolaunch, launched into a sun-synchronous orbit as part of the Twilight rideshare mission aboard a SpaceX Falcon 9 rocket.

This successful deployment and initial contact advance HawkEye 360's ability to support U.S. Government and international partners with consistent, high-quality radio-frequency insights across multi-domain mission environments. Operating in a sun-synchronous orbit, the satellites provide consistent opportunities to collect RF data over key regions, strengthening the delivery of RF insights worldwide.

"Cluster 13 strengthens our ability to provide the critical RF insights our partners need to navigate today's complex mission landscape," said John Serafini, CEO of HawkEye 360. "Alongside our recent acquisition and funding milestone, this launch reflects a continued investment in the technology, people, and capabilities our customers rely on, reinforcing HawkEye 360's role as a leader in signals intelligence."

The payload leverages advanced RF detection, enhanced onboard processing, and upgraded waveform-collection capabilities first introduced across recent launches. Together, these technologies capture a broader range of signals with greater clarity, improve geolocation performance, and increase overall collection capacity. As a result, HawkEye 360 strengthens multi-domain mission support and enables customers to access RF insights more efficiently.

Following commissioning and on-orbit checkout, the satellites will integrate into HawkEye 360's space-derived signals intelligence architecture. This integration advances the company's use of scalable signal processing and AI-enabled analytics to detect, characterize, and geolocate radio-frequency activity worldwide, reinforcing HawkEye 360's defense-tech mission to deliver trusted domain awareness and support critical operations for defense and government partners.

About HawkEye 360

HawkEye 360 is equipping defense, intelligence, and national security leaders with mission-critical signals intelligence to enable faster, better decision-making. By detecting, geolocating, and characterizing radio-frequency emissions worldwide, HawkEye 360 delivers trusted domain awareness and early-warning indicators to the US Government and allied partners. Our space-based collection, proprietary signal processing, and AI-powered analytics transform knowledge of RF spectrum into a strategic advantage. Proven by operational mission success, HawkEye 360 is redefining how signals intelligence strengthens national and global security.

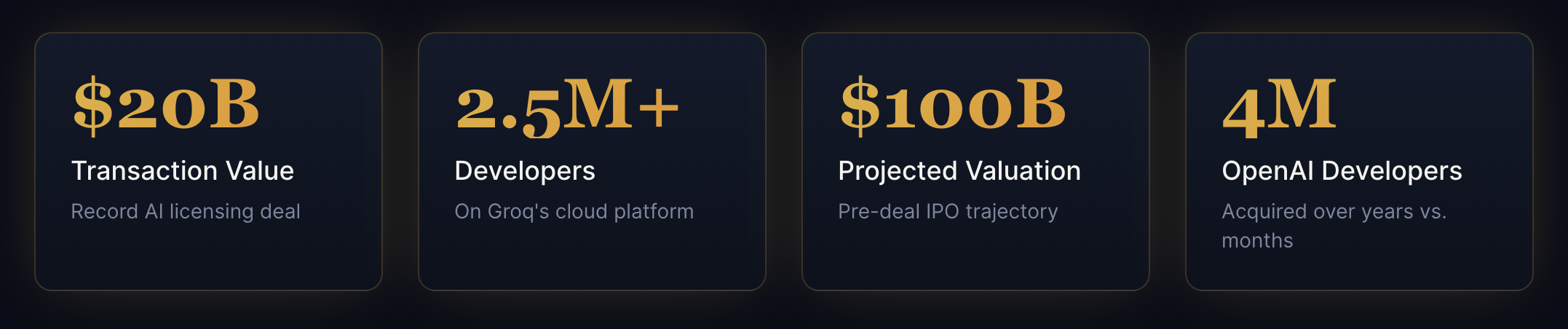

Nvidia's $20 Billion Groq Deal Reshapes AI Infrastructure for the age of inference

A record-breaking licensing transaction signals the dawn of the inference era and validates Groq's meteoric rise

Nvidia's $20 Billion Groq Deal: A Strategic Masterstroke in the Inference Era

As inference emerges as the defining battleground in AI's global expansion, Nvidia has made a decisive move: striking a $20 billion licensing agreement with Groq, one of the fastest-growing and best-executing companies in our portfolio.

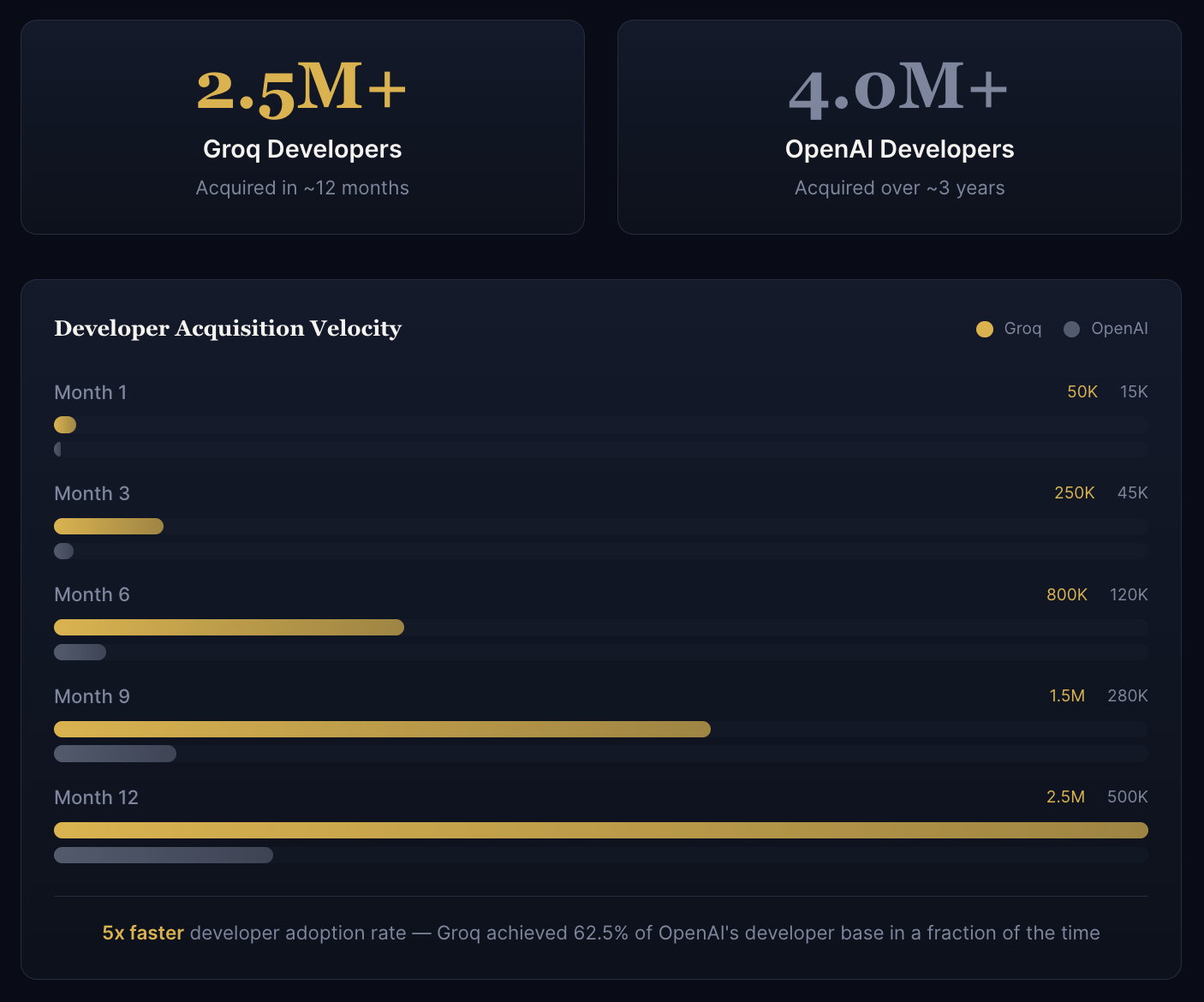

The numbers speak for themselves. Since launch, CEO Jonathan Ross and his team have been on a remarkable trajectory: over 2.5 million developers onboarded to their cloud, major global partnerships secured, and data centers deployed at a pace unmatched by any modern AI infrastructure company. For context, OpenAI took years to reach 4 million developers, while Groq reached more than half that figure in months.

While we're thrilled to witness this record-setting outcome for the AI industry, we'll admit to some surprise. Based on Groq's momentum, we had been anticipating an IPO path with a potential $100 billion valuation within the next 24 months.

The Deal Structure

Understanding the mechanics of a $20B licensing transaction

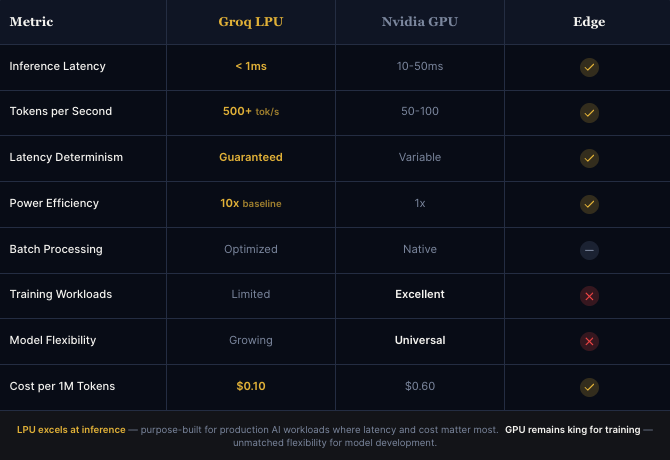

Nvidia's $20 billion licensing transaction with Groq represents a strategic pivot in how the AI giant approaches the rapidly evolving inference market. Rather than a traditional acquisition, this licensing structure allows Nvidia to integrate Groq's revolutionary LPU (Language Processing Unit) technology into its ecosystem while enabling Groq to maintain operational independence.

The deal structure is particularly notable for its focus on intellectual property licensing rather than equity acquisition. This approach provides Nvidia with immediate access to Groq's inference acceleration technology—critical as AI deployment shifts from training-heavy workloads to inference-dominated production environments.

"As inference becomes the next biggest phase in global AI scale and adoption, Groq became a must-have to add an inference layer to the Nvidia ecosystem."

Company & Employee Impact

What this means for Groq's trajectory and team

CEO and founder Jonathan Ross has led Groq on what can only be described as an epic trajectory. The company's execution has been remarkable: acquiring over 2.5 million developers on their cloud platform, closing major global partnerships, and building data centers at a pace that outstrips any modern AI infrastructure company.

For context, OpenAI accumulated 4 million developers over several years—Groq achieved more than half that figure in mere months. This velocity of adoption speaks to both the performance advantages of Groq's LPU architecture and the team's exceptional go-to-market execution.

Team Continuity

The licensing structure allows Groq's team to continue operating independently, preserving the culture and velocity that made them attractive to Nvidia in the first place.

Operational Scale

With $20B in licensing capital, Groq can accelerate data center buildout and talent acquisition without the constraints of traditional venture funding cycles.

Developer Adoption: Groq vs OpenAI

The Investor Perspective

A record outcome with an unexpected path

Many believed Groq was on a clear path to IPO, with projections suggesting a potential $100 billion valuation within 24 months post-listing. The decision to pursue a licensing arrangement with Nvidia rather than the public markets signals either an extraordinarily compelling offer or a strategic calculation about market timing and partnership value.

"We were thrilled to see this record AI industry outcome, but also surprised based on our belief that Groq was heading to IPO and a potential $100 billion valuation in the next 24 months."

Investment Thesis Validated

Groq has been described as "one of the fastest growing, best executing companies" in the AI infrastructure space. This deal validates the thesis that purpose-built inference hardware would become critical infrastructure as AI moves from research labs to production deployment at scale.

Technology & Industry Implications

Reshaping the AI infrastructure landscape

The strategic rationale for Nvidia is clear: as AI workloads shift from training to inference, the company needs to maintain its dominant position across the entire AI compute spectrum. Groq's LPU architecture offers deterministic latency and exceptional throughput for inference workloads—capabilities that complement Nvidia's GPU-centric approach.

LPU vs GPU: Performance Comparison

For Nvidia

Adds a critical inference layer to the ecosystem, positioning Nvidia to capture value across both training and production AI workloads without cannibalizing GPU sales.

For Groq

Gains access to Nvidia's enterprise relationships and global distribution, accelerating LPU adoption while maintaining technological independence.

For the Industry

Signals that inference optimization is now a first-class concern, validating investments in purpose-built inference infrastructure by other startups.

For Developers

The 2.5M+ developers on Groq's platform now have a pathway to deeper Nvidia integration, potentially simplifying hybrid training-inference deployments.

The Groq Journey

From Google TPU spinout to record-breaking $20B deal

Looking Ahead

The inference era begins

Nvidia's willingness to pay $20 billion for licensing rights to inference technology validates what many in the industry have long believed: the real value in AI isn't in training models, but in deploying them at scale. Groq's LPU architecture, with its deterministic performance and exceptional throughput, is now positioned to be the inference backbone of the world's most valuable technology company's AI ecosystem.

For Groq, its team, and its investors, this is more than a transaction—it's validation of a vision that bet big on inference when the world was still focused on training.

Aerospace innovator Natilus expands globally into the fastest-growing aviation markets with SpiceJet India

Natilus and SpiceJet have partnered, with SpiceJet ordering 100 of Natilus's innovative blended-wing aircraft, aiming for greater fuel efficiency, lower costs, and reduced emissions in India's growing market. Natilus has established an Indian subsidiary (Natilus India) in Mumbai to support operations, as SpiceJet helps with local certification for these futuristic, sustainable planes, potentially transforming domestic air travel.

- The company's new subsidiary in Mumbai, Natilus India, will serve Indian airlines' need for new planes amid the country's rapid economic growth

- SpiceJet places purchase order for 100 of Natilus's HORIZON passenger aircraft to reduce the airline's emissions and operational costs

- The move reflects India's appetite for new aircraft that supplement commercial fleets and open the door to new domestic and international routes

Natilus, a U.S. aerospace manufacturer of blended-wing body (BWB) aircraft, today debuted Natilus India, a subsidiary headquartered in Mumbai and led by Ravi Bhatia, Regional Director, to support in-country operations. Simultaneously, Natilus announced its first commercial partnership with one of India's largest passenger airlines, SpiceJet Ltd., which plans to purchase 100 of Natilus's flagship passenger plane, HORIZON, once the plane is certified in India. SpiceJet sees HORIZON as an opportunity to advance its sustainability efforts and drive forward aviation innovation in India, and it will be the first airline in India to add HORIZON to its fleet.

Natilus India will prioritize the expansion of Natilus's family of BWB aircraft into Indian markets and will coordinate closely with SpiceJet. This development also positions Natilus to begin exploratory sourcing of Indian-manufactured components.

"We see immense opportunity to deliver a superior and more-efficient airplane for Indian airlines, such as SpiceJet," said Ravi Bhatia, Regional Director of Natilus India. "The establishment of the subsidiary in India is the first step in Natilus establishing roots in India and ultimately securing more commercial airline customers who want to better serve the needs of their passengers."

Natilus is developing a family of blended-wing aircraft including its flagship passenger plane HORIZON and cargo plane KONA. Through improvements in aerodynamics, Natilus's blended-wing aircraft offer 40% greater capacity and 50% lower operating costs while using 30% less fuel. Natilus's HORIZON, which can transport up to 240 passengers in its high-capacity configuration, is a prime solution for Indian commercial airlines looking to grow their fleets and keep flights affordable. Deliveries of the HORIZON will begin in the 2030s, at which point the HORIZON is poised to become the most cost- and fuel-efficient aircraft in existing commercial and cargo fleets.

"Today, India has one of the fastest growing markets in the world and it has an appetite for new aircraft that can both supplement its commercial carrier fleets, while opening the door to new domestic and international routes," said Aleksey Matyushev, CEO and Co-Founder of Natilus. "Our HORIZON serves as an ideal solution and represents an innovative path forward for the industry, for India and for the world."

HawkEye 360 closes $150 million financing, including Series E, and strategic acquisition of Innovative Signal Analysis (ISA)

ALSO READ

HawkEye 360 Acquires ISA, Expanding One of the Industry's Most Advanced Signal-Processing Platforms

HawkEye 360, the global leader in signals intelligence data and analytics, today announced the completion of its acquisition of Innovative Signal Analysis (ISA), supported by equity and debt financings totaling $150 million.

The acquisition of ISA significantly expands HawkEye 360's signal-processing capabilities, bringing advanced algorithms, mission-ready systems, and deep engineering expertise that enhance the company's ability to detect, characterize, and analyze complex RF activity. ISA's technology and team strengthen HawkEye 360's end-to-end platform by accelerating data processing, improving performance in challenging RF environments, and supporting more scalable delivery of insights to customers.

This Series E preferred equity financing round was co-led by existing investor NightDragon and Center15 Capital, with additional secured and mezzanine debt financing from Silicon Valley Bank, a division of First Citizens Bank, Pinegrove Venture Partners, and Hercules Capital, Inc. The funding supports HawkEye 360's acquisition of ISA and strengthens the company's financial position, reinforcing the company's disciplined approach to growth and long-term financial management.

"This transaction marks an important step forward for HawkEye 360 as we continue to scale our platform and integrate highly complementary technical capabilities," said John Serafini, CEO of HawkEye 360. "The acquisition of ISA cements our position as the leading provider of RF data, signal processing, and analysis. The leadership of NightDragon and Center15 in this Series E round, alongside our lending partners, reflects confidence in our strategy and the value our capabilities bring to customers and partners worldwide."

"NightDragon is proud to continue our support of HawkEye 360's mission and growth strategy by leading this Series E round," said Dave DeWalt, Founder and CEO, NightDragon. "Since our initial investment, the company has made exceptional progress, and this funding and acquisition represent an important step in accelerating growth and advancing a platform we believe is essential to the market and to enduring national and global security." NightDragon has been an investor in HawkEye 360 since 2021.

"This financing supports the integration of ISA while maintaining a balanced and deliberate approach to scaling the business," said Craig Searle, CFO of HawkEye 360. "It strengthens our balance sheet and positions the company to execute on our operational priorities."

"Hawkeye360 is delivering mission-critical signals intelligence for the United States and its allies. We are proud to support Hawkeye 360's next phase of growth," said Ian Winer, Founder & CEO at Center15 Capital.

With the integration of ISA underway, HawkEye 360 continues to advance its platform and deliver signals intelligence capabilities that defense, government, and international partners rely on to support critical missions.

Cooley LLP represented HawkEye 360 in this transaction.

About HawkEye 360

HawkEye 360 is equipping defense, intelligence, and national security leaders with mission-critical signals intelligence to enable faster, better decision-making. By detecting, geolocating, and characterizing radio-frequency emissions worldwide, HawkEye 360 delivers trusted domain awareness and early-warning indicators to the US Government and allied partners. Our space-based collection, proprietary signal processing, and AI-powered analytics transform knowledge of RF spectrum into a strategic advantage. Proven by operational mission success, HawkEye 360 is redefining how signals intelligence strengthens national and global security.

Fintech leader Octane raises $100M in Series F Funding round to fuel record growth

Equity Financing to Drive Continued Growth, Innovation, and Market Expansion.

Octane®, the fintech revolutionizing the buying experience for the powersports industry, announced it has closed its Series F funding round of $100 million in equity capital. The raise includes new equity capital to be used for growth initiatives as well as amounts to be used for secondary share transfers.

The capital builds on Octane’s strong originations growth and enables the Company to further accelerate market penetration and deepen its product offering, positioning the Company even more favorably for long-term success. The Series F raise attracted a mix of returning and new investors; Valar Ventures led the round with participation from Upper90, Huntington Bank, Camping World and Good Sam, Holler-Classic, and others. Prior to the Series F, Octane had raised $242 million in total equity funding since inception, including its Series E, which closed in 2024.

“Building on our strong foundation, this capital allows us to move more quickly on key initiatives that will further differentiate us in existing markets and speed up our entrance into new ones,” said Jason Guss, CEO and Co-Founder of Octane. “We’re grateful to our existing investors for their continued support and belief in our vision, as well as to new investors for their partnership. We look forward to strengthening these relationships as we expand our offerings and unlock the full potential of financial products for merchants and consumers.”

“One of the investing lessons of the past two decades is that the best tech companies can compound for far longer than expected,” said James Fitzgerald, Founding Partner of Valar Ventures. “Octane’s unique offering supports dealers and OEMs with software and financing solutions unavailable elsewhere. We expect Octane to continue to take market share — both in its existing markets and in those it’s only begun to enter — for a very long time. We are excited to continue backing this team and to partner with them for another decade, or longer.”

“It’s been impressive to watch Octane’s execution in becoming a clear leader in the powersports market,” said Billy Libby, Managing Partner at Upper90. “Now the company is scaling its proprietary underwriting engine and end-to-end technology platform as it expands into new markets and helps dealers grow their profits and deliver better financing experiences to consumers. Few public or private companies are growing as rapidly — and profitably — as Octane, and we’re excited to be part of their continued growth.”

Thus far in 2025, Octane has launched a myriad of new products and technology enhancements, including groundbreaking updates for both merchants and consumers. Notably, Octane strengthened its industry-leading financing portal to provide even faster, easier customer acquisition and closing processes for merchants, helping them reach more buyers and increase profitability. At the same time, customers can access simplified payment options, expedited question resolution, and increased flexibility within the Customer Portal.

Since its founding in 2014, Octane has originated over $7 billion in loans through its in-house lender Roadrunner Financial®, Inc., issued more than $4.7 billion in asset-backed securities, and sold or committed to sell $3.3 billion of secured consumer loans since December 2023. The Company grew originations by more than 30% from Q3 2024 to Q3 2025 and is GAAP net income profitable. Octane works with 60 original equipment manufacturer (OEM) partner brands and serves markets worth a combined $150 billion with its innovative technology solutions and fast, easy financing experience.

Senior Vice President, Communications and People at Octane

Press@octane.co

Investor Relations:

IR@octane.co

Forbes and Lightmatter CEO talk light-based photonic compute and the future of AI infrastructure

Meet The Founder Betting On Light As The Future Of AI Chip Technology and Infrastructure.

Nicholas Harris, CEO of Lightmatter, sat down with Forbes to discuss building AI chip technology relying on light rather than electrical signals. Harris also discussed why the end of Moore's Law and the AI boom created the perfect moment for photonics, enabling massive acceleration in AI model training and increased data center efficiency.

- Addressing Bottlenecks: As AI chip processing power increases, the connections (interconnects) between chips become the primary limitation, causing GPUs to sit idle while waiting for data. Lightmatter's photonic solutions significantly increase bandwidth and reduce latency and power consumption compared to conventional copper interconnects.

- Photonic Interconnects: The company's core product is the Passage platform, a 3D-stacked silicon photonics engine that facilitates high-speed data transfer between processors. This technology uses light (photons) to move data, offering up to 100 times faster data movement between chips in some cases.

- Products & Integration:

  - Passage M1000: An active photonic interposer (a layer that AI chips sit atop) with record-breaking optical bandwidth for large AI model training.

  - Passage L200: A 3D co-packaged optics (CPO) chiplet that integrates directly with XPU (accelerator/processor) and switch designs for improved performance and energy efficiency.

  - Envise: A complete photonic AI accelerator platform designed to run AI computations with greater power efficiency than traditional electronic systems.

- Scalability & Partnerships: Lightmatter's technology enables large-scale AI clusters with up to millions of nodes. The company partners with major semiconductor and packaging firms like GlobalFoundries, Amkor, and ASE to ensure high-volume manufacturing and industry standardization. It is also a member of the UALink Consortium, which aims to standardize AI interconnect solutions.

- Higher Speed and Bandwidth: Light-based interconnects can transfer data at much faster speeds and higher densities than electrical signals, which is crucial for the massive datasets used in AI.

- Energy Efficiency: Photonic interconnects consume significantly less power, helping data centers manage their growing energy consumption and operating costs.

- Performance Scaling: By eliminating interconnect bottlenecks, Lightmatter enables faster training times for advanced AI models, allowing for the development of larger and more capable neural networks.

AlphaSense AI makes Inc.'s 2025 Best in Business list for a major year for AI innovation and enterprise adoption

Company recognized for leadership in "Best AI Implementation" and "Best in Innovation" categories, validating the impact of its enterprise AI workflow enhancements

AlphaSense, the AI platform redefining market intelligence for the business and financial world, today announced it has been named to Inc.'s 2025 Best in Business list in the "Best AI Implementation" and "Best in Innovation" categories. This recognition solidifies a record-breaking year of growth for the company, which recently surpassed $500 million in Annual Recurring Revenue (ARR), driven by accelerated adoption of AlphaSense's AI workflow capabilities now used by over 6,500 enterprises.

AlphaSense is pioneering how businesses in every industry integrate generative AI into critical workflows such as go-to-market planning, investment banking, M&A, investor relations, and competitive intelligence. The company's AI innovation is underscored by the rapid adoption of its Generative Search capabilities, which enable users to instantly find insights across 500 million documents with natural language queries. Generative Search understands industry-specific terminology, anticipates relevant questions, and delivers granularly cited, analyst-level insights in seconds.

These AI capabilities have since expanded to include sophisticated AI workflow agents that act like a team of trusted, domain-specific analysts to guide decision making.

The Inc. Best in Business award celebrates companies that have demonstrated the most impactful and tangible business wins of the year. This accolade is the latest of AlphaSense's notable industry recognitions this year, which include being named to the Forbes 2025 Cloud 100 and ranking No. 8 on the CNBC Disruptor 50.

"This recognition by Inc. and the AI advancements we've made this year validates the work we are doing to democratize access to elite market intelligence for our customers, with trustworthy AI at the helm," said Kiva Kolstein, President and Chief Revenue Officer of AlphaSense. "The companies winning in this new era aren't just experimenting with AI, they're using AI to earn trust and grow revenue. Our mission has never been more critical. We enable customers to shift from complexity to clarity, deliver intelligence that drives real impact, and empower users to move from insight to action before the competition."

AlphaSense's AI platform enhancements over the past year include Generative Search, Generative Grid, Deep Research, Financial Data and AI Agent Interviewer, demonstrating the company's evolution from AI search to fully automated, end-to-end AI workflows and addressing the growing need among enterprises for domain-specific AI that is trustworthy and scalable.

AlphaSense is accelerating its vision to deliver trusted intelligence at unprecedented speed. Upcoming enhancements will be focused on unlocking deeper insights across quantitative and qualitative data, automating complex workflows with customized agents, and generating decision-ready deliverables—from pitchbooks to newsletters—on-demand.

About AlphaSense

AlphaSense is the AI platform redefining market intelligence and workflow orchestration, trusted by thousands of leading organizations to drive faster, more confident decisions in business and finance. The platform combines domain-specific AI with a vast content universe of over 500 million premium business documents — including equity research, earnings calls, expert interviews, filings, news, and internal proprietary content. Purpose-built for speed, accuracy, and enterprise-grade security, AlphaSense helps teams extract critical insights, uncover market-moving trends, and automate complex workflows with high-quality outputs. With AI solutions like Generative Search, Generative Grid, and Deep Research, AlphaSense delivers the clarity and depth professionals need to navigate complexity and obtain accurate, real-time information quickly. For more information, visit www.alpha-sense.com.

Media Contact

Remi Duhe for AlphaSense

Email: media@alpha-sense.com