Lambda AI signs multibillion-dollar Microsoft agreement to deploy AI infrastructure powered by NVIDIA GPUs

“We’re in the middle of probably the largest technology buildout that we’ve ever seen,” said Lambda CEO Stephen Balaban. “The industry is going really well right now.”

- AI cloud startup Lambda announced a multibillion-dollar partnership with Microsoft to build AI infrastructure powered by Nvidia chips.

- The Nvidia-backed firm has had a relationship with Microsoft since 2018.

- Lambda provides cloud services and software for training and deploying AI models and rents out AI servers.

Lambda to deliver mission-critical AI cloud compute at scale under a multi-year contract.

Lambda, the Superintelligence Cloud, today announced a multibillion-dollar agreement with Microsoft to deploy AI infrastructure powered by tens of thousands of NVIDIA GPUs, including NVIDIA GB300 NVL72 systems.

The strategic collaboration represents a significant multi-year NVIDIA AI infrastructure deployment, expanding access to critical cloud-based accelerated computing resources.

“It’s great to watch the Microsoft and Lambda teams working together to deploy these massive AI supercomputers,” said Stephen Balaban, CEO of Lambda. “We’ve been working with Microsoft for more than eight years, and this is a phenomenal next step in our relationship.”

The agreement between Lambda and Microsoft highlights the significant growth in global demand for high-performance computing, driven by the surge in AI assistant use and enterprise adoption.

We believe Lambda’s push to deploy gigawatt-scale AI factories is supporting one of the most significant technological shifts in human history. This collaboration demonstrates Lambda’s position as a trusted at-scale partner for deploying compute infrastructure for the world’s leading AI products.

About Lambda

Lambda, The Superintelligence Cloud, builds gigawatt-scale AI factories for training and inference. From prototyping to serving billions of users in production, we build the underlying infrastructure that powers AI. Lambda was founded in 2012 by published AI engineers.

Lambda’s mission is to make compute as ubiquitous as electricity and give everyone in America the power of superintelligence. One person, One GPU.

Contacts

Lambda Media Contact

pr@lambda.ai

AI superintelligence cloud Lambda doubles down on Midwest expansion, to build AI factory in Kansas City

Site in Kansas City, MO, to welcome new jobs and more than 10,000 NVIDIA GPUs, with additional growth opportunities

Superintelligence Cloud Lambda announced plans to transform an unoccupied 2009-built facility in Kansas City, Missouri, into a state-of-the-art AI Factory.

This Kansas City deployment is part of Lambda's mission to build the infrastructure backbone for the Superintelligence era. Under the agreement, Lambda is planning to develop and operate the facility as the sole tenant. The site is expected to launch in early 2026 with 24MW of capacity, and the potential to scale up to more than 100MW in the future.

"Missouri is proud to welcome Lambda as they create new, high-quality jobs and strengthen our state's technology and innovation ecosystem," said Governor Mike Kehoe. "Their decision to grow here demonstrates the confidence that leading companies have in our people, our infrastructure, and our pro-business environment. It's been said that AI is the space race of our time, and we must win. Data centers are the future and critical to our continued ability to drive technological innovation, strengthen our economy, and safeguard our national security interests. Partnerships like this ensure Missouri remains at the forefront of America's winning strategy."

"Our Kansas City development perfectly embodies Lambda's strategy: a prime location for our customers, an accelerated deployment timeline, and an unwavering commitment to on-time delivery," said Ken Patchett, VP of Datacenter Infrastructure at Lambda. "We believe this success stems from completely rethinking how AI factories should be built and operated."

Building big, shipping fast

When the facility launches in early 2026, it will initially feature more than 10,000 NVIDIA Blackwell Ultra GPUs—a footprint expected to double over time. The supercomputer is dedicated to a single Lambda customer for large-scale AI training and inference, under a multi-year agreement.

"Today in Kansas City, we are building the infrastructure to capitalize on AI's boom," said Mayor Quinton Lucas. "An investment of this scale in the Northland highlights our city's strength in technology, innovation, and job creation, and brings an empty asset back to life through creative reuse."

"This investment from Lambda showcases the Kansas City region's ability to reimagine assets and attract transformative investment creatively," said Tim Cowden, President and CEO, Kansas City Area Development Council. "Data centers are critical to powering the innovation economy, and Kansas City wields the strength of infrastructure, reliable power, and a deep IT talent pool that continues to draw leading technology companies to the region."

The project enables Lambda to repurpose unused power and transform a formerly advanced data center into an AI-ready, future-proofed facility. The initial phase of the project is expected to involve upwards of half a billion dollars in investment, with several employees and contracted staff working directly on site to maintain the extensive equipment and machinery. Future phases at the site are still being evaluated and discussed with local partners.

"Lambda's investment in the Kansas City area emphasizes our state's growing strength in technology and innovation," said Michelle Hataway, Director of the Missouri Department of Economic Development. "DED is proud to support future-focused projects like this that enhance our workforce, drive sustainable growth across the region, and create opportunities for Missourians to prosper."

"Choosing Kansas City, Missouri, for a next-generation AI data center sends a clear message: Missouri is the tech leader in the center of the country," said Subash Alias, CEO of Missouri Partnership. "We applaud Lambda for building an AI factory in the heart of the U.S. This is a generational investment that will expand opportunity for Missourians and accelerate the digital economy."

This project was made possible by many local partners in Kansas City, including the State of Missouri, Missouri Department of Economic Development, Missouri Partnership, Kansas City Area Development Council (KCADC), Platte County EDC, City of Kansas City, Mo., Economic Development Corporation of Kansas City, Mo., Port KC, Evergy, Spire, KC Tech Council, Russell, Henderson Engineers, U.S. Engineering, and Capital Electric.

About Missouri Partnership

Missouri Partnership is a public-private economic development organization focused on attracting new jobs and investment to the state and promoting Missouri's business strengths. Since 2008, Missouri Partnership has worked with partners statewide to attract companies that have created 35,400+ new jobs, $1.8 billion+ in new annual payroll, and $8.4 billion+ in new capital investment. Some recent successful projects that led to major investment in Missouri include Accenture Federal Services LLC, American Foods Group, Casey's, Chewy, Inc., Google, James Hardie, Meta, Swift Prepared Foods, URBN, USDA, and Veterans United.

Lambda Press Contact | pr@lambdal.com

Groq AI infrastructure to power the HUMAIN One real-time AI operating system for enterprise

Groq's low latency inference enables HUMAIN to deliver natural, voice-driven computing at scale.

The leader in AI inference, Groq, announced that HUMAIN has selected Groq's AI inference infrastructure to power HUMAIN One, a new operating system built for the age of AI.

HUMAIN One replaces traditional applications with a voice-based interface that understands intent and completes tasks through intelligent agents. Built to help teams across HR, finance, productivity, and procurement, it makes everyday work feel more natural and connected.

Running a platform that responds to spoken intent in real time requires inference that can coordinate hundreds of AI agents with consistent speed and precision. Groq's inference architecture provides the low-latency performance needed to power that experience.

"What makes HUMAIN One possible is inference that keeps up with human thought," said Jonathan Ross, Groq Founder and CEO. "Groq provides the real-time speed and predictability required to turn spoken intent into immediate, intelligent action."

Groq's efficient, U.S.-built compute architecture allows HUMAIN to scale globally while reducing cost and energy per inference. Together, the two companies are defining a new model for enterprise computing where interaction with technology feels as natural as conversation.

"Groq delivers the performance and reliability needed to bring HUMAIN One to life," said Tareq Amin, CEO of HUMAIN. "Their technology allows us to operate in real time with the consistency our system depends on."

About Groq

Groq is the inference infrastructure that powers AI with the speed and cost it requires. Founded in 2016, the company created the LPU and GroqCloud to ensure compute is faster and more affordable. Today, Groq is a key part of the American AI Stack and trusted by more than two million developers and many of the world's leading Fortune 500 companies.

Groq Media Contact: pr-media@groq.com

About HUMAIN

HUMAIN, a PIF company, is a global artificial intelligence company delivering full-stack AI capabilities across four core areas - next generation data centers, hyper-performance infrastructure & cloud platforms, advanced AI Models, including one of the world's most advanced Arabic multimodal LLMs, and transformative AI Solutions that combine deep sector insight with real-world execution.

HUMAIN's end-to-end model serves both public and private sector organizations, unlocking exponential value across all industries, driving transformation and strengthening capabilities through human-AI synergies. With a growing portfolio of sector-specific AI products and a core mission to drive IP leadership and talent supremacy world-wide, HUMAIN is engineered for global competitiveness and national distinction.

Fintech leader LemFi partners with GCash to enable 94 million Filipinos to receive instant remittances.

LemFi has partnered with GCash, the Philippines’ leading finance app, to make cross-border payments and connections between overseas Filipinos and home easier.

LemFi has partnered with GCash, the Philippines’ #1 mobile wallet, to make remittances quicker, easier, and zero-fee. Filipinos in North America and Europe can now send money directly to their GCash wallets within the LemFi app, delivered in minutes at competitive exchange rates.

This partnership follows key achievements this year, including a Series B fundraise, the strategic acquisition of a money remittance license in Europe, and, more recently, the acquisition of Pillar, a credit fintech, to expand access to financial services for the broader diaspora community.

About GCash

GCash is widely regarded as the #1 finance mobile app in the Philippines, with over 94 million registered users worldwide. With expanded offerings in payments, savings, credit and insurance, GCash has become a vital tool for Filipinos at home and abroad, particularly with the introduction of its GCash Overseas service, which enables users to register using international phone numbers.

About The Global Partnership

LemFi, a leading financial technology platform serving Filipinos and other immigrants across North America, the United Kingdom, and Europe, announces its partnership with GCash, the Philippines’ renowned mobile wallet, which has 94 million active customers. This collaboration will enable Filipinos in North America and Europe (including the United Kingdom) to send money directly to their GCash wallets.

This partnership positions LemFi as one of GCash's official remittance partners in North America and Europe (including the United Kingdom). Users can load GCash wallets through the LemFi app. The integration provides Filipinos in the diaspora with a seamless and low-cost way to support their loved ones, whether paying bills, topping up mobile data, or covering everyday expenses.

“This is more than convenience—it’s connection,” said Paul Albano, GCash International General Manager. “Our kababayans abroad want speed and reliability. This partnership delivers both, while making financial support feel immediate and intentional.”

“As the largest mobile wallet in the Philippines, GCash plays a critical role in the financial lives of Filipinos,” said Patricia Estrella, Growth Manager at LemFi. “With this partnership, we’re giving the Filipino diaspora in North America, the United Kingdom, and the EU the tools to take care of their families and feel even closer to home.”

Through the LemFi app, “customers can deposit funds, converted automatically into their GCash wallet, at zero transfer fees, competitive exchange rates, no minimum transfer limits, and delivery in minutes,” added Raymund Abog, LemFi’s Head of Growth for South East Asia. “For millions of Filipinos using GCash, this partnership represents convenience, connection and a commitment to serve Filipinos wherever they are.”

Philip Daniel, LemFi’s Head of Global Expansion and Growth, emphasised the broader vision: “Our mission is to make international payments easier, quicker, and more inclusive. This partnership with GCash is a milestone for us, especially in connecting global Filipinos to trusted financial tools they already use. We are proud to support communities like those in North America, the UK, the EU, and soon, even more countries across the globe.”

This announcement was made at a community brunch in Toronto, hosted by LemFi and GCash. The event brought together 50 key Filipino community leaders, including organisers behind major cultural festivals, professionals across healthcare and finance, and long-time remitters.

As LemFi grows globally, its mission remains clear: To improve the financial life of the next generation of immigrants. This partnership is a significant step in delivering on that promise for the Filipino diaspora.

To learn more about LemFi and their work, please visit www.lemfi.com. For enquiries, contact legal@lemfi.com.

Ayoola Salako

LemFi

Ark Invest and BlackRock backed tokenization platform Securitize to go public via SPAC at $1.25 Billion valuation

Securitize, the Leading Tokenization Platform, to Become a Public Company at $1.25B Valuation via Business Combination with Cantor Equity Partners II (Nasdaq: CEPT)

- Establishes the first public securities-focused tokenization infrastructure company

- Industry-leading end-to-end tokenization platform with blue-chip institutional partnerships including BlackRock, Apollo, Hamilton Lane and VanEck

- Upsized $225 million in committed common stock PIPE financing led by new and existing blue-chip institutional investors, including Arche, Borderless Capital, Hanwha Investment & Securities, InterVest, and ParaFi Capital

- Transaction values Securitize at a $1.25 billion pre-money equity value, with existing Securitize equity holders rolling 100% of their interests into the combined company, including ARK Invest, BlackRock, and Morgan Stanley Investment Management

Securitize, Inc. ("Securitize" or the "Company"), the world's leading platform¹ for tokenizing real-world assets, and Cantor Equity Partners II, Inc. (Nasdaq: CEPT) ("CEPT"), a special purpose acquisition company sponsored by an affiliate of Cantor Fitzgerald, today announced that they have entered into a definitive business combination agreement through which Securitize will become a publicly-listed company. The Company will be uniquely positioned to participate in a $19 trillion TAM for tokenization of real-world assets.

The transaction values Securitize at a $1.25 billion pre-money equity value. Existing equity holders including ARK Invest, BlackRock, Blockchain Capital, Hamilton Lane, Jump Crypto, Morgan Stanley Investment Management and Tradeweb Markets will roll 100% of their interests into the combined company. The combined company will be renamed Securitize Corp. and its common stock is expected to trade on Nasdaq under the ticker symbol "SECZ".

In connection with the transaction, Securitize plans to tokenize its own equity, an industry first designed to demonstrate how the public company process and trading can move onchain.

"This is a defining moment for Securitize and for the future of finance," said Carlos Domingo, Co-Founder and CEO of Securitize. "We founded this company with a mission to democratize capital markets by making them more accessible, transparent, and efficient through tokenization. This is the next chapter in making financial markets operate at the speed of the internet and is another step in our mission to bring the next generation of finance onchain and tokenize the world."

"We believe that blockchain technology has massive potential to transform finance, and partnering with Securitize underscores our confidence in tokenization as a foundational force in the next era of capital markets," said Brandon Lutnick, Chairman and CEO of Cantor Fitzgerald and Chairman of Cantor Equity Partners II.

A Trusted Ecosystem for Tokenization

Securitize's platform powers the end-to-end relationship between issuers and investors, combining regulatory compliance, digital asset infrastructure, and broad ecosystem integrations across major blockchains, custodians, prime brokers, and DeFi protocols.

Key Investment Highlights:

- Trusted by the World's Leading Institutions – Partner to blue-chip financial institutions including BlackRock, Apollo, KKR, Hamilton Lane, and VanEck.

- Comprehensive and Regulated Stack – First platform with SEC-registered transfer agent, broker-dealer, ATS, investment advisor, and fund administration.

- Massive Addressable Market – Positioned to participate in a $19 trillion opportunity in tokenization across equities, fixed income, and alternative assets.

- Deep Ecosystem Integration – Securitize supports fifteen major blockchains, and is connected to leading DeFi protocols, stablecoin infrastructure, and digital custodians to enable secondary market liquidity.

Modernizing Capital Markets

Founded in November 2017, Securitize has built the most comprehensive and trusted infrastructure for tokenizing financial assets onchain. The company operates a fully regulated, end-to-end platform for the issuance, trading and servicing of tokenized securities. As the only vertically integrated tokenization provider with SEC-registered entities across a transfer agent, broker-dealer, alternative trading system (ATS), investment advisor and fund administration, Securitize uniquely enables a complete lifecycle for tokenized assets.

Today, Securitize has tokenized more than $4 billion in assets through partnerships with leading asset managers, including Apollo, BlackRock, Hamilton Lane, KKR, and VanEck. The firm's launch of KKR's Health Care Strategic Growth Fund II in 2022 marked the first time a major global investment manager tokenized a fund onchain, while BlackRock's BUIDL, tokenized by Securitize in 2024, became the largest tokenized real-world asset in the world.

Beyond institutional funds, Securitize has also pioneered the tokenization of equities, beginning with Exodus, the first U.S.-registered company to tokenize its common stock, and more recently, FG Nexus, a new framework for tokenizing stocks for publicly listed companies.

Transaction Overview

The proposed business combination values Securitize at a $1.25 billion pre-money equity value and is expected to deliver up to approximately $469 million² of gross proceeds to Securitize, consisting of:

- $225 million pursuant to a fully committed PIPE, anchored by new and existing blue-chip institutional investors including Arche, Borderless Capital, Hanwha Investment & Securities, InterVest, and ParaFi Capital

- $244 million of cash held in CEPT's trust account, assuming no redemptions
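
As a rough check on the figures above, the headline proceeds are the sum of the committed PIPE and the trust cash. The Python sketch below is illustrative only: the function name and the `redemption_rate` parameter are assumptions introduced here, not terms from the agreement, and actual SPAC redemptions depend on per-share mechanics that this release does not describe.

```python
# Figures in millions of US dollars, taken from the transaction overview.
PIPE_COMMITTED = 225        # fully committed PIPE
TRUST_CASH = 244            # cash in CEPT's trust account, assuming no redemptions
EXCLUDED_INCREMENTAL = 50   # footnote 2: $30M funded Oct 2025 + $20M expected at closing


def gross_proceeds(redemption_rate: float = 0.0) -> float:
    """Gross proceeds in $M for a hypothetical fraction of trust cash redeemed.

    redemption_rate is an illustrative assumption: 0.0 reproduces the
    "assuming no redemptions" case, 1.0 leaves only the PIPE.
    """
    if not 0.0 <= redemption_rate <= 1.0:
        raise ValueError("redemption_rate must be between 0 and 1")
    return PIPE_COMMITTED + TRUST_CASH * (1.0 - redemption_rate)


if __name__ == "__main__":
    print(f"No redemptions: ${gross_proceeds():.0f}M")
    print(f"Full redemptions: ${gross_proceeds(1.0):.0f}M")
```

With no redemptions the sketch reproduces the stated maximum of approximately $469 million; the $50 million in incremental proceeds described in the footnote is tracked separately and is not included in that total.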

Net proceeds from the transaction will strengthen the company's balance sheet with significant capital, enabling Securitize to accelerate its commercial roadmap, scale customer adoption, and unlock key growth opportunities. No existing Securitize shareholders will sell any shares or receive cash consideration as part of the transaction, and all existing Securitize shareholders will be locked up at close of the transaction. The transaction has been unanimously approved by both companies' boards of directors and is expected to close in the first half of 2026, subject to customary closing conditions and regulatory approvals.

¹ https://app.rwa.xyz/platforms (Oct 2025)

² Amounts exclude $50 million in incremental gross proceeds, of which $30 million was funded in October 2025 and $20 million is expected to be funded at closing under an existing option issued in a prior funding round.

Advisors

Citigroup Global Markets Inc. ("Citi") is acting as financial and capital markets advisor to Securitize. Cantor Fitzgerald & Co. ("CF&Co.") is acting as financial and capital markets advisor to CEPT. Citi and CF&Co. are acting as co-placement agents for the PIPE.

Davis Polk & Wardwell LLP is serving as legal advisor to Securitize. Hughes Hubbard & Reed LLP is serving as legal advisor to CEPT. Skadden, Arps, Slate, Meagher & Flom LLP is serving as legal advisor to Citi and CF&Co in connection with their roles as co-placement agents.

About Securitize

Securitize is tokenizing the world with $4B+ AUM (as of Oct 2025) through tokenized funds and equities in partnership with top-tier asset managers, such as Apollo, BlackRock, Hamilton Lane, KKR, VanEck and others. Securitize, through its subsidiaries, is an SEC-registered broker-dealer, digital transfer agent, fund administrator and operator of an SEC-regulated Alternative Trading System (ATS). Securitize has also been recognized as a 2025 Forbes Top 50 Fintech company.

About Cantor Equity Partners II

Cantor Equity Partners II, Inc. (Nasdaq: CEPT) is a special purpose acquisition company formed for the purpose of effecting a merger, share exchange, asset acquisition, share purchase, reorganization, or other similar business combination with one or more businesses or entities. CEPT is led by Chairman and Chief Executive Officer Brandon Lutnick and sponsored by an affiliate of Cantor Fitzgerald.

About Cantor Fitzgerald, L.P.

Cantor Fitzgerald, with more than 14,000 employees, is a leading global financial services and real estate services holding company and a proven and resilient leader for more than 79 years. Its diverse group of global companies provides a wide range of products and services, including investment banking, asset and investment management, capital markets, prime services, research, digital assets, data, financial and commodities brokerage, trade execution, clearing, settlement, advisory, financial technology, custodial, commercial real estate advisory and servicing, and more.

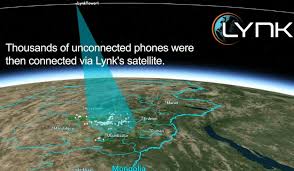

Lynk Global and Omnispace announce their merger to deliver next-generation global Direct-to-Device connectivity

SES, a global leader in space solutions, will continue as a key strategic partner to accelerate the delivery of powerful, cost-effective global D2D services

Lynk Global and Omnispace today announced plans to merge to deliver a comprehensive direct-to-device (D2D) connectivity solution, bridging the gap between today's satellite and terrestrial networks. Following the merger, SES will become a major strategic shareholder, facilitating a robust deployment of D2D and IoT services for mobile network operator (MNO), enterprise and government customers as part of a multi-orbit, multi-spectrum network architecture.

The combined entity will leverage Omnispace's 60 MHz of globally coordinated S-band spectrum and its high-priority filings with the International Telecommunication Union (ITU), optimized for D2D services. Omnispace's licensed mobile satellite spectrum is compliant with 3GPP standards for non-terrestrial networks (NTN), and adheres to national regulatory frameworks. It includes the largest S-band market access footprint, reaching over 1 billion people across the Americas, Europe, Africa and Asia. This foundation enables accelerated global deployment and scalable service delivery.

The combination will benefit from Lynk's patented, proven, low-cost, multi-spectrum satellite technology platform. Lynk's technology enables backward-compatible satellite-delivered mobile voice and messaging services to more than 7 billion smartphones and IoT devices and will leverage the S-band to offer a step-change in its data, voice and messaging services to new smartphones and IoT devices, including automotive platforms. Lynk's relationships with over 50 MNO customers across more than 50 countries will see significant benefit from the enhanced D2D offering.

"We now have the right mix of technology, spectrum and leadership to extend mobile connectivity where and when it's needed most," said Ramu Potarazu, CEO, Lynk. "This merger will enable us to accelerate our efforts in delivering seamless, reliable messaging, voice and data services – serving MNOs, as well as consumer, commercial and industrial vehicles, and government and utility sectors worldwide."

"This merger unlocks the full potential of our global S-band spectrum assets and positions us at the forefront of D2D," said Ram Viswanathan, President and CEO, Omnispace. "By combining Omnispace's spectrum portfolio with Lynk's innovative technology, we're creating a powerful platform for scalable, cost-effective global D2D that will serve the immediate connectivity needs of customers and has the spectrum to enhance capacity over time."

SES, a current investor in both companies, will deepen its partnership profile following the merger, providing access to its multi-orbit network and globally deployed ground infrastructure. SES will also support the engineering, operations and regulatory needs of the combined entity. The partnership will enable SES to enhance current services for its customers around the world, including those in the mobile telecom, automotive and government sectors.

"We see enormous opportunities in D2D and IoT connectivity," said Adel Al-Saleh, CEO, SES. "The planned combination of Lynk and Omnispace will offer SES access to new LEO capabilities that align with our strategy to diversify into this high-growth segment. This merger pairs an industry-leading global spectrum portfolio with a disruptive cost-effective satellite technology platform – accelerating deployment and delivering significant value to our commercial and government customers."

The transaction is expected to close late this year or early next year, subject to customary approvals and closing conditions. Upon closing, Ramu Potarazu will serve as the Chief Executive Officer and Ram Viswanathan will serve as the Chief Strategy Officer of the new entity.

About Lynk

Lynk is a patented, proven, and commercially-licensed satellite-direct-to-standard-mobile-phone system. Lynk's technology enables MNOs to provide their subscribers with connections from space for their unmodified mobile devices, enabling messaging, voice and data services designed for both commercial and government applications. Lynk's technology has been tested and proven on all seven continents, and the Company is partnered with over 50 MNOs and has commercial contracts to deliver services to over 50 countries.

Lynk Media: Amy Mehlman, amy@lynk.world

Learn more at: www.lynk.world

About Omnispace, LLC

Headquartered in the Washington D.C. area, and founded by veteran telecommunications and satellite industry executives, Omnispace is redefining mobile connectivity for the 21st century. By leveraging 5G technologies, the company is combining the global footprint of a non-geostationary satellite constellation with the mobile networks of the world's leading telecom companies to bring an interoperable "one network" connectivity to users and IoT devices anywhere on the globe.

Omnispace Media: Marie Knowles, mknowles@omnispace.com

Learn more at: www.omnispace.com

About SES

At SES, we believe that space has the power to make a difference. That's why we design space solutions that help governments protect, businesses grow, and people stay connected—no matter where they are. With integrated multi-orbit satellites and our global terrestrial network, we deliver resilient, seamless connectivity and the highest quality video content to those shaping what's next. Following our Intelsat acquisition, we now offer more than 100 years of combined global industry leadership—backed by a track record of bringing innovation "firsts" to market. As a trusted partner to customers and the global space ecosystem, SES is driving impact that goes far beyond coverage. The company is headquartered in Luxembourg and listed on the Paris and Luxembourg stock exchanges (Ticker: SESG).

SES Media: Steve Lott, steven.lott@ses.com

Learn more at: www.ses.com

Groq and IBM partner to accelerate enterprise AI deployment and workflows with speed and scale

Partnership aims to deliver faster agentic AI capabilities through IBM watsonx Orchestrate and Groq technology, enabling enterprise clients to take immediate action on complex workflows

IBM (NYSE: IBM) and Groq today announced a strategic go-to-market and technology partnership designed to give clients immediate access to Groq's inference technology, GroqCloud, on watsonx Orchestrate – providing clients high-speed AI inference capabilities at a cost that helps accelerate agentic AI deployment. As part of the partnership, Groq and IBM plan to integrate and enhance Red Hat open source vLLM technology with Groq's LPU architecture. IBM Granite models are also planned to be supported on GroqCloud for IBM clients.

Enterprises moving AI agents from pilot to production still face challenges with speed, cost, and reliability, especially in mission-critical sectors like healthcare, finance, government, retail, and manufacturing. This partnership combines Groq's inference speed, cost efficiency, and access to the latest open-source models with IBM's agentic AI orchestration to deliver the infrastructure needed to help enterprises scale.

Powered by its custom LPU, GroqCloud delivers over 5X faster and more cost-efficient inference than traditional GPU systems. The result is consistently low latency and dependable performance, even as workloads scale globally. This is especially powerful for agentic AI in regulated industries.

For example, IBM's healthcare clients receive thousands of complex patient questions simultaneously. With Groq, IBM's AI agents can analyze information in real-time and deliver accurate answers immediately to enhance customer experiences and allow organizations to make faster, smarter decisions.

This technology is also being applied in non-regulated industries. IBM clients across retail and consumer packaged goods are using Groq for HR agents to help enhance automation of HR processes and increase employee productivity.

"Many large enterprise organizations have a range of options with AI inferencing when they're experimenting, but when they want to go into production, they must ensure complex workflows can be deployed successfully to ensure high-quality experiences," said Rob Thomas, SVP, Software and Chief Commercial Officer at IBM. "Our partnership with Groq underscores IBM's commitment to providing clients with the most advanced technologies to achieve AI deployment and drive business value."

"With Groq's speed and IBM's enterprise expertise, we're making agentic AI real for business. Together, we're enabling organizations to unlock the full potential of AI-driven responses with the performance needed to scale," said Jonathan Ross, CEO & Founder at Groq. "Beyond speed and resilience, this partnership is about transforming how enterprises work with AI, moving from experimentation to enterprise-wide adoption with confidence, and opening the door to new patterns where AI can act instantly and learn continuously."

IBM will offer access to GroqCloud's capabilities starting immediately, and the joint teams will focus on delivering capabilities to IBM clients, including:

- High speed and high-performance inference that unlocks the full potential of AI models and agentic AI, powering use cases such as customer care, employee support and productivity enhancement.

- Security and privacy-focused AI deployment designed to support the most stringent regulatory and security requirements, enabling effective execution of complex workflows.

- Seamless integration with IBM's agentic product, watsonx Orchestrate, providing clients flexibility to adopt purpose-built agentic patterns tailored to diverse use cases.

The partnership also plans to integrate and enhance Red Hat open source vLLM technology with Groq's LPU architecture to offer different approaches to common AI challenges developers face during inference. The solution is expected to enable watsonx to leverage capabilities in a familiar way and let customers stay in their preferred tools while accelerating inference with GroqCloud. This integration will address key AI developer needs, including inference orchestration, load balancing, and hardware acceleration, ultimately streamlining the inference process.

Together, IBM and Groq provide enhanced access to the full potential of enterprise AI, one that is fast, intelligent, and built for real-world impact.

Statements regarding IBM's and Groq's future direction and intent are subject to change or withdrawal without notice, and represent goals and objectives only.

About IBM

IBM is a leading provider of global hybrid cloud and AI, and consulting expertise. We help clients in more than 175 countries capitalize on insights from their data, streamline business processes, reduce costs, and gain a competitive edge in their industries. Thousands of governments and corporate entities in critical infrastructure areas such as financial services, telecommunications and healthcare rely on IBM's hybrid cloud platform and Red Hat OpenShift to effect their digital transformations quickly, efficiently, and securely. IBM's breakthrough innovations in AI, quantum computing, industry-specific cloud solutions and consulting deliver open and flexible options to our clients. All of this is backed by IBM's long-standing commitment to trust, transparency, responsibility, inclusivity, and service. Visit www.ibm.com for more information.

About Groq

Groq is the inference infrastructure powering AI with the speed and cost it requires. Founded in 2016, Groq developed the LPU and GroqCloud to make compute faster and more affordable. Today, Groq is trusted by over two million developers and teams worldwide and is a core part of the American AI Stack.

Media Contact:

Elizabeth Brophy

elizabeth.brophy@ibm.com

Introducing AlphaSense AI's new Financial Data suite of tools for integrated quantitative and qualitative market intelligence

Unified research platform combines premium qualitative content, comprehensive quantitative datasets, and domain-specific generative AI

AlphaSense, the AI platform redefining market intelligence for the business and financial world, today announced the launch of Financial Data, its newest innovation that provides financial and corporate professionals with a unified view of both quantitative analysis and qualitative research insights.

Announced at AlphaSummit, the company’s inaugural customer conference, Financial Data is a suite of structured datasets and purpose-built tools, orchestrated by domain-specific AI to meet the accuracy and trust requirements of high-stakes financial and business workflows. AlphaSense applies more than a decade of expertise in financial language and workflows to deliver AI that is precise, transparent, and decision-ready. According to Gartner®, “By 2027, 35% of agentic AI will succeed in scaling because of investment in domain-specific reasoning, up from 0% in 2024.”

Financial Data eliminates frequent pain points in market intelligence among private equity and venture capital firms and corporate teams by unifying every major market perspective in one platform. It also enables entirely new agentic workflows that accelerate deal sourcing, sector analysis, valuation modeling, and investment decisions. AlphaSense now returns more complete, trusted answers in Generative Search and Deep Research by blending structured financials, company KPIs, and transactions data with proprietary qualitative content such as expert transcripts, broker research, corporate filings, news, valuations, and funding rounds.

By uniting structured financial information with unstructured proprietary business content in a single workflow, customers gain more complete answers and make faster, better-informed decisions. Simple natural language prompts allow users to move at market speed, providing a 360-degree analysis of a company and surfacing insights before the competition.

“AlphaSense is the first domain-specific GenAI platform for quantitative and qualitative market intelligence, which delivers a huge advantage to our customers,” said Jack Kokko, Founder and CEO at AlphaSense. “With Financial Data, everything a finance or corporate professional needs to understand about a company lives seamlessly in one place, ready to discover the instant you type your prompt or a company name you want to research.”

With AlphaSense's intuitive interface led by Generative Search, users get structured, auditable financial data as part of their natural language queries. Critically, as with all AlphaSense research, every insight remains fully source-linked, ensuring transparency and confident decision making.

“The ability to rapidly synthesize financial data with market intelligence will ultimately help strengthen our decision-making processes,” said Rajeev Samuel, Director of Corporate Strategy and Operations at NetApp. “Starting with verified, trusted intelligence helps us quickly and thoroughly evaluate markets, emerging technologies, and strategic opportunities. The AlphaSense platform helps us meet our research needs more easily, through an integrated experience.”

Financial professionals can quickly create:

- AI investment memos: one-click company profiles, investment thesis development, and market analysis essential for investment capital preparation and limited partner updates

- Deal flow dashboard: track funding activity, valuation trends, and strategic developments across investment focus areas

- Public and private company intelligence: complete financial statements, valuation multiples, funding rounds, M&A history, and workforce data presented in a single, clean framework for public companies, and hard-to-find revenue metrics, funding rounds, and expert perspectives for unparalleled visibility into private companies

- Specialized deep-dive views: dedicated interfaces for expert transcripts, broker research, and SEC filings

Core Financial Data components include:

- Workflow-native design: built by industry insiders for specific research workflows like company and deal screening, comp tables, and due diligence

- Canalyst models: 4,500+ ready-to-use models with detailed financials, operating metrics, and segment breakdowns

- Integrated workflows: move seamlessly from screening to deep qualitative research across 300M+ documents

- Industry comps: 115+ pre-built sector comp sets with KPIs curated by equity analysts for instant benchmarking

- Historical financials and consensus: coverage across 17,000+ companies with citations to take users to Company Profile Financials for verification

- Company-specific KPIs: 4,000+ companies with sector-specific metrics like unit economics, margins, and average revenue per user. KPIs become answerable in Gen Search and auditable via Company Profiles

- M&A transactions: 950,000+ deals accessible with a Deal Intelligence Agent capable of producing one-click, rationale-rich summaries from citations

- Funding rounds: 685,000 private funding rounds used to enrich company backgrounds, peer sets, and market maps

- Market data: global equities with intraday pricing for quick context in Gen Search answers

- Workforce data: hiring velocity and role mix add context to private company and international signals where financials are sparse

About AlphaSense

AlphaSense is the AI platform redefining market intelligence and workflow orchestration, trusted by thousands of leading organizations to drive faster, more confident decisions in business and finance. The platform combines domain-specific AI with a vast content universe of over 500 million premium business documents, including equity research, earnings calls, expert interviews, filings, news, and internal proprietary content. Purpose-built for speed, accuracy, and enterprise-grade security, AlphaSense helps teams extract critical insights, uncover market-moving trends, and automate complex workflows with high-quality outputs. With AI solutions like Generative Search, Generative Grid, and Deep Research, AlphaSense delivers the clarity and depth professionals need to navigate complexity and obtain accurate, real-time information quickly. For more information, visit www.alpha-sense.com.

Media Contact

Pete Daly for AlphaSense

Email: media@alpha-sense.com

Lendflow Launches New AI Automation Suite for Embedded Lending

We’re thrilled to announce the launch of Lendflow's Automate, a powerful suite of AI-driven operational agents designed to help lenders scale without the growing pains.

From 24/7 customer engagement to instant document analysis and automated risk scoring, our agents work alongside your team like high-performing digital colleagues, streamlining workflows, lowering costs, and accelerating decision-making.

With over 50 specialized AI agents already in production, we’re helping lenders:

✅ Maximize conversion

✅ Reduce operational overhead

✅ Accelerate time-to-funding

'Lendflow Automate' to power the future of lending with AI-driven operational agents.

Lendflow, a leader in embedded lending infrastructure, announced the official launch of Lendflow Automate, a suite of AI-powered agents designed to optimize workforce efficiency and transform how lending operations are executed.

The announcement was made at Ai4, America’s largest AI event. Lendflow founder and CEO, Jon Fry, will join leaders from Chime, Mastercard, and Deloitte for a live panel, “Leveraging AI to Drive Value,” on August 12 at the event.

By combining automation with advanced AI, Lendflow Automate provides partners with an always-on, digital workforce to streamline operations, lower costs, and accelerate decision-making, helping them “do more with less.”

“Lendflow Automate is about enabling scale without the growing pains,” said Fry. “These AI agents operate like high-performing assistants to your top team members. Automate will make teams more productive with agents that are highly specialized and built to move deals forward - augmenting teams and making them more productive.”

At the heart of this initiative is a new class of AI Operational Agents that engage with customers across voice, text, email, and chat. These agents provide lenders with a fully autonomous, 24/7 digital workforce that maximizes conversion, accelerates time-to-funding, and reduces cost per lead. Lendflow has also integrated these tools internally to optimize team communications, currently saving over 500 hours of talk-time per week.

Many of Lendflow’s customers have already adopted Lendflow Automate and have been able to increase conversions while simultaneously lowering costs.

Always-On Engagement: Lendflow’s AI Operational Agents

Lendflow’s communication-focused agents are configurable, multi-channel, and designed to support every stage of the lending lifecycle. Each agent follows a precise cadence of outreach using voice calls, SMS, and email to increase applicant engagement, reduce drop-off, and re-engage dormant leads. What truly sets these agents apart is their evolving nature: with every interaction, they absorb new insights and refine their behavior. This continual learning loop fuels more accurate context handling and sharpens their responsiveness.

Key agents include:

- Application Walkthrough Assistant

Reconnects with users who abandoned applications to help them complete the process, upload documents, and answer questions.

- Schedule Meeting Assistant

Schedules meetings between applicants and funding managers via a mix of personalized voice calls, emails, and text messages, engaging users 47 times across 8 days.

- Document Collection Assistant

Collects required documentation from applicants with consistent, supportive communication, ensuring nothing slips through the cracks.

- Dead Deal Assistant

Revives declined or inactive applicants with a long-term strategy to keep funding opportunities alive.

- Renewal Outreach Assistant

Proactively reconnects with funded merchants to explore add-on deals or new financing - executing a year-long, personalized follow-up strategy.

These agents operate autonomously, scale infinitely, and free up human teams to focus on higher-value work - delivering real operational ROI for lenders and fintech partners.

Supporting Agents for Data & Risk Intelligence

In addition to its communication suite, Lendflow Automate includes several specialized AI agents to handle classification, document processing, and risk scoring:

- Industry Map Agent

Instantly classifies businesses with accurate NAICS and SIC codes to streamline risk segmentation. It supports bulk uploads and has delivered up to 100% accuracy for lenders.

- Doc Analyzer

Extracts structured data from financial documents - like bank statements, debt forms and tax returns - cutting manual effort by up to 70%, removing errors and accelerating underwriting.

- Inbox Automation Agent

With a hosted inbox, AI will automatically identify email senders, classify emails, download attachments, create deals and remove manual workflows.

Lendflow Automate: AI-Powered Workforce Optimization

With more than 50 context-aware AI agents in production, Lendflow Automate serves as an intelligent execution layer that removes manual bottlenecks and drives operational efficiency at scale. Each agent is purpose-built to deliver real business outcomes, from faster time-to-close to lower operational costs.

To explore the full Lendflow Automate suite, visit www.lendflow.com.

About Lendflow

Lendflow powers embedded lending infrastructure for fintechs, SaaS platforms, and lenders. With APIs, orchestration tools, and AI automation, Lendflow helps companies launch and scale lending products while streamlining underwriting, compliance, and operations.

Contacts

Media Contact:

Sarah Weaver

pr@lendflow.com

Capstack Technologies’ partnership with Jack Henry serving more than 3,000 banks and credit unions

Announcing Capstack Technologies’ partnership with Jack Henry, a leading provider of technology solutions serving more than 3,000 banks and credit unions. Together, we’re bringing the Capstack loan marketplace, analytics, and loan servicing to Jack Henry community banks and credit unions nationwide.

This is a major step forward for community and regional banking.

In less than a year, Capstack has grown to more than $1B in loans currently available on the marketplace, with 200+ banks and credit unions connected directly and over $300 million in loans in active negotiations.

Capstack has proven that loan sales don’t have to take weeks of broker calls, cost margin, or risk borrower relationships. With Capstack, banks and credit unions are moving faster, unlocking capital, and keeping lending strong, together on one platform.

Now, with Jack Henry, that same opportunity is reaching thousands more banks and credit unions. Jack Henry powers the core banking systems that community banks depend on every day.

By combining their scale and trusted infrastructure with Capstack’s marketplace, analytics, and servicing, we’re helping banks diversify portfolios and unlock capital without leaving the systems they already rely on.

As part of the partnership, all Jack Henry customers will receive three months of complimentary access to Capstack Analytics to evaluate counterparties and benchmark performance against similar banks for board and regulatory reviews.