The AI tech IPO window is reopening as Anthropic and OpenAI prepare to go public in 2026

Anthropic has hired Wilson Sonsini to prepare for a potential 2026 IPO while simultaneously raising a major private round (Bloomberg, The Information).

OpenAI is taking similar steps: the company has reportedly begun internal preparation for a 2026 listing, including discussions with banks, legal advisors, and early examinations of financial disclosures (Reuters, FT, Bloomberg).

These are concrete moves from two of the most capital-intensive AI companies — not speculation.

Private Capital Has Hit Its Limits

- AI scale-up looks more like energy infrastructure than software.

- IDC and Gartner estimate AI-related data-center capex in the hundreds of billions for 2025.

- Bloomberg Intelligence projects multi-trillion-dollar cumulative AI infra investment through 2030.

OpenAI and Anthropic both face heavy compute and power requirements. Private rounds alone cannot fund frontier model development at this scale. This is the structural reason top AI firms are preparing public filings.

IPO Activity Is Already Back

The US IPO market — frozen in 2022–2023 — has materially reopened:

- IPO volume in Q1–Q3 2025 nearly matched the entire year of 2024 (Renaissance Capital).

- ServiceTitan surged more than 40% on its December debut (WSJ).

- Anthropic and OpenAI are both advancing IPO groundwork for 2026 (Bloomberg, Reuters, FT).

This activity shows a functioning pipeline, not a hypothetical one.

Why AI Is Driving the Shift to Public Markets

Major tech companies continue massive infrastructure expansion:

- AI capex for 2025 is measured in the hundreds of billions (IDC, Gartner).

- OpenAI’s annualized revenue has grown sharply, but the company still operates with substantial losses due to compute costs (FT).

- Microsoft has paused or slowed select data-center builds, signalling early supply constraints (Bloomberg).

These pressures create a simple reality: only public markets can supply capital at the required scale.

Risks the Market Is Closely Watching

Monetization: OpenAI, Anthropic, and others show strong revenue growth but remain capital-intensive and unprofitable (FT).

Infrastructure bottlenecks: GPU availability, power constraints, and paused hyperscaler projects (Bloomberg).

Macro sensitivity: IPO activity reacts immediately to liquidity conditions; any broad market shock could slow issuance.

These risks are real and visible in filings, analyst notes, and infrastructure reports.

Bottom Line

Between Anthropic’s IPO preparation and OpenAI’s internal listing work, the direction of travel is clear:

late-stage AI companies are returning to public markets because they have to.

With IPO activity rising, new listings debuting strongly, and infrastructure spending needs at unprecedented levels, the tech financing environment has already shifted.

The IPO window isn’t “about to open.”

It’s open.

Heather Planishek joins the Lambda superintelligence cloud team as Chief Financial Officer

A big next step toward an IPO and the public markets for Lambda and the AI industry. Today, Heather Planishek joins Lambda as Chief Financial Officer. Most recently, she served as Chief Operating and Financial Officer at Tines, the intelligent workflow platform, and she has been Lambda's Audit Chair since July 2025. An industry veteran, Heather brings deep financial and operational expertise, along with close knowledge of the company, to our leadership team as we accelerate the deployment of AI factories to meet demand from hyperscalers, enterprises, and frontier labs building superintelligence.

Lambda, the Superintelligence Cloud, announced the appointment of Heather Planishek as Chief Financial Officer. Planishek brings deep experience scaling high-growth technology companies and will lead Lambda’s global finance organization as the company continues to expand its infrastructure footprint and operations.

Planishek most recently served as Chief Operating and Financial Officer at Tines, the intelligent workflow platform. She previously served as Chief Accounting Officer at Palantir Technologies Inc. (NASDAQ: PLTR), where she helped guide the company through a period of significant growth and its transition to the public markets. Earlier in her career, she held key leadership roles at Hewlett Packard Enterprise and Ernst & Young, building extensive expertise in enterprise-scale finance, operations, and governance. Planishek joined Lambda’s Board of Directors in July of this year, and as part of the transition, will step down from her board position.

“It’s awesome to have Heather join as our CFO,” said Stephen Balaban, co-founder and CEO of Lambda. “I’ve gotten to know her through our work together on the board, and it’s great to have her take on an executive leadership role at Lambda.”

In her new position, Planishek will oversee Lambda’s financial strategy, planning, accounting, treasury, investor relations, and business systems. Her appointment strengthens the company’s leadership team as Lambda continues to serve some of the world’s leading AI labs and largest hyperscalers, as well as tens of thousands of AI developers across research, startups, and enterprise.

“Lambda sits at the center of one of the most important shifts in technology, with infrastructure that I believe is rapidly becoming essential for AI at scale,” said Planishek. “I’m excited to join the leadership team and help strengthen the financial and operational foundation that will support Lambda’s continued growth and long-term market leadership.”

About Lambda

Lambda, The Superintelligence Cloud, is a leader in AI cloud infrastructure serving tens of thousands of customers.

Founded in 2012 by machine learning engineers who published at NeurIPS and ICCV, Lambda builds supercomputers for AI training and inference.

Our customers range from AI researchers to enterprises and hyperscalers.

Lambda’s mission is to make compute as ubiquitous as electricity and give everyone the power of superintelligence. One person, one GPU.

Contacts

Lambda Media Contact

pr@lambda.ai

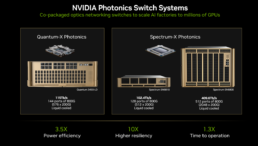

Lambda revolutionizes light-based AI infrastructure with NVIDIA Quantum‑X Photonics for next-gen AI factories

Frontier AI training and inference now operate at unprecedented scale. NVIDIA says its new co-packaged optics networking delivers 3.5x higher power efficiency and 10x greater resiliency for large-scale GPU clusters.

Lambda, the Superintelligence Cloud, today announced it is among the first AI infrastructure providers to integrate NVIDIA's silicon photonics–based networking.

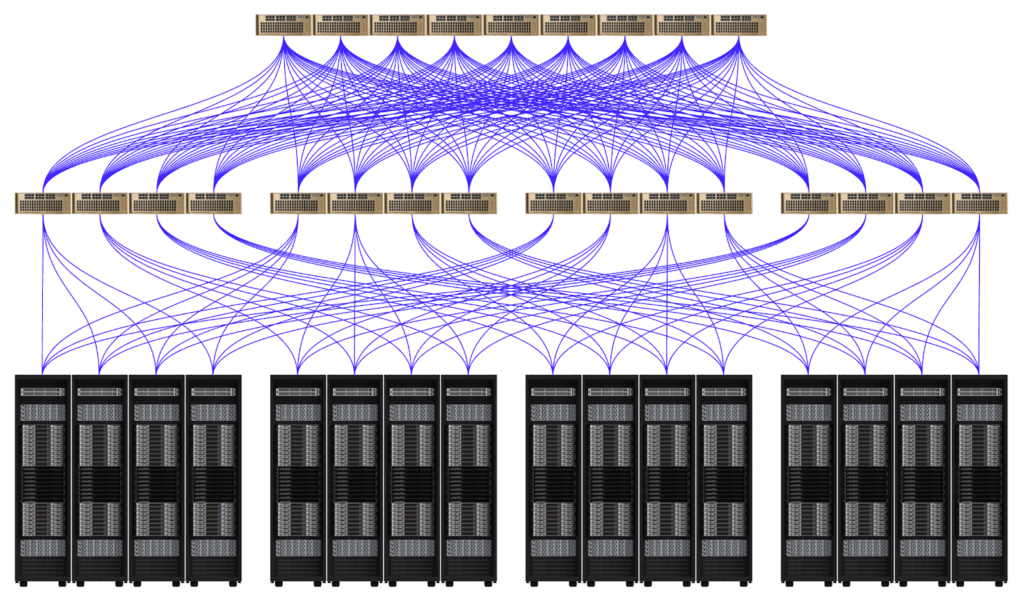

The shift to co-packaged optics (CPO) addresses a critical bottleneck in AI infrastructure. As AI models now train on hundreds of thousands of GPUs and beyond, the network connecting them has become as important as the GPUs themselves. Traditional networking approaches are not keeping pace with this scale.

Why CPO Matters for AI Compute Networks

NVIDIA Quantum-X Photonics InfiniBand and NVIDIA Spectrum-X Photonics Ethernet switches use co-packaged optics (CPO) with integrated silicon photonics to provide the most advanced networking solution for massive-scale AI infrastructure. CPO addresses the demands and constraints of GPU clusters across multiple vectors:

- Lower power consumption: Integrating the silicon photonic optical engine directly next to the switch ASIC eliminates the need for pluggable transceivers with active components that draw additional power. At launch, NVIDIA cited a 3.5x improvement in power efficiency over traditional pluggable networks.

- Increased reliability and uptime: Fewer discrete optical transceiver modules, among the highest-failure-rate components in a cluster, mean fewer potential failure points. NVIDIA cites 10x higher resilience and 5x longer AI application runtime without interruption over traditional pluggable networks.

- Lower latency communication: Placing optical conversion next to the switch ASIC minimizes electrical trace lengths. This shorter, simpler path for data and electro-optical conversion provides lower latency than traditional pluggable networks.

- Faster deployment at scale: Fewer separate components, simplified optics cabling, and fewer service points mean that large-scale clusters can be deployed, provisioned, and serviced more quickly. NVIDIA cites 1.3x faster time to operation versus traditional pluggable networks.
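To make the cited multipliers concrete, here is a minimal Python sketch that applies them to a baseline you supply. It makes two assumptions. First, "3.5x power efficiency" is read as 3.5x more bandwidth per watt, so the same traffic needs 1/3.5 of the optical-network power. Second, "1.3x faster time to operation" is read as dividing deployment time by 1.3. The function names and the 1,000 W / 91-day baseline are hypothetical, not NVIDIA or Lambda figures.

```python
def cpo_power_watts(pluggable_watts: float, efficiency_gain: float = 3.5) -> float:
    """Network power for the same bandwidth if bandwidth-per-watt rises by efficiency_gain.

    Assumption: NVIDIA's "3.5x" is interpreted as bandwidth per watt and applied
    to optics/network power only, not to GPU or total cluster power.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return pluggable_watts / efficiency_gain


def power_savings_fraction(efficiency_gain: float = 3.5) -> float:
    """Fraction of network power saved at equal bandwidth: 1 - 1/gain."""
    return 1.0 - 1.0 / efficiency_gain


def deployment_days(pluggable_days: float, speedup: float = 1.3) -> float:
    """Time to operation if bring-up is `speedup` times faster than the pluggable baseline."""
    return pluggable_days / speedup


if __name__ == "__main__":
    # Hypothetical baseline: 1,000 W of pluggable optics and a 91-day (13-week) bring-up.
    print(f"{cpo_power_watts(1000.0):.1f} W")       # 285.7 W
    print(f"{power_savings_fraction():.1%} saved")  # 71.4% saved
    print(f"{deployment_days(91.0):.0f} days")      # 70 days
```

Under this reading, the savings fraction depends only on the multiplier, so any baseline yields roughly 71% lower optical-network power. The effect on total cluster power is likely much smaller, since GPUs rather than optics account for most of a cluster's power budget.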

“NVIDIA Quantum‑X Photonics is the foundation for high-performance, resilient AI networks. It delivers superior power efficiency, improved signal integrity, and enables AI applications to run seamlessly in the world’s largest datacenters,” said Ken Patchett, VP of DC Infrastructure at Lambda. “By integrating optical components directly next to the network switches, we believe our customers can deploy AI infrastructure faster while significantly reducing operational costs – essential as we continue to scale to support frontier AI workloads.”

NVIDIA reports that NVIDIA Photonics delivers 3.5x better power efficiency, 5x longer sustained application runtime, and 10x greater resiliency than traditional pluggable transceivers. Co-packaged optics can provide increased compute per watt and enhanced network reliability, enabling faster model training and inference.

Built for the era of real-time AI, NVIDIA Photonics networking features a simplified design, resulting in fewer components to install and maintain. Lambda continues to provide scalable AI infrastructure, helping enterprises, research labs, and startups build multi-site, large-scale GPU AI factories.

“AI factories are a fundamentally new class of infrastructure – defined by their network architecture and purpose-built to generate intelligence at massive scale,” said Gilad Shainer, senior vice president of networking at NVIDIA. “By integrating silicon photonics directly into switches, NVIDIA Quantum-X silicon photonics networking switches enable the kind of scalable network fabric that makes massive-GPU AI factories possible.”

How Lambda Plans to Leverage It

Lambda is preparing its next-generation GPU clusters to integrate CPO networking using NVIDIA Quantum-X Photonics InfiniBand and Spectrum-X Photonics Ethernet switches. These advances in silicon-photonics switching are critical as we design massive-scale training and inference systems. For Lambda’s NVIDIA GB300 NVL72 and NVIDIA Vera Rubin NVL144 clusters, we are adopting CPO-based networks to deliver higher reliability and performance for customers while simplifying large-scale deployment operations and improving power efficiency.

This builds on Lambda's cooperation with NVIDIA. Lambda recently achieved NVIDIA Exemplar Cloud status, validating its ability to deliver consistent performance for large-scale training workloads on NVIDIA Hopper GPUs. Over the past decade, Lambda has earned six NVIDIA awards, affirming its position as a trusted collaborator in NVIDIA’s ecosystem.

Contacts

Lambda Media Contact

pr@lambdal.com

Archetype AI raises $35M Series A to scale deployment of physical agents to solve real-world problems

ALSO READ:

Archetype AI raises $35M for "physical" AI models - Axios

Imagine Physical Agents that reduce downtime, improve safety, and increase operational efficiency across warehouses, construction sites, and city infrastructure.

The team's results validate what Archetype believed from the start: organizations don't need more data; they need intelligence where it matters most, inside their operational environments.

The breakthrough here is Newton, their frontier Physical AI foundation model — massively multimodal, capable of fusing sensor data with natural language, and scaling cross-modal reasoning across increasingly complex environments, tasks, and problems.

With the Series A, they will continue to grow the team and expand partnerships to make Physical AI accessible to every organization that manages physical assets — and ultimately, to bring intelligence to every asset in the physical world.

Archetype AI enables businesses to build, customize, and deploy Physical Agents that turn real-world sensor data into actionable insights, recommendations, and automations.

Archetype AI, the leading Physical AI company, closed a $35 million Series A round led by IAG Capital Partners and Hitachi Ventures, with participation from new and existing investors including Bezos Expeditions, Venrock, Amazon Industrial Innovation Fund, Samsung Ventures, Systemiq Capital, E12 Ventures, Higher Life Ventures, and others. Archetype AI is also introducing new tools to build and deploy Physical Agents that sense, understand, and act in real-world environments.

“Archetype AI is refining and defining the full stack of Physical AI, creating scalable solutions that operate in the real world, not just on screens or in simulations,” said Dennis Sacha, Founding Partner at IAG Capital Partners. “This team is building a category-defining company that will transform how humans and agents interact with everything from edge devices to critical infrastructure, generating lasting value at scale.”

While AI agents are increasingly used to automate digital workflows online, extending those capabilities into the physical world has remained complex, costly, and resource-intensive. Traditional approaches — siloed industry-specific solutions or custom ML tools — demand significant engineering expertise and capital investments, and they only solve narrow business problems (for example, safety) without the ability to generalize across multiple real-world use cases.

With Archetype’s Physical Agents, businesses can turn raw sensor data into real-world intelligence in minutes using natural language prompts and APIs that integrate seamlessly with existing frameworks. Powered by Newton™, a breakthrough Physical AI foundation model, the agents fuse multimodal sensor data, video, and contextual information to generate insights, recommendations, and automations. The Archetype platform offers pre-built services like Agent Toolkit, enabling rapid assembly, testing, and deployment of Physical Agents. These agents can run anywhere (in a private cloud, on-premises, or at the edge), ensuring complete data sovereignty and enterprise-grade security, a critical requirement for physical industries.

“Physical Agents allow businesses to move from intent to action with speed and efficiency that were not previously possible,” said Ivan Poupyrev, Co-founder and CEO of Archetype AI. “Newton provides general physical intelligence, while the Archetype platform and Agent Toolkit make it simple to build and deploy customer-specific solutions that solve their critical problems by using specific knowledge about their physical operations — here and now.”

Agent Toolkit enables businesses to build custom Physical Agents that serve diverse use cases. To accelerate development, Archetype provides pre-built, ready-to-use agents, including:

- Process Monitoring Agent: Track ongoing machine operations, detect and discover anomalies, and identify machine states.

- Task Verification Agent: Verify worker adherence to and compliance with planned workflows and procedures in services, training, and operations control.

- Safety Agent: Monitor environments for potential hazards and unsafe behaviors that can be defined in natural language to ensure safety compliance.

With these agents, customers can quickly deploy solutions tailored to their operations. The Archetype platform supports creating new agents, modifying existing ones, and enabling the model to adapt agents to new environments and requirements.

Early enterprise customers, including NTT DATA, Kajima, and the City of Bellevue, have already deployed Physical Agents to increase efficiency, reduce downtime, and improve safety in environments as varied as warehouses, construction sites, and city streets.

“Archetype AI’s Physical Agents improve operations and safety across real-world assets,” said a Samsung Ventures representative. “From reducing machine downtime in factories to monitoring construction sites in real time, their platform delivers tangible results enterprises can put into action immediately.”

The new funding will enable Archetype to accelerate scaling the Archetype platform, expand the Physical Agent capabilities, and invest further in frontier research and development to advance Newton's ability to interpret, reason, and act in the physical world. The company is releasing new research results demonstrating the state-of-the-art capabilities of Newton for physical signal-language fusion, which allows the model to generate continuous time series signals from language descriptions. With this research, Archetype is taking the next step beyond understanding the physical world toward acting on and manipulating it.

The Archetype AI Platform and Physical Agent Toolkit are available today in beta for select customers, with expanded capabilities rolling out in the coming months.

About Archetype AI

Archetype AI is the Physical AI company helping humanity make sense of the real world. Founded by Google veterans, it pioneers a new AI category that fuses real-time sensor data with natural language, enabling users to ask open-ended questions about what’s happening now and what could happen next. As the creators of Newton, a first-of-its-kind foundation model, Archetype AI designed this platform to interpret the physical world and enhance human decision-making. Archetype AI partners with Fortune Global 500 brands across industries — automotive, consumer electronics, logistics, and retail — turning real-world data into actionable insights. For more information, visit: archetypeai.io.

Contacts

Groq expands to Asia-Pacific with Equinix data center to power the next generation of AI inference

In collaboration with Equinix (Nasdaq: EQIX), Groq brings low-latency, high-efficiency compute closer to customers in Australia.

Groq, a global leader in AI inference, today announced its first AI infrastructure footprint in Asia-Pacific, through its deployment in Equinix's data center in Sydney, Australia. The development is part of its continued global data center network expansion, following launches in the U.S. and Europe. This extends Groq’s global footprint and brings fast, low-cost and scalable AI inference closer to organizations and the public sector across Australia.

Under this partnership, Groq and Equinix will establish one of the largest high-speed AI inference infrastructure sites in the country with a 4.5MW Groq facility in Sydney, offering up to 5x faster and lower-cost compute power than traditional GPUs and hyperscaler clouds. Through Equinix Fabric®, a software-defined interconnection service, organizations in Asia-Pacific will benefit from secure, low-latency, high-speed interconnectivity, ensuring seamless access to GroqCloud™ for production AI workloads with full control, compliance and data sovereignty.

Groq is already working with customers across Australia, including Canva, to deliver inference solutions tailored to their business needs, from enhancing customer experiences to improving employee productivity.

“The world doesn’t have enough compute for everyone to build AI. That’s why Groq and Equinix are expanding access, starting in Australia,” said Jonathan Ross, CEO and Founder of Groq.

Cyrus Adaggra, President, Asia-Pacific, Equinix, said: “Groq is a pioneer in AI inference, and we’re delighted they’re rapidly scaling their high-performance infrastructure globally through Equinix. Our unique ecosystems and wide global footprint continue to serve as a connectivity gateway to their customers and enable efficient enterprise AI workflows at scale.”

"We're entering a new era where technology has the potential to massively accelerate human creativity. With Australia's growing strength in AI and compute infrastructure, we're looking forward to continuing to empower more than 260 million people to bring their ideas to life in entirely new ways," said Cliff Obrecht, Co-Founder and COO of Canva.

About Groq

Groq is the inference infrastructure that powers AI with the speed and cost it requires. Founded in 2016, the company created the LPU and GroqCloud to ensure compute is faster and more affordable. Today, Groq is a key part of the American AI Stack and trusted by more than two million developers and many of the world’s leading Fortune 500 companies.

Groq Media Contact

About Equinix

Equinix (Nasdaq: EQIX) shortens the path to boundless connectivity anywhere in the world. Its digital infrastructure, data center footprint and interconnected ecosystems empower innovations that enhance our work, life and planet. Equinix connects economies, countries, organizations and communities, delivering seamless digital experiences and cutting-edge AI – quickly, efficiently and everywhere.

Equinix Media Contacts

Annie Ho (Asia-Pacific)

Graham White (Australia)

Groq expands partnership with HUMAIN to deploy Next-Generation Inference Infrastructure in Saudi Arabia

Groq, the U.S. leader in ultra-low-latency inference acceleration, and HUMAIN, the PIF company delivering global full-stack artificial intelligence solutions, announced a major expansion of their strategic partnership at the U.S.-Saudi Investment Forum held in Washington, D.C., alongside the visit of Saudi Arabia’s Crown Prince HRH Mohammed bin Salman Al-Saud.

The agreement builds on the region’s first and largest sovereign inference cluster, powered by Groq and already serving more than 150 countries. It continues the mission to establish HUMAIN as the number three global inference provider and affirms the joint commitment to keep this Groq-powered cluster the largest in the region as demand for real-time AI grows.

As part of this deployment, HUMAIN will partner with Groq to expand the advanced Groq-powered inference infrastructure already operating in the Kingdom to more than three times its current capacity, ensuring rapid time-to-market and underscoring HUMAIN’s position in ultra-low-latency, sovereign AI compute. This proven architecture serves as the backbone of the nation’s real-time AI capabilities.

In addition, HUMAIN will partner with Groq to introduce Groq’s latest next-generation chipset and rack architecture, bringing substantial improvements in compute density, power efficiency, on-die memory bandwidth, and RealScale™ interconnect performance. This next-generation technology enables the deployment of more sophisticated, larger-scale AI models and agentic workloads with deterministic speed and efficiency.

Tareq Amin, CEO of HUMAIN, highlighted the strategic significance of the expansion: “By scaling our sovereign inference cluster and incorporating both our current high-performance infrastructure and Groq’s newest chipset innovations, together, we are building one of the world’s most advanced real-time AI platforms. This expansion accelerates our mission to bring world-leading compute capabilities directly into the Kingdom’s data centers.”

“Our work with HUMAIN continues to advance. By combining immediate deployment capabilities with the introduction of our next-generation architecture, we are enabling real-time AI at a scale that sets a new global benchmark,” said Jonathan Ross, Groq Founder and CEO.

The expanded cluster will power a new wave of national-scale AI applications across government, enterprise, healthcare, finance, industrial systems, multilingual AI, and real-time agentic workflows.

Lambda AI closed $1.5B Series E to accelerate building its superintelligence cloud infrastructure

ALSO READ about Lambda AI:

Lambda AI signs multibillion-dollar Microsoft agreement to deploy AI infrastructure powered by NVIDIA GPUs

AI superintelligence cloud Lambda doubles down on Midwest expansion, to build AI factory in Kansas City

This investment will accelerate Lambda's push to deploy gigawatt-scale AI factories and supercomputers to meet demand from hyperscalers, enterprises, and frontier labs building superintelligence. The Series E round was led by TWG Global with participation from the US Innovative Technology Fund (USIT) and several existing investors.

Lambda, the Superintelligence Cloud, closed its $1.5B Series E funding round, led by TWG Global, a holding company headed by Thomas Tull and Mark Walter. Also participating in the round are Thomas Tull’s US Innovative Technology Fund (USIT) and several existing investors.

“This round of funding helps enable Lambda to develop gigawatt-scale AI factories that power services used by hundreds of millions of people every day,” said Stephen Balaban, co-founder and CEO of Lambda. “Our mission is to make compute as ubiquitous as electricity and bring the power of AI to every person in America. One person, one GPU. It’s a privilege to work with Thomas, USIT, and TWG Global to realize this vision.”

Lambda delivers large-scale AI factories that power mission-critical training and inference, just as demand for compute is rising and data center space is scarce.

“Since meeting Stephen and the Lambda team several years ago, we have been consistently impressed by their visionary focus and ability to deliver infrastructure at unprecedented scale,” said Thomas Tull, Co-Chairman of TWG Global and Chairman of USIT. “Generating enough compute power for AI is a defining infrastructure challenge of our time. We believe that Lambda is well-positioned to solve this challenge and continue to deliver in the decades ahead.”

The company’s AI specialization stems from its founders' published machine learning research, as well as its pioneering work in cloud supercomputers.

“As we move into a new phase of AI scale, the most valuable infrastructure will be that which converts kilowatts into tokens with minimal friction,” said Gaetano Crupi, Managing Director at USIT. “We are excited to continue supporting Lambda as it becomes a key player in the industrialization of inference and helps the U.S. master the energy-to-cognition pipeline.”

About TWG Global

TWG Global operates and invests in businesses with untapped potential and guides them to new levels of growth. For additional information, visit: twgglobal.com.

Contacts

Lambda Media Contact

pr@lambdal.com

TWG Media Contact

twg@prosek.com

AI market intelligence leader AlphaSense names Nilka Thomas as Chief People Officer

Former Lyft and Google executive joins to lead global people and culture strategy on the heels of the company surpassing $500 million in annual recurring revenue

AlphaSense, the AI platform redefining market intelligence for the business and financial world, today announced that Nilka Thomas has joined the company as Chief People Officer, following a period of record growth in which AlphaSense surpassed $500 million in annual recurring revenue (ARR).

Thomas will oversee AlphaSense's global people strategy, including talent acquisition, culture, organizational development, employee experience and inclusion, as the company continues to expand its global footprint and scale its AI and market intelligence platform. She brings more than two decades of experience building and leading high-performance, inclusive organizations at some of the world's most innovative technology companies.

Most notably, Thomas served as Chief People Officer at Lyft through its IPO and its journey as a newly public company, where she led all aspects of the company's people function during a period of transformation and growth. Before Lyft, she spent more than a decade at Google, where she held senior HR and talent leadership roles focused on global staffing, culture, and inclusion initiatives.

"Nilka brings a rare combination of strategic vision, empathy, and hands-on experience leading global people organizations through high growth and scale," said Jack Kokko, Founder and CEO of AlphaSense. "Her background at Lyft and Google gives her deep perspective on how innovative cultures are built – and sustained – through times of rapid growth and transformation. As AlphaSense enters our next chapter of expansion, her leadership will be instrumental in developing the talent, systems, and culture that fuel continued innovation. I'm confident she will help us build an organization where every employee can thrive and where our people strategy remains a true competitive advantage."

"I'm passionate about building high-performing, people-first organizations that unlock both individual and business potential," said Thomas. "AlphaSense's mission to empower professionals across the business world with the insights they need to make better decisions deeply resonates with me. As the company continues to scale and surpass new milestones, we have the opportunity to shape a world-class team that reflects the vast breadth of customers and the markets we serve. I'm excited to help build an environment where world-class innovation and growth go hand in hand – and to demonstrate how a strong culture and cutting-edge AI can together redefine how people experience work."

Thomas will report directly to Founder and CEO Jack Kokko and lead the company's global people organization across North America, EMEA, and APAC.

About AlphaSense

AlphaSense is the AI platform redefining market intelligence and workflow orchestration, trusted by thousands of leading organizations to drive faster, more confident decisions in business and finance. The platform combines domain-specific AI with a vast content universe of over 500 million premium business documents – including equity research, earnings calls, expert interviews, filings, news, and internal proprietary content. Purpose-built for speed, accuracy, and enterprise-grade security, AlphaSense helps teams extract critical insights, uncover market-moving trends, and automate complex workflows with high-quality outputs. With AI solutions like Generative Search, Generative Grid, and Deep Research, AlphaSense delivers the clarity and depth professionals need to navigate complexity and obtain accurate, real-time information quickly. For more information, visit www.alpha-sense.com.

Media Contact

Pete Daly for AlphaSense

Email: media@alpha-sense.com

AI photonics leadership interview with Lightmatter CEO Nick Harris on "Scaling AI with Light Not Copper"

Catch this deep-dive interview in which Jose Pozo, CTO at Optica, sits down with Lightmatter Founder and CEO Nicholas Harris!

Lightmatter is on its way to becoming the NVIDIA of photonics and light-based AI infrastructure, which is the only way AI can truly enable the Orwellian flat, interconnected world.

Join Jose and Nick as they discuss how Lightmatter is shaping the future of optical interconnects by mastering silicon photonics. Also, hear about Nick’s pragmatic approach to transforming the company’s bold vision into reality.

Harris explains why micro-ring resonators—tiny optical modulators just 10×10 µm in size—are central to Lightmatter’s architecture. These devices deliver exceptional bandwidth density and energy efficiency, but demand precise temperature control. Lightmatter’s engineers have mastered that stability, handling temperature shifts of more than 800 °C per second, a feat that keeps performance steady at scale.
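Ring resonators shift in wavelength as they heat up, which is why they need active temperature control. The sketch below shows one generic approach: a discrete integral controller that trims an on-chip heater to hold a ring's resonance on a fixed laser wavelength. It is not Lightmatter's control scheme, which the interview does not describe. The thermo-optic shift, heater efficiency, wavelengths, and gain are all hypothetical illustration values, and the model ignores thermal lag.

```python
# Minimal sketch: integral control of a micro-ring heater.
# All parameters are hypothetical illustration values, not Lightmatter figures.

SHIFT_NM_PER_K = 0.08    # assumed resonance shift per kelvin (silicon-like ring)
HEATER_K_PER_MW = 1.0    # assumed ring heating per milliwatt of heater power
BASE_NM = 1308.0         # assumed resonance at REF_TEMP_C with the heater off
REF_TEMP_C = 25.0
TARGET_NM = 1310.0       # assumed laser wavelength the ring must track


def resonance_nm(temp_c: float) -> float:
    """Resonance wavelength as a linear function of ring temperature."""
    return BASE_NM + SHIFT_NM_PER_K * (temp_c - REF_TEMP_C)


def simulate_lock(ambient_c: list[float], gain: float = 5.0) -> tuple[float, list[float]]:
    """Step an integral controller once per ambient-temperature sample.

    Each step measures the wavelength error and adds gain * error to the
    heater power (mW), clamped at zero because a heater cannot cool.
    Returns the final heater power and the error observed at each step.
    """
    power_mw = 0.0
    errors = []
    for amb in ambient_c:
        temp = amb + HEATER_K_PER_MW * power_mw  # no thermal lag in this model
        error_nm = TARGET_NM - resonance_nm(temp)
        errors.append(error_nm)
        power_mw = max(0.0, power_mw + gain * error_nm)
    return power_mw, errors


if __name__ == "__main__":
    # Ambient drifts slowly from 25 °C toward 35 °C; the heater backs off to compensate.
    ambient = [25.0 + 0.01 * i for i in range(1000)]
    power, errs = simulate_lock(ambient)
    print(f"final heater power: {power:.2f} mW, final error: {errs[-1]:.4f} nm")
```

The loop gain here is gain × HEATER_K_PER_MW × SHIFT_NM_PER_K = 0.4. In this simplified model any loop gain between 0 and 2 converges, while larger values oscillate or diverge. A steadily drifting ambient leaves a small, constant tracking error rather than none.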

Another defining move: liquid cooling. With AI chips now reaching up to two kilowatts per package and racks surpassing 600 kW, air cooling is no longer viable. Lightmatter designs its photonic platforms—like the Bobcat and M1 1000—for dense, water-cooled environments, achieving up to four times better energy efficiency than copper interconnects.

The company’s upcoming Bi-Di (bidirectional) optical link pushes 800 Gb/s per fibre at just 2.6 pJ/bit, a sign that optical interconnects can outperform copper in both speed and power.
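The energy-per-bit figure translates directly into link power, because power equals data rate times energy per bit. For the quoted Bi-Di numbers, 800 Gb/s at 2.6 pJ/bit works out to about 2.08 W per fibre. The sketch below does that arithmetic. The fibre count in the usage line is a hypothetical value for illustration, not a Lightmatter deployment figure.

```python
def link_power_watts(gbps: float, pj_per_bit: float) -> float:
    """Power drawn by a link: bits per second times joules per bit."""
    bits_per_second = gbps * 1e9
    joules_per_bit = pj_per_bit * 1e-12
    return bits_per_second * joules_per_bit


if __name__ == "__main__":
    per_fibre = link_power_watts(800, 2.6)  # figures quoted for the Bi-Di link
    fibres = 64                             # hypothetical fibre count, illustration only
    print(f"{per_fibre:.2f} W per fibre, {per_fibre * fibres:.1f} W for {fibres} fibres")
```

At a fixed data rate, interconnect power scales linearly with energy per bit. A link needing four times the energy per bit would therefore draw four times the power at the same 800 Gb/s.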

Harris emphasises collaboration—but not with startups.

“Each new chip program costs tens of millions. We partner with large semiconductor and hyperscale companies that can invest and deliver volume.”

That said, Lightmatter is deeply engaged across the supply chain—GlobalFoundries, TSMC, Amkor Technology, Inc., and ASE Global among them—and works closely with ODMs to advance fibre management and rack design for mass deployment.

Lightspark acquires Striga to enable end-to-end payments experience for fiat and crypto

We're beyond excited to share that Lightspark has acquired Striga. As the first entity licensed under Estonia's MiCA-like regime, and having processed well over €200M since its first customer went live in early 2023, Striga continues to build and ship regulated payments infrastructure that covers the best of Bitcoin, stablecoins, and fiat for Europe.

True to the Y Combinator ethos of building something people want, Striga found genuine product-market fit, and joining forces with Lightspark gives the two companies the chance to make history in Europe together. With the vast resources Lightspark brings, they intend to grow faster and expand the breadth of capabilities under their Electronic Money Institution (EMI) and Crypto Asset Service Provider (CASP) licenses.

The Striga team built the platform to solve their own pain point at a time when no solution compliantly covered both Bitcoin and fiat for global payments. After tackling the most regulated market on the planet (Europe), they now get to realize their ambition of operating on a truly global scale as part of a worldwide organization led by an incredible team.

Lightspark shared that they have acquired Striga. Striga is the pioneering embedded finance and digital asset infrastructure platform with a robust regulatory footprint in Europe, operating through its VASP license across 30 countries in the European Economic Area (EEA). Striga’s deep regulatory expertise, licensing framework, and financial services stack, including direct integrations with fiat providers, card networks, and banks, will accelerate Lightspark’s ability to bring compliant, borderless money movement to millions of users and businesses worldwide.

Lightspark will work closely with Striga to bring incremental capabilities and offerings to customers, while also strengthening its regulatory positioning in Europe by applying for both e-money and MiCA licenses. Lightspark will then be able to offer a complete end-to-end experience to its customers, both for fiat and crypto across European member states.

Why Striga?

Striga was built from the ground up to solve a problem that every fintech or crypto builder knows: launching a compliant financial product is hard. Really hard.

From card issuing and Virtual IBAN creation to crypto custody and fiat ramps, Striga has built a modern payments stack that abstracts away the complexity of regulation, licensing, compliance, and integration with banks, brokers, and card schemes. Striga has led digital asset-enabled payments in Europe—enabling builders to launch sophisticated financial products in weeks, not years.

They didn’t just build the stack. They also earned the VASP license to operate in the EU. Striga was the first company approved under Estonia’s new, MiCA-style regulatory system. In a post-2022 world where regulators are cracking down, Striga has emerged as a rare success story: compliant, cash flow-positive, and trusted by over 50 companies building regulated finance apps, wallets, and card programs across the EEA.

What This Means for Lightspark

Lightspark is building open payments for the Internet, powered by Bitcoin. To unlock that, Lightspark needs to make payments as open, easy, compliant, and seamless as sending a message online, especially for financial institutions and regulated businesses. With Striga, Lightspark gains:

- A robust regulatory footprint in Europe, including a VASP license and direct integrations with card networks and banks. As part of this strategic acquisition, Striga will further strengthen its regulatory position in the EU by applying for both e-money and MiCA licenses. This will allow Lightspark to support the entire payment experience for both fiat and crypto across European countries.

- An end-to-end fintech infrastructure platform – enabling Virtual IBAN issuance, card issuing, crypto-fiat ramps, and custody, all through APIs.

- A proven team and platform – with product-market fit, well over $200M in processed volume, and deep expertise in compliance and risk management. Jointly, Lightspark and Striga are working towards being among the first regulated e-Money institutions and Crypto Asset Service Providers in Estonia and Europe.

- A faster path to compliant go-to-market – helping partners in Europe and beyond embed Bitcoin-native payments into existing financial workflows.
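Virtual IBANs, like any IBAN, carry two check digits defined by ISO 13616 and verified with a mod-97 calculation. The sketch below shows that generic check. It is not Striga's API, whose interfaces the announcement does not describe, and the function names are hypothetical. The sample IBANs are standard documentation examples, not real accounts.

```python
def _to_digits(text: str) -> str:
    """Replace letters with two-digit numbers (A=10 ... Z=35); keep digits as-is."""
    return "".join(str(int(ch, 36)) for ch in text)


def is_valid_iban(iban: str) -> bool:
    """ISO 13616 check: move the first four characters to the end,
    convert letters to numbers, and require the result mod 97 to equal 1."""
    iban = iban.replace(" ", "").upper()
    if len(iban) < 5 or not (iban.isascii() and iban.isalnum()):
        return False
    rearranged = iban[4:] + iban[:4]
    return int(_to_digits(rearranged)) % 97 == 1


def iban_check_digits(country: str, bban: str) -> str:
    """Compute the two check digits for a country code and BBAN:
    use '00' as a placeholder, then take 98 minus the mod-97 remainder."""
    rearranged = bban.upper() + country.upper() + "00"
    return f"{98 - int(_to_digits(rearranged)) % 97:02d}"


if __name__ == "__main__":
    print(is_valid_iban("GB82 WEST 1234 5698 7654 32"))      # → True
    print(iban_check_digits("DE", "370400440532013000"))     # → 89
```

Computing check digits with a "00" placeholder and subtracting the remainder from 98 guarantees the finished IBAN leaves a remainder of exactly 1, which is what the validator tests for.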

Compliant Bitcoin-Powered Payments

Bringing Bitcoin into everyday finance isn’t just about technology—it’s about trust, compliance, and real-world interoperability. Striga’s infrastructure bridges the gap between crypto innovation and traditional financial institutions by doing the heavy lifting: onboarding users, managing compliance, issuing cards, and connecting to fiat rails. This, paired with Lightspark’s protocol-level capabilities, such as Spark, provides a powerful combination: regulated endpoints that can connect to open, global money movement.

Imagine a neobank in France issuing a debit card backed by Bitcoin. A crypto wallet in Spain offering instant Bitcoin payments with IBAN pay-ins. A cross-border payments app in Portugal onboarding users and seamlessly converting euros to sats. All in a fully compliant way. That’s the future Lightspark is building, where Bitcoin isn't just an asset, but a foundation for global, open, regulated payments.
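Converting euros to sats is fixed-point arithmetic: one bitcoin is 100,000,000 satoshis, so the sat amount is the euro amount divided by the BTC/EUR price, times 10^8. The sketch below uses Python's `decimal` module to avoid binary floating-point rounding. The exchange rate is a hypothetical input, and rounding down to whole sats is an assumed policy, not a description of Lightspark's or Striga's behaviour.

```python
from decimal import Decimal, ROUND_DOWN

SATS_PER_BTC = Decimal(100_000_000)  # 1 BTC = 100,000,000 satoshis


def eur_to_sats(amount_eur: Decimal, btc_price_eur: Decimal) -> int:
    """Convert a euro amount to whole sats at a given BTC/EUR price.

    Rounds down so the converted amount never exceeds what the euros cover
    (an assumed policy; a real service may round differently).
    """
    if btc_price_eur <= 0:
        raise ValueError("BTC price must be positive")
    if amount_eur < 0:
        raise ValueError("amount must be non-negative")
    sats = amount_eur / btc_price_eur * SATS_PER_BTC
    return int(sats.to_integral_value(rounding=ROUND_DOWN))


if __name__ == "__main__":
    # Hypothetical price of EUR 80,000 per BTC, for illustration only.
    print(eur_to_sats(Decimal("50"), Decimal("80000")))  # → 62500
```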

What’s Next

Lightspark’s unified platform will deliver a fully compliant, always-on, Internet-scale payments experience for partners and their users. Of course, Striga will continue to serve existing customers with the same high level of service and support, while becoming an integral part of Lightspark’s infrastructure stack.

Lightspark is thrilled to welcome the entire Striga team, including CEO Prashanth Balasubramanian and key leaders across compliance, risk, engineering, and growth. They’ve built something exceptional—and together, Lightspark and Striga are going to take it even further.

About Lightspark

The Internet has open protocols for everything, except money. Lightspark is changing that. We're building modern, always-on payment solutions powered by Bitcoin: the only open, neutral network for moving value. With enterprise tools like Connect, UMA, and Spark, businesses can send and receive money instantly, securely, and at a fraction of the cost, anytime, anywhere. Follow on X @lightspark

About Striga

Striga has built the tools that innovators need to build compliant payment applications covering Bitcoin, stablecoins, and fiat across 30 countries in the EEA, without doing any of the heavy lifting. Backed by Y Combinator, UC Berkeley, Stillmark, Fulgur Ventures, and many other notable names, Striga has enabled European businesses to move more than 2000 Bitcoin in less than 28 months, at a tiny fraction of the time and cost it takes to build an EU-compliant payment product.