Airspace Link Receives FAA NTAP Approval as UTM Provider for BVLOS Strategic Deconfliction Services

FAA Near-Term Approval Process (NTAP) Positions Cities, Federal Installations, and Commercial Operators for Proposed Part 108 Implementation

Airspace Link, a leading FAA-approved UAS Service Supplier of LAANC and B4UFLY services, today announced it has received formal approval of its Unmanned Aircraft System (UAS) Traffic Management (UTM) services through the FAA Near-Term Approval Process (NTAP). The Letter of Acceptance (LOA), issued September 23, 2025, confirms that the FAA has evaluated Airspace Link's UTM strategic deconfliction service and found it effectively mitigates the safety risk of drone-to-drone collision.

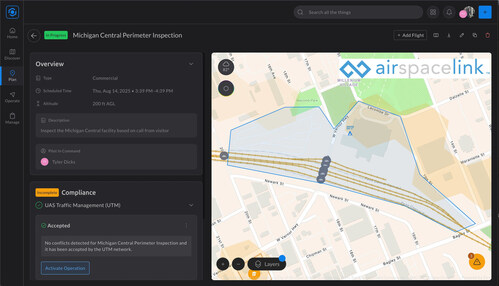

This milestone positions Airspace Link's AirHub® Portal as the leading Drone Operations Management System (DOMS) for enterprise that unifies flight planning, fleet management, FAA B4UFLY safety checks, LAANC authorization, risk assessment, and BVLOS strategic deconfliction within a single platform—delivering the comprehensive digital infrastructure commercial operators, cities, and federal installations will need as the FAA's proposed Beyond Visual Line of Sight (BVLOS) rules are expected to take effect in 2026.

The approval comes at a critical moment for organizations preparing for the drone economy. Under executive orders mandating pathways to routine BVLOS operations and the comprehensive Part 108 regulations proposed on August 5, 2025, state and local governments, federal agencies, and commercial operators face an urgent need to establish drone operation frameworks and technology infrastructure. In most urban and suburban areas, BVLOS operations conducted under the proposed Part 108 rules will require operators to use an FAA-approved Automated Data Service Provider (ADSP) for strategic deconfliction.
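As a rough illustration of what strategic deconfliction involves, operators share planned flight volumes before takeoff and check them for overlap in space and time. The sketch below is not Airspace Link's implementation or an FAA-specified algorithm. The `OperationalIntent` type, its simple bounding-box geometry, and the function names are assumptions made for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class OperationalIntent:
    """Hypothetical 4D volume: lat/lon box, altitude band, and time window."""
    operator: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float
    min_alt_m: float
    max_alt_m: float
    start: datetime
    end: datetime


def _overlaps(a_min, a_max, b_min, b_max) -> bool:
    # Closed intervals overlap when each one starts before the other ends.
    return a_min <= b_max and b_min <= a_max


def conflicts(a: OperationalIntent, b: OperationalIntent) -> bool:
    """Two intents conflict only if they overlap in every dimension."""
    return (
        _overlaps(a.min_lat, a.max_lat, b.min_lat, b.max_lat)
        and _overlaps(a.min_lon, a.max_lon, b.min_lon, b.max_lon)
        and _overlaps(a.min_alt_m, a.max_alt_m, b.min_alt_m, b.max_alt_m)
        and _overlaps(a.start, a.end, b.start, b.end)
    )


def find_conflicts(proposed, accepted):
    """Return the already-accepted intents that the proposed intent overlaps."""
    return [other for other in accepted if conflicts(proposed, other)]


# Illustrative data only: two flights over the same area, one later flight.
t0 = datetime(2026, 1, 1, 9, 0)
delivery = OperationalIntent(
    "delivery-co", 42.33, 42.34, -83.05, -83.04, 30, 90,
    t0, t0 + timedelta(minutes=30),
)
inspection = OperationalIntent(
    "inspect-co", 42.335, 42.345, -83.045, -83.035, 60, 120,
    t0 + timedelta(minutes=15), t0 + timedelta(minutes=45),
)
later = OperationalIntent(
    "inspect-co", 42.335, 42.345, -83.045, -83.035, 60, 120,
    t0 + timedelta(minutes=60), t0 + timedelta(minutes=90),
)
found = find_conflicts(delivery, [inspection, later])
```

In this toy case only the first inspection flight conflicts with the delivery, because the later one is separated in time. A production service would use real geometry and a shared network for exchanging intents rather than a local list.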

"UTM is the digital infrastructure that will let drones scale safely and predictably in our communities," said Michael Healander, Co-Founder and CEO of Airspace Link. "Completing NTAP approval is a major step forward for our customers and partners. This means that AirHub® Portal can exchange drone operations flight intent across a collaborative digital network, so drone deliveries, inspections, and public safety missions can launch faster, avoid conflict, and earn public trust. We're ready for what's next under Part 108 and Part 146 and already delivering it today."

"AirHub® Portal brings UTM seamlessly into your existing workflow," said Tyler Dicks, Head of Product at Airspace Link. "Operators can plan BVLOS missions, assess risk, coordinate with nearby flights, and monitor live activity, bringing together drone operations, weather, ADS-B data, drone detection, local constraints, and your own GIS information into a single pane of glass. With this U.S. Shared Airspace onboarding, coordination now extends across all participating operators, not just within a single platform, positioning you for Part 108 and Part 146 compliance from day one."

Strategic Positioning Under Proposed Part 108 Rules

The proposed Part 108 rules will introduce operational permits for smaller operators and operational certificates for larger-scale missions, with both pathways requiring integration with FAA-approved ADSP/UTM services for strategic deconfliction in most populated areas. Organizations that establish comprehensive drone frameworks early will be positioned to leverage federal funding opportunities and implement advanced emergency response, infrastructure inspection, and delivery services as the rules take effect in 2026.

Comprehensive Platform for the Drone Economy

Airspace Link's AirHub® Portal serves over 100,000 monthly users at 6,000 unique businesses, municipalities, and federal agencies in multiple jurisdictions. AirHub® Portal delivers strategic insight and situational awareness into all drone operations, whether coordinating multiple emergency response teams, monitoring delivery operations, or tracking airspace activity near sensitive facilities.

About Airspace Link

Founded in 2018, Airspace Link is a Detroit-based drone software company empowering governments, enterprises, and public safety agencies to plan, manage, and scale drone operations safely. Airspace Link is an FAA-approved provider of B4UFLY, LAANC, and U.S. UTM services and an Esri technology partner. Its AirHub® Portal serves as a Drone Operations Management System (DOMS) that unifies flight planning, risk assessment, UTM coordination, and real-time situational awareness. By connecting national airspace data with local GIS, policies, and real-time inputs, Airspace Link helps communities and operators integrate drones into everyday life with confidence. Learn more at airspacelink.com.

Media Contact

Rich Fahle, Vice President of Marketing

Samantha Stewart Gacaferi, Marketing Manager

rich.fahle@airspacelink.com

sam.stewart@airspacelink.com

Leading crypto payments network Mesh launches Mesh AI Wallet

With Connectivity To Over 300 Wallets And Exchanges, Mesh AI Wallet Enables the First AI Agent Buying Physical Goods with Stablecoins

- A user connects an existing crypto wallet to Mesh's system.

- The user provides a simple prompt, such as "buy a bag of dog food from this retailer," to their Mesh AI (MAI) agent.

- The AI agent uses Mesh's underlying technology to execute the purchase by converting funds from the user's wallet into stablecoins for a quick checkout.

- Mesh's infrastructure handles the complex backend process of connecting and orchestrating payments across over 300 different wallets, exchanges, and merchants.

- The wallet supports Google's Agent Payments Protocol (AP2), a framework for enabling secure AI-driven transactions.

- Simplify transactions: It removes complexity from crypto payments by handling the process from start to finish based on a simple user command.

- Enable new uses: The technology allows for the creation of autonomous transactions and commerce, expanding the potential uses of digital assets.

- Make crypto more accessible: The platform is designed to make crypto payments feel as seamless and secure as using traditional payment systems.
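The flow described in the bullets above can be pictured with a small toy model. This is a sketch under stated assumptions, not Mesh's API or the AP2 protocol: the `Wallet` class, the function names, the use of `USDC` as the stablecoin, and the fixed exchange rate are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Wallet:
    """Hypothetical connected wallet: asset symbol -> amount held."""
    balances: dict


def parse_prompt(prompt: str, catalog: dict) -> tuple:
    """Toy intent parser: pick the first catalog item named in the prompt."""
    text = prompt.lower()
    for item, price_usd in catalog.items():
        if item in text:
            return item, price_usd
    raise ValueError("no known item in prompt")


def fund_stablecoin(wallet: Wallet, asset: str,
                    usd_needed: Decimal, usd_price: Decimal) -> None:
    """Convert just enough of `asset` into USDC to cover any shortfall."""
    shortfall = usd_needed - wallet.balances.get("USDC", Decimal("0"))
    if shortfall <= 0:
        return
    asset_needed = shortfall / usd_price
    if wallet.balances.get(asset, Decimal("0")) < asset_needed:
        raise ValueError("insufficient funds")
    wallet.balances[asset] -= asset_needed
    wallet.balances["USDC"] = wallet.balances.get("USDC", Decimal("0")) + shortfall


def agent_checkout(prompt: str, wallet: Wallet, catalog: dict,
                   asset: str, usd_price: Decimal) -> dict:
    """Prompt -> order -> stablecoin funding -> payment, with no human step."""
    item, price_usd = parse_prompt(prompt, catalog)
    fund_stablecoin(wallet, asset, price_usd, usd_price)
    wallet.balances["USDC"] -= price_usd
    return {"item": item, "paid_usdc": price_usd}


# Illustrative values only; the price and exchange rate are made up.
wallet = Wallet({"ETH": Decimal("1"), "USDC": Decimal("10")})
catalog = {"dog food": Decimal("40")}
receipt = agent_checkout(
    "buy a bag of dog food from this retailer",
    wallet, catalog, asset="ETH", usd_price=Decimal("2000"),
)
```

Amounts use `Decimal` rather than floats so that the toy balances stay exact. A real integration would also need authorization, pricing, and settlement steps that this sketch leaves out.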

Mesh, the leading crypto payments network, today unveiled the future of agentic commerce during a live demo at TOKEN2049, demonstrating an AI agent completing a crypto transaction. The demonstration highlighted how an AI agent can seamlessly complete a real-world e-commerce purchase using stablecoins, in this case buying dog food from a retailer.

This end-to-end flow - from account funding to stablecoin checkout - underscores Mesh's role as the connective layer powering programmable payments. By integrating wallets, exchanges, and merchants into a single experience and intelligently orchestrating payments across all parties, Mesh enables AI agents to securely transact on behalf of users without human intervention.

Mesh's infrastructure eliminates the complexity of agent-driven transactions. Now, users can simply connect their wallet to Mesh AI (MAI), and with a simple prompt, leverage Mesh's proprietary technology to complete a crypto transaction. Mesh also provides the ability to spin up new wallets programmatically, expanding the scope of what agents can do beyond the demo shown at TOKEN2049.

"Agentic commerce is no longer a thought experiment – it's here," said Bam Azizi, Co-Founder and CEO at Mesh. "This is the first step toward a world where transactions are intelligent, borderless, seamless, and secure. Our technology is the unifying infrastructure that will empower agents to harness the power of this new financial model."

This new offering follows Mesh's support of Google's new Agent Payments Protocol (AP2), a framework for enabling AI agents to securely transact on behalf of users. By powering intelligent payment orchestration across more than 300 platforms, Mesh unlocks access to the digital asset market, enabling agentic commerce to tap into this massive, growing opportunity. Compared to legacy financial systems, crypto is an ideal fit for programmable commerce, enabling autonomous agents to initiate, authorize, and complete transactions with speed and flexibility.

For more information about Mesh, visit https://meshconnect.com/.

About Mesh

Founded in 2020, Mesh is building the first global crypto payments network, connecting hundreds of exchanges, wallets, and financial services platforms to enable seamless digital asset payments and conversions. By unifying these platforms into a single network, Mesh is pioneering an open, connected, and secure ecosystem for digital finance. For more information, visit https://www.meshconnect.com/.

Contact: mesh@missionnorth.com

AI Super Intelligence cloud Lambda achieves NVIDIA Exemplar Cloud performance validation

Lambda is one of the first cloud providers to achieve NVIDIA validation for mission-critical AI training workloads at scale

Lambda, the Superintelligence Cloud, today announced it has achieved NVIDIA Exemplar Cloud Status, becoming one of the first GPU cloud providers worldwide to receive performance validation from NVIDIA.

This validates Lambda’s ability to deliver consistent performance on NVIDIA H100 GPUs at scale. Lambda's infrastructure meets NVIDIA's requirements for real-world workload performance and reliability, with transparent, workload-specific benchmark results demonstrating performance within 5% of NVIDIA's published baselines for large-scale training workloads.

“NVIDIA validates what our customers already know: Lambda delivers the infrastructure and operations that leading AI labs and enterprises trust for their most critical AI workloads,” said Paul Zhao, Head of Product at Lambda. “Our 13-year focus on AI and co-engineering partnership with NVIDIA has fostered the expertise to operate at the scale and reliability the superintelligence race demands.”

As part of the NVIDIA ecosystem, Lambda is providing AI-optimized infrastructure to support the DGX Cloud Lepton marketplace to power AI training, fine-tuning, and inference workloads.

“The NVIDIA Exemplar Cloud achievement is a testament to Lambda’s unwavering commitment to delivering world-class AI infrastructure,” said Warren Barkley, Vice President of Product Management at NVIDIA. “The Exemplar Clouds initiative sets a transparent standard for performance and resiliency, and we congratulate Lambda for embracing a high standard of workload performance in their infrastructure. This accomplishment means that developers and enterprises can now deploy their most demanding AI workloads with absolute confidence, knowing they are running on a platform validated for superior performance, reliability, and total cost of ownership.”

Lambda currently serves over 200,000 AI developers and teams worldwide. Over the past decade, Lambda has earned six NVIDIA awards, and now with Exemplar Cloud, further affirms its position as one of NVIDIA’s most trusted partners.

About Lambda

Lambda, The Superintelligence Cloud, builds gigawatt-scale AI factories for training and inference. From prototyping to serving billions of users in production, we build the underlying infrastructure that powers AI. Lambda was founded in 2012 by published AI engineers.

Lambda’s mission is to make compute as ubiquitous as electricity and give every person access to artificial intelligence. One person, one GPU.

Contacts

Media Contact

pr@lambdal.com

Aerospace innovator Odys Aviation closes Series A Funding to Accelerate Full-Scale Flight Testing and Global Operational Launches

Odys Aviation, a dual-use aviation company building hybrid-electric vertical take-off and landing (VTOL) aircraft, today announced the closing of its $26M Series A funding round. Led by Nova Threshold and with investment from Tuchen Ventures and key insiders, the capital will be used to accelerate U.S. full-scale flight testing of its Laila aircraft and to expand the team as the company prepares for its first international launch operations in Q1 2026.

Building on major technical milestones achieved in 2024 and 2025, Odys is entering the next phase of execution, proving that advanced air mobility is not just about aircraft in isolation, but about building the operational ecosystem that will connect regions, economies and ultimately communities in entirely new ways.

“This funding marks a critical inflection point for Odys,” said James Dorris, CEO of Odys Aviation. “We’ve already proven the technology and performance potential of hybrid-electric VTOL. Now, our focus shifts to scaling operations, bringing together the right people, systems and partners to demonstrate what a full AAM ecosystem looks like in practice, not theory.”

"Odys is positioned to meet strong customer demand for longer-range, high-efficiency regional mobility,” said Justin Hamilton, Managing Director of Nova Threshold. “Their innovative aircraft and hybrid-electric propulsion system have demonstrated outstanding performance and reliability. By targeting unmanned cargo and defense applications within existing U.S. and international regulations, Odys is creating a faster, more credible path to market readiness and long-term profitability, setting the stage for future manned operations.”

The Series A follows successful integration and testing of Odys Aviation’s hybrid propulsion system and the completion of key design and certification milestones under the JARUS/SORA 2.5 framework. The company’s ongoing U.S. flight test campaign will validate full-scale flight performance and autonomy systems, laying the foundation for a seamless transition to commercial demonstration in Q1 of 2026.

Odys Aviation’s global Proof of Concept programs will represent the world’s first end-to-end operational demonstrations of a dual-use VTOL system, linking civil, commercial, and defense applications in a single scalable model. The programs will showcase how the company’s hybrid architecture enables both sustainable and practical deployment at long range and high payload capacity, bridging the gap between conventional aviation and emerging AAM services. They will build on the company’s proven track record with the United States Department of Defense, which has awarded Odys 14 contracts across three branches to date, valued at over $11M, to help address gaps in UAV availability, affordability and scalability.

“What sets Odys apart is our ability to execute beyond a single aircraft or flight,” said Andy Apple, VP of Strategy for Odys Aviation. “We’re developing the infrastructure, autonomy and operational frameworks needed to integrate AAM into real-world airspace and regulatory environments. This funding ensures we can deliver on that vision for our customers and partners.”

With this new capital, Odys will make strategic hires across engineering, certification, and operations, expanding its U.S. and international presence as it scales. The company continues to attract interest from both commercial airlines and government partners seeking sustainable long-range connectivity and dual-use logistics capabilities.

About Odys Aviation

Odys Aviation designs, develops and manufactures long-range, technologically advanced dual-use VTOL aircraft that solve global challenges across defense, logistics, and passenger travel. The company is pioneering the next generation of VTOL aircraft which use hybrid-electric propulsion systems to deliver the optimal balance between range and payload.

Based in Long Beach, CA, Odys Aviation was founded in 2022 and is led by seasoned engineers and strategists from SpaceX, Gulfstream, Airbus, Tesla and the U.S. Department of Defense. The company has secured $11B+ in signed LOIs to date. Visit www.odysaviation.com for more information.

Contacts

Media Contact: Louise@odysaviation.com

AI business intelligence leader AlphaSense acquires Carousel to power AI-driven Excel modeling

Acquisition extends AlphaSense's leadership in market intelligence by embedding AI-driven financial modeling directly into analyst workflows

AlphaSense, the AI platform redefining market intelligence for the business and financial world, today announced at its AlphaSummit customer conference the acquisition of Carousel, an innovator in AI-powered Excel modeling. This acquisition expands AlphaSense's leadership in generative AI by adding Carousel's dynamic modeling technology to AlphaSense's generative AI suite, creating a new standard of unified intelligence – from documents to financial data, and now dynamic models.

For decades, Excel has been central to decision-making, yet building models has required burdensome manual workflows. With Carousel added as an extension of AlphaSense's end-to-end AI platform, customers will be able to create and update financial models almost at the speed of thought. AlphaSense will uniquely combine generative Excel modeling on top of the world's most extensive premium content universe, including its fully drivable Canalyst models for 4,500 companies, incorporating historical financials and granular KPI drivers for detailed modeling of every company's financial performance.

"This acquisition extends and accelerates our efforts to automate financial workflows with Generative AI, uniquely combining Canalyst's one-of-a-kind data asset with Carousel's AI-driven modeling tool," said Jack Kokko, CEO and Founder of AlphaSense. "AlphaSense has long been the leader in AI-driven market intelligence. By incorporating what the Carousel team has built, we extend that leadership into Excel, streamlining one of the most important analytical workflows in the financial and business world."

Carousel eliminates the most tedious parts of an analyst's workflow – extracting data from PDFs and presentations, formatting models, building formulas, and explaining complex logic. Now, as Carousel's modeling velocity becomes seamlessly embedded into the AlphaSense ecosystem, it evolves from an execution tool into an iterative thought partner. Analysts can spend less time wrestling with manual tasks and more time generating strategic insights, with AI that not only builds models faster but also enables intelligent iteration – helping them sharpen their craft and deliver the high-value analysis that drives smarter, faster decisions.

This will deliver unmatched speed, accuracy, and depth of insight by enabling customers to:

- Build and iterate on models informed by market intelligence: Carousel can both build first drafts and iteratively refine models with natural language. With AlphaSense integrated, analysts can incorporate insights from earnings calls, research reports, and competitive filings to drive their modeling assumptions and explore new strategic angles, all without leaving Excel.

- Ingest unstructured sources from anywhere into Excel: Carousel already extracts data from user-provided PDFs, CSVs, and presentations. With AlphaSense integrated, analysts can also search across thousands of filings for specific line items buried in footnotes or across multiple 10-Ks, pulling precise data points directly into models without manual hunting.

- Understand, explain, and check models: Model Walkthrough breaks down cell-level formulas into plain language, while Carousel's chat interface helps users understand how models are architected and laid out. Senior team members can quickly get comfortable with junior team members' work, simplifying reviews, ensuring accuracy, and accelerating onboarding.

- Work with context-aware AI directly in Excel: Carousel understands your model's context to help analysts test more scenarios, pursue alternative hypotheses, and stress-test assumptions thoroughly. Analysts gain leverage to pursue deeper insights that would otherwise require days of manual work.

"Carousel takes care of tedious Excel work, but together with AlphaSense, we can now build toward a bigger opportunity," said Daniel Wolf, Co-Founder of Carousel. "By pairing our modeling velocity with AlphaSense's unparalleled intelligence capabilities, millions of documents, and thousands of Canalyst models, we can help analysts iterate with market insights and pursue deeper analysis they wouldn't have time for otherwise. We can make analysts better, not just faster."

A Unified Vision

This acquisition reinforces AlphaSense's position as the market's AI and intelligence leader while expanding its value proposition from research to modeling – and from insight to action. The integration of Carousel's technology ensures a seamless, in-platform experience where Excel modeling is embedded directly into AlphaSense's generative AI workflows, enabling customers to work smarter and faster than ever before.

The financial terms of the transaction were not disclosed.

About AlphaSense

AlphaSense is the AI platform redefining market intelligence and agentic workflow orchestration, trusted by thousands of leading organizations to drive faster, more confident decisions in business and finance. The platform combines domain-specific AI with a vast content universe of over 500 million premium business documents – including equity research, earnings calls, expert interviews, filings, news, and clients' internal proprietary content. Purpose-built for speed, accuracy, and enterprise-grade security, AlphaSense helps clients extract critical insights, uncover market-moving trends, and automate complex workflows with high-quality outputs. With AI solutions like Generative Search, Generative Grid, and Deep Research, AlphaSense delivers the clarity and depth professionals need to navigate complexity and obtain accurate, real-time information quickly. For more information, visit www.alpha-sense.com.

Media Contact

Pete Daly for AlphaSense

Email: media@alpha-sense.com

Reusable Rocket innovator Stoke Space Raises $510 Million to Scale Manufacturing of Fully Reusable Nova Launch Vehicle

- $510 Million Series D Funding and nears $2B valuation: Stoke Space announced a significant Series D funding round of $510 million, led by the US Innovative Technology Fund, along with a $100 million debt facility. This capital is intended to accelerate the development of its reusable Nova rocket.

- Stoke Space’s $510M round shows the future of launch belongs to defense - TechCrunch

- Space Startup Stoke Nears $2 Billion Valuation in New Financing - The Information

Thomas Tull's USIT leads round to support Stoke’s mission to strengthen resilience and competition across the U.S. space industrial base

Stoke Space, the rocket company developing fully reusable medium-lift launch vehicles, announced today it has raised $510 million in Series D funding led by Thomas Tull’s US Innovative Technology Fund (USIT) in conjunction with a $100 million debt facility led by Silicon Valley Bank. Stoke’s new financing, which more than doubles its total capital raised to $990 million, will accelerate product development and expansion.

The round also drew support from Washington Harbour Partners LP and General Innovation Capital Partners, underscoring Stoke’s importance to national security and the U.S. industrial base. Existing backers who also participated include 776, Breakthrough Energy, Glade Brook Capital, Industrious Ventures, NFX, Sparta Group, Toyota Ventures, and Woven Capital, among others.

Earlier this year, the U.S. Space Force awarded Stoke a National Security Space Launch contract that will expand the country’s access to space. The award reflects the growing demand for medium-lift capacity across commercial, defense, and emerging architectures, such as the Golden Dome. Stoke’s fully and rapidly reusable Nova launch vehicle is being developed to provide high-frequency access to orbit and support missions to, through, and from space, including satellite constellation deployment, in-space mobility, and downmass.

“Launch capacity is now a defining factor in the U.S.’s ability to compete and lead in the space economy,” said Thomas Tull, Chairman of USIT. “Stoke’s pioneering approach to reusable launch systems directly advances our national security and commercial access to orbit. Their vision for resilient, high-frequency launch operations is the kind of innovation essential to maintaining leadership in the space industry. We’re proud to support their mission in defining the next chapter of U.S. aerospace.”

Since its Series C, Stoke has completed mission duty cycle testing on Stage 1 and Stage 2 flight-like engine configurations and advanced structural qualifications for both stages. The company has also made substantial progress in the refurbishment of Launch Complex 14 at Cape Canaveral Space Force Station, Florida, which is scheduled for activation in early 2026.

“This funding gives us the runway to complete development and demonstrate Nova through its first flights,” said Andy Lapsa, co-founder and CEO, Stoke. “We’ve designed Nova to address a real gap in launch capacity, and the National Security Space Launch award, along with our substantial manifest of contracted commercial launches, affirms that need. The fresh support from our investors and government partners enables our team to remain laser focused on bringing Nova’s unique capabilities to market.”

The new capital will expand production capacity for the Nova vehicle and complete activation of Launch Complex 14 at Cape Canaveral. Stoke will also invest in supply chain, its Boltline product, and infrastructure to prepare for high-cadence launch operations, strengthening capability across the U.S. space industrial base.

About Stoke Space

Stoke is scaling the space economy by providing lower-cost, on-demand transport to, through and from space. It’s developing the fully and rapidly reusable Nova rocket designed to operate with aircraft-like frequency. Stoke’s technology development has been funded by the United States Space Force, DIU, NASA, the National Science Foundation, and other government and private partners.

Contacts

Media Contact

John Taylor for Stoke Space

+ 1 (571) 437-4685

jtaylor@stokespace.com

Toyota partners with Machina Labs to advance custom automotive manufacturing with AI and Robotics

Toyota pilot program and Woven Capital investment support automotive manufacturing breakthrough

Machina Labs, a leader in advanced manufacturing and robotics, today announced next-generation manufacturing methods for automotive body panels and accessories. This will enable automakers to bring customized vehicles to market at mass-production prices, representing a breakthrough in automotive manufacturing customization.

The announcement was made today at UP.Summit, alongside a pilot of the technology with Toyota Motor North America and a strategic investment from Woven Capital, Toyota’s growth-stage venture investment arm. The pilot project will apply Machina’s RoboForming technology to customize production body panels, with the goal of bringing automotive-grade quality and throughput to low-volume manufacturing.

This capability directly addresses a massive and growing market: the automotive customization and accessories industry, valued at $2.4 billion in 2024 for trucks alone. Conventional high-volume manufacturing often overlooks opportunities for customized production. By introducing flexible, low-volume production capabilities within existing operations, Machina Labs is unlocking new value streams at scale.

“Traditional production tools are often massive, comparable in size to a small car and weighing over 20 tons,” said Ed Mehr, Co-Founder and CEO of Machina Labs. “With our solution, the need for dedicated tooling per model variation is eliminated. That means lower project capital, less storage both in-plant and for past models, which today can last up to 15 years, and faster production changeovers.”

“We envision a future where customization is available for every Toyota driver,” said Zach Choate, General Manager of Production Engineering and Core Engineering Manufacturing at Toyota Motor North America. “The ability to deliver a bespoke product into the hands of our customers is the type of innovation we are excited about.”

“AI-powered manufacturing is transforming how products are designed and produced at scale,” said George Kellerman, Founding Managing Director at Woven Capital. “Customers increasingly demand more personalized products while engineers need faster, more cost-effective paths from concept to production without the constraints of traditional supply chains. We're excited to team up with Machina Labs, supercharge their development roadmap in automotive, and support their journey in accelerating innovations that advance the future of manufacturing.”

Machina Labs’ AI-driven RoboCraftsman™ platform and RoboForming™ technology, a proprietary form of incremental sheet forming, have a proven ability to deliver highly customized sheet-metal panels for automotive and aerospace vehicles at high volume, high quality, and short lead times, a capability that is not otherwise possible with today's manufacturing processes.

Furthermore, this shift impacts process flow within the factory: current manufacturing models require separate storage, repackaging, and dedicated assembly lanes for custom parts. Machina’s approach enables on-demand part production in low volumes from cells near the assembly line, allowing for dynamic batching or broadcast-driven manufacturing – all without disrupting existing flow.

About Machina Labs

Machina Labs is unlocking manufacturing with AI and robotics, delivering flexible, on-demand production solutions that eliminate traditional tooling constraints. The company’s RoboCraftsman™ platform integrates advanced robotics and AI-driven process controls to rapidly manufacture complex metal structures for aerospace, defense, and automotive applications. Founded in Los Angeles in 2019, Machina Labs is building the next generation of intelligent, adaptive, and software-driven factories. For more information, please visit https://machinalabs.ai and view our press kit here.

Contacts

Press Contact

machinalabs@sbscomms.com

AI infrastructure leader Lambda appoints Heather Planishek to Board of Directors as Audit Chair

As it prepares for the public markets and an IPO with Morgan Stanley, JPMorgan, and Citi, Lambda has added Heather Planishek to its Board to help move the company into its next phase of growth in the global AI economy as a public reporting company.

An industry veteran, Heather brings deep financial and operational expertise from Tines and Palantir.

Lambda, the Superintelligence Cloud, today announced the appointment of Heather Planishek to its Board of Directors, where she will serve as Audit Chair. Planishek brings extensive experience scaling high-growth technology companies, currently serving as Chief Operating and Financial Officer at Tines, the intelligent workflow platform. She previously served as Chief Accounting Officer at Palantir Technologies Inc. (NASDAQ: PLTR), where she guided the company through a period of significant growth and its transition to the public markets.

As Audit Chair, Planishek will lead the board's oversight of Lambda's financial reporting, risk management, and compliance. Her appointment strengthens Lambda's board governance as the company continues to scale its operations, serving the world’s leading AI labs, hyperscalers, and more than 200,000 AI developers across research, startups, and enterprise.

“Heather brings world-class financial and operational expertise to our board of directors,” said Stephen Balaban, co-founder and CEO of Lambda. “I’m very proud to welcome her appointment as our Audit Chair and look forward to working closely with her.”

Throughout her career, Planishek has established a reputation for combining strategic thinking with mission-critical execution. Her earlier roles at Hewlett Packard Enterprise and EY provided deep expertise in enterprise-scale finance and operations.

"Lambda's singular focus on AI infrastructure over the past decade has positioned the company at the forefront of one of technology's most important transformations," said Planishek. “I’m excited to partner with Lambda’s leadership and board to uphold strong governance as the company continues transforming AI infrastructure.”

About Lambda

Lambda, The Superintelligence Cloud, builds gigawatt-scale AI factories for training and inference.

Lambda is where AI teams find infinite scale to build and deploy intelligence: from prototyping to serving billions of users in production. We build the underlying infrastructure that powers AI.

Lambda’s customers include top AI labs, enterprises, and hyperscalers. We have over a decade of experience co-engineering, deploying, and operating mission-critical GPU capacity for the largest companies in the world.

Lambda was founded in 2012 by published AI engineers. Lambda’s mission is to make compute as ubiquitous as electricity and give every person access to artificial intelligence. One person, one GPU.

Contacts

Media Contact

pr@lambdal.com

Robotics leader Machina Labs partners with U.S. Air Force to advance AI-driven manufacturing for defense

In partnership with the ARM Institute, this multiyear program will enhance the deployable RoboCraftsman with advanced AI/ML capabilities to support DoD sustainment modernization

Machina Labs, a leader in advanced robotics manufacturing that combines AI and robotics for the rapid production of metal structures, today announced it has been awarded a contract from the U.S. Air Force Research Laboratory (AFRL) in partnership with the Advanced Robotics for Manufacturing (ARM) Institute, a Department of Defense (DoD) Manufacturing Innovation Institute based in Pittsburgh, PA.

The multiyear award will expand Machina Labs’ flagship RoboCraftsman™ platform with advanced AI and machine learning capabilities tailored to DoD sustainment modernization needs. The program will initially focus on automating tool path generation for airframe skins and panels, which are critical to maintaining fleet readiness, while laying the foundation to extend these capabilities across additional sustainment applications.

“This award underscores RoboCraftsman’s role in the DoD’s sustainment modernization strategy,” said Edward Mehr, CEO and Co-Founder of Machina Labs. “Our mission is to deliver a portable manufacturing solution that can operate closer to the point of need, even in contested logistics environments. This enables mission-critical components to be produced faster, more efficiently, and without dependency on traditional tooling.”

The program will apply AI and machine learning, combined with data generated on RoboCraftsman platforms, to automate tool path generation for airframe production. Airframes are a critical sustainment challenge, where long delays in sourcing qualified parts have grounded aircraft and limited readiness. The broader vision is clear: RoboCraftsman is being developed as a flexible, deployable manufacturing solution capable of producing structural components for a wide range of defense platforms — from aircraft and missiles to vehicles and weapon systems.

For DoD stakeholders, these new capabilities are expected to help reduce Mission Impaired Capability Awaiting Parts (MICAP) ratios, shorten production timelines, and improve availability of critical aircraft. More broadly, the enhancements reinforce Machina Labs’ strategy to scale RoboCraftsman’s AI-driven, software-defined manufacturing platform across defense, aerospace, automotive and industrial markets.

About Machina Labs

Machina Labs is unlocking manufacturing with AI and robotics, delivering flexible, on-demand production solutions that eliminate traditional tooling constraints. The company’s RoboCraftsman™ platform integrates advanced robotics and AI-driven process controls to rapidly manufacture complex metal parts and structures for aerospace, defense, automotive, and industrial applications. Founded in Los Angeles in 2019, Machina Labs is building the next generation of intelligent, adaptive, and software-driven factories. For more information, please visit https://machinalabs.ai.

About the ARM Institute

The Advanced Robotics for Manufacturing (ARM) Institute is a Manufacturing Innovation Institute (MII) funded by the Office of the Secretary of Defense under Agreement Number W911NF-17-3-0004 and is part of the Manufacturing USA® network. The ARM Institute leverages a unique, robust, and diverse consortium of 450+ members and partners across industry, academia, and government to make robotics, autonomy, and artificial intelligence more accessible to U.S. manufacturers large and small, train and empower the manufacturing workforce, strengthen our economy and global competitiveness, and elevate national security and resilience. Based in Pittsburgh, PA since 2017, the ARM Institute is leading the way to a future where people & robots work together to respond to our nation’s greatest challenges and to produce the world’s most desired products. For more information, visit www.arminstitute.org.

About the Air Force Research Laboratory

The Air Force Research Laboratory (AFRL) is the primary scientific research and development center for the Department of the Air Force. AFRL plays an integral role in leading the discovery, development, and integration of affordable warfighting technologies for our air, space, and cyberspace force. With a workforce of more than 12,500 across nine technology areas and 40 other operations across the globe, AFRL provides a diverse portfolio of science and technology ranging from fundamental to advanced research and technology development. For more information, visit: www.afresearchlab.com.

AI inference chip maker Groq raises $750 Million at $6.9 Billion as demand surges

ALSO READ:

Groq more than doubles valuation to $6.9 billion as investors bet on AI chips - Reuters

Nvidia AI chip challenger Groq raises even more than expected, hits $6.9B valuation - TechCrunch

"Compute is the new oil," said Groq founder and CEO Jonathan Ross on Bloomberg when referencing AI and its scale in the 4th industrial age.

The investment strengthens Groq's role in the American AI Stack, delivering fast, affordable compute worldwide.

Groq, the pioneer in AI inference, today announced $750 million in new financing at a post-money valuation of $6.9 billion. The round was led by Disruptive with significant investment from BlackRock, Neuberger Berman, Deutsche Telekom Capital Partners and a large US-based West Coast mutual fund manager. The raise also included continued support from Samsung, Cisco, D1, Altimeter, 1789 Capital and Infinitum.

Groq powers more than two million developers and Fortune 500 companies with fast, affordable compute and is growing its presence globally, building on existing data centers in North America, Europe, and the Middle East.

"Inference is defining this era of AI, and we're building the American infrastructure that delivers it with high speed and low cost." — Jonathan Ross, Groq Founder and CEO

The White House recently issued an executive order promoting the export of the American AI Technology Stack, emphasizing the global deployment of US-origin AI technology. Groq is playing a central role, with its American-built inference infrastructure already powering developers and enterprises worldwide.

Disruptive, a Dallas-based growth investment firm, has backed some of the most transformative and successful companies in the last decade, including large investments in Palantir, Airbnb, Spotify, Shield AI, Hims, Databricks, Stripe, Slack and many other AI leaders and AI adjacent businesses. Disruptive has invested nearly $350 million in Groq.

"As AI expands, the infrastructure behind it will be as essential as the models themselves," said Alex Davis, Founder, Chairman, and CEO of Disruptive. "Groq is building that foundation, and we couldn't be more excited to partner with Jonathan and his team in this next chapter of explosive growth."

About Groq

Groq is the inference infrastructure that powers AI with the speed and cost it requires. Founded in 2016, the company created the LPU and GroqCloud to ensure compute is faster and more affordable. Today, Groq is a key part of the American AI Stack and trusted by more than two million developers and many of the world's leading Fortune 500 companies.

Groq Media Contact: pr-media@groq.com